Web scraping is the automated collection of data from the internet. The process usually relies on a “crawler”, a program that browses many web pages and extracts data from specific ones. There are several reasons to scrape pages, but the main one is speed: scraping removes the need to collect data by hand. It is also an option when you need data from a website that does not offer an API.

In this blog post, we will learn how to scrape New York Times news articles using Node.js and Puppeteer.

Puppeteer controls a Chromium browser behind the scenes to render HTML and run JavaScript. This makes it very handy for retrieving content that is loaded by JavaScript/AJAX functions.

To begin, install Puppeteer in the directory where you'll write your scraping scripts. For example, create and set up a directory like this:

```shell
mkdir puppeteer
cd puppeteer
npm install puppeteer --save
```

The installation of Puppeteer and Chromium will take a few moments.

Once that's done, let's start with a script like this:

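```javascript
const puppeteer = require('puppeteer');

// Launch headless Chromium with a regular desktop Chrome user agent.
// (No extra quotes inside the flag: args are passed to Chromium as-is.)
puppeteer.launch({ headless: true, args: ['--user-agent=Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3312.0 Safari/537.36'] }).then(async browser => {
  const page = await browser.newPage();
  await page.goto('https://www.nytimes.com/');

  // Wait until the <body> element exists before reading the page.
  await page.waitForSelector('body');

  const rposts = await page.evaluate(() => {
    // The extraction code goes here; for now nothing is returned.
  });

  console.log(rposts);
  await browser.close();
}).catch(function (error) {
  console.error(error);
});
```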

Even though it looks like a lot, the script simply launches the Puppeteer browser and opens a new page. It then loads the URL we want and waits until the page's `body` element is available.

The `page.evaluate` function runs a callback inside the page itself. There, you can query the page's content with CSS selectors through standard DOM methods such as `document.querySelectorAll`.

The `headless: true` option in the `puppeteer.launch` call starts the browser in headless mode. You won't see a browser window, but the browser is running in the background.

The `--user-agent` string makes the browser identify itself as Chrome on a Mac rather than as headless Chrome, which reduces the chance of being blocked.

Save this file as `get_nyt.js` and run it to check that it works without errors:

```shell
node get_nyt.js
```

Let’s see if we can scrape some information now…

Navigate to the nytimes.com website with Chrome.

We will scrape the headline, link, and summary of each article. To see what we're working with, open Chrome's Inspect tool.

After a little experimenting, you can see that each post is wrapped in a tag with the class `assetWrapper`. This was true at the time of writing; the NYT markup changes from time to time, so confirm the current class name in the inspector.

Since everything we need is contained within this one class, we'll loop over each matching element with `forEach` and pull out the individual pieces of data from inside it.

So, here’s what the code will look like…

```javascript
const puppeteer = require('puppeteer');

puppeteer.launch({ headless: true, args: ['--user-agent=Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3312.0 Safari/537.36'] }).then(async browser => {
  const page = await browser.newPage();
  await page.goto('https://www.nytimes.com/');
  await page.waitForSelector('body');

  // This callback runs inside the browser page, not in Node.
  const rposts = await page.evaluate(() => {
    // Every article card is wrapped in an element with the class "assetWrapper".
    const posts = document.body.querySelectorAll('.assetWrapper');
    const postItems = []; // declared locally instead of as an implicit global

    posts.forEach((item) => {
      let title = '';
      let summary = '';
      let link = '';
      try {
        title = item.querySelector('h2').innerText;
        if (title !== '') {
          // These throw if the card has no <p> or <a>; the catch skips that card.
          summary = item.querySelector('p').innerText;
          link = item.querySelector('a').href;
          postItems.push({ title: title, link: link, summary: summary });
        }
      } catch (e) {
        // Skip cards that are missing a headline, summary, or link.
      }
    });

    return { posts: postItems };
  });

  console.log(rposts);
  await browser.close();
}).catch(function (error) {
  console.error(error);
});
```

As you can see, the `h2` tag always contains the article's title, which we extract. The query

```javascript
summary = item.querySelector('p').innerText;
```

returns the summary for each article. We wrap it in a `try…catch` block because some articles may not have a description. In that case `querySelector('p')` returns `null`, and reading `innerText` from it throws an error. Without the `catch`, that error would abort the whole `evaluate` call.
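An empty `catch` skips the whole card whenever any one field is missing. An alternative, which is not the approach used in the script above, is optional chaining (`?.`). It lets each field fall back to an empty string on its own. The sketch below wraps that logic in a hypothetical `extractPost` helper.

Because the helper only needs an object with a `querySelector` method, you can try it in plain Node with stub elements. To use it in the scraper, define the same function inside the `page.evaluate` callback, since that callback runs in the browser and cannot see functions defined in Node.

```javascript
// Hypothetical helper: pull title, link, and summary out of one article card.
// `item` is anything with a DOM-like querySelector(selector) method.
function extractPost(item) {
  // Optional chaining yields undefined instead of throwing when an element is missing.
  const title = item.querySelector('h2')?.innerText?.trim() ?? '';
  if (title === '') return null; // skip cards without a headline, as the original loop does
  return {
    title,
    link: item.querySelector('a')?.href ?? '',
    summary: item.querySelector('p')?.innerText?.trim() ?? '',
  };
}

// Stub element for trying the helper outside a browser (illustration only).
function makeStub(fields) {
  return { querySelector: (sel) => fields[sel] ?? null };
}

const cards = [
  makeStub({ h2: { innerText: 'Headline A' }, a: { href: 'https://example.com/a' }, p: { innerText: 'Summary A' } }),
  makeStub({ h2: { innerText: 'Headline B' }, a: { href: 'https://example.com/b' } }), // no summary
  makeStub({ p: { innerText: 'Orphan paragraph' } }), // no headline
];

const posts = cards.map(extractPost).filter((p) => p !== null);
console.log(posts);
```

Unlike the `try…catch` version, a card with a headline but no summary is kept with an empty `summary` rather than dropped. Which behavior is preferable depends on what you do with the data.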

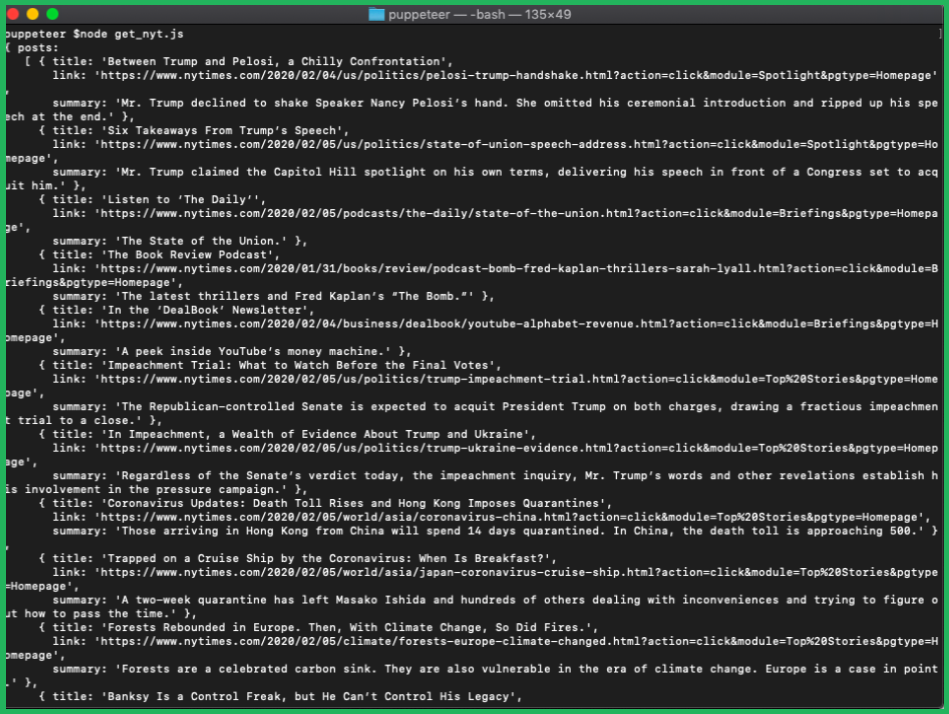

If you execute this, it should print all of the articles in the following manner.

If you apply this in practice and scale to hundreds of links, you are likely to find that the New York Times (NYT) blocks your IP address. In that case, using a rotating proxy service to cycle through IPs is a must.

Without one, automated location-, usage-, and bot-detection systems will frequently block your IP address.
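If you run your own pool of proxies rather than a managed service, one simple way to rotate IPs with Puppeteer is round-robin rotation. You hand out proxies in turn and pass each one to Chromium through its `--proxy-server` switch. The sketch below assumes a hypothetical list of proxy addresses (`proxy1.example.com` and so on). `makeProxyRotator` and `launchOptionsFor` are illustrative names, not part of Puppeteer.

```javascript
// Hypothetical proxy pool; replace with addresses from your own provider.
const PROXIES = [
  'http://proxy1.example.com:8000',
  'http://proxy2.example.com:8000',
  'http://proxy3.example.com:8000',
];

// Returns a function that hands out proxies in round-robin order.
function makeProxyRotator(proxies) {
  let index = 0;
  return function nextProxy() {
    const proxy = proxies[index % proxies.length];
    index += 1;
    return proxy;
  };
}

// Builds options for puppeteer.launch(); --proxy-server is a Chromium switch
// that routes all of that browser's traffic through the given proxy.
function launchOptionsFor(proxy) {
  return { headless: true, args: [`--proxy-server=${proxy}`] };
}

const nextProxy = makeProxyRotator(PROXIES);
// Four launches cycle through the three proxies and wrap around to the first.
const optionsPerRun = [1, 2, 3, 4].map(() => launchOptionsFor(nextProxy()));
console.log(optionsPerRun.map((o) => o.args[0]));
```

Because `--proxy-server` applies to the whole browser process, rotating this way means launching a fresh browser for each batch of requests, for example with `puppeteer.launch(launchOptionsFor(nextProxy()))`. Proxies that require a username and password can be handled with `page.authenticate()` after opening the page.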

Our rotating proxy server, Proxies API, is a simple API designed to solve IP-blocking issues instantly.

- Thousands of high-speed rotating proxies located around the globe.

- Automatic IP rotation.

- Automatic User-Agent string rotation (which simulates requests from different, valid web browsers and browser versions).

- Automatic CAPTCHA solving.

Hundreds of our customers have used this simple API to solve the problem of IP restrictions.

The entire system can be accessed from any programming language through a simple API call like the one below.

You don't even need to run Puppeteer, because we render JavaScript behind the scenes. All you have to do is fetch the data and parse it in any language (Node, PHP, etc.) or with a framework such as Scrapy or Nutch.

In each case, you simply call the API URL, and the page is rendered for you:

```shell
curl "https://xbyte.io/?key=API_KEY&url=https://example.com"
```
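The same call can be made from Node. The sketch below builds the request URL with `URLSearchParams`, which percent-encodes the target page's address so that any `?`, `&`, or `=` inside it isn't mistaken for one of the API's own parameters. The `key` and `url` parameter names come from the curl example. `API_KEY` is a placeholder, and we assume the service decodes the `url` parameter like any standard query string. `fetch` is available globally in Node 18 and later.

```javascript
// Builds the request URL for the API; `key` and `url` follow the curl example.
function buildApiUrl(apiKey, targetUrl) {
  const endpoint = new URL('https://xbyte.io/');
  // URLSearchParams percent-encodes the target, so its own ?, & and = characters
  // cannot be confused with the API's query parameters.
  endpoint.search = new URLSearchParams({ key: apiKey, url: targetUrl }).toString();
  return endpoint.toString();
}

// Fetches the rendered HTML through the API (requires Node 18+ for global fetch).
async function fetchRendered(apiKey, targetUrl) {
  const response = await fetch(buildApiUrl(apiKey, targetUrl));
  if (!response.ok) {
    throw new Error(`Request failed with status ${response.status}`);
  }
  return response.text();
}

console.log(buildApiUrl('API_KEY', 'https://www.nytimes.com/'));
// prints https://xbyte.io/?key=API_KEY&url=https%3A%2F%2Fwww.nytimes.com%2F
```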

You can contact us to get genuine results.