Today we will see how to scrape Amazon Best Sellers products using BeautifulSoup and Python in a simple and elegant way.

The goal of this tutorial is to tackle a real-world problem while keeping things simple, so you can get familiar with the tools and see practical results quickly.

First, make sure Python 3 is installed. If it isn't, install it before proceeding.

After that, you can install BeautifulSoup using:

```shell
pip3 install beautifulsoup4
```

We will also need the libraries requests, lxml, and soupsieve: requests to download the page, lxml to parse the HTML, and soupsieve to support CSS selectors. Install them using:

```shell
pip3 install requests soupsieve lxml
```

Once they are installed, open your editor and type in:

```python
# -*- coding: utf-8 -*-
from bs4 import BeautifulSoup
import requests
```

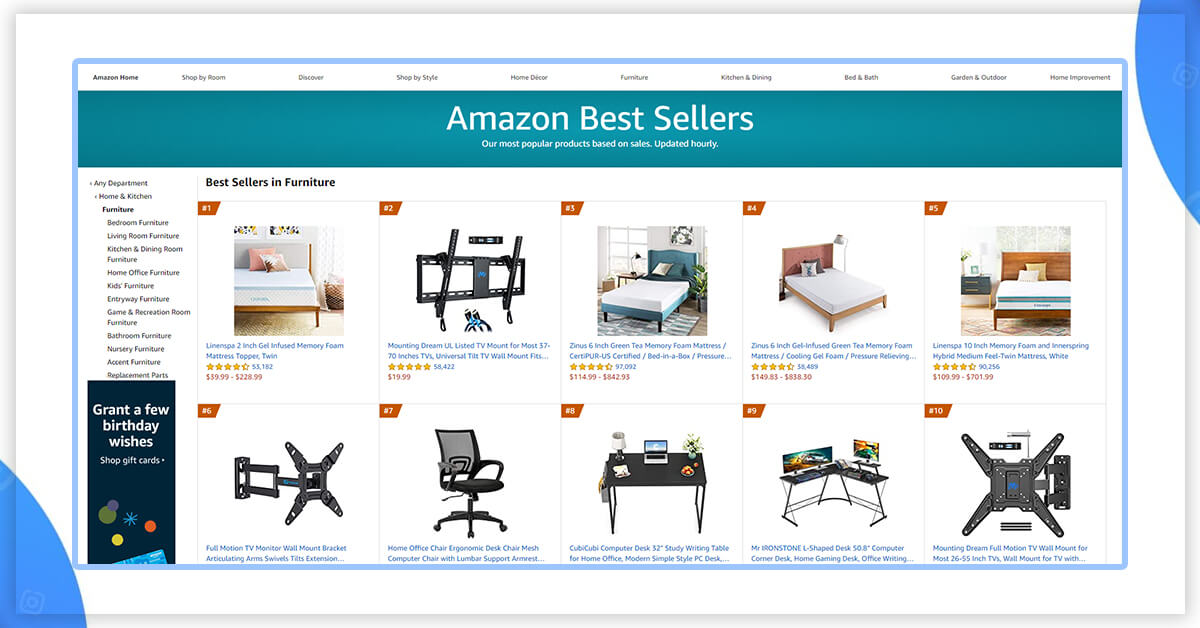

Next, open Amazon’s Best Sellers listing page in your browser and look at the information available there.

This is how it looks:

Now let’s return to the code. We will fetch the page while pretending to be a browser, by sending a browser User-Agent header with the request:

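```python
# -*- coding: utf-8 -*-
from bs4 import BeautifulSoup
import requests

# A browser-like User-Agent so the request looks like it comes from Safari
headers = {'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'}
url = 'https://www.amazon.in/gp/bestsellers/garden/ref=zg_bs_nav_0/258-0752277-9771203'

# Download the Best Sellers page and parse it with the lxml parser
response = requests.get(url, headers=headers)
soup = BeautifulSoup(response.content, 'lxml')

# Print the whole parsed HTML page
print(soup.prettify())
```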

Save this file as scrapeAmazonBS.py and run it:

```shell
python3 scrapeAmazonBS.py
```

You should see the entire HTML page printed.

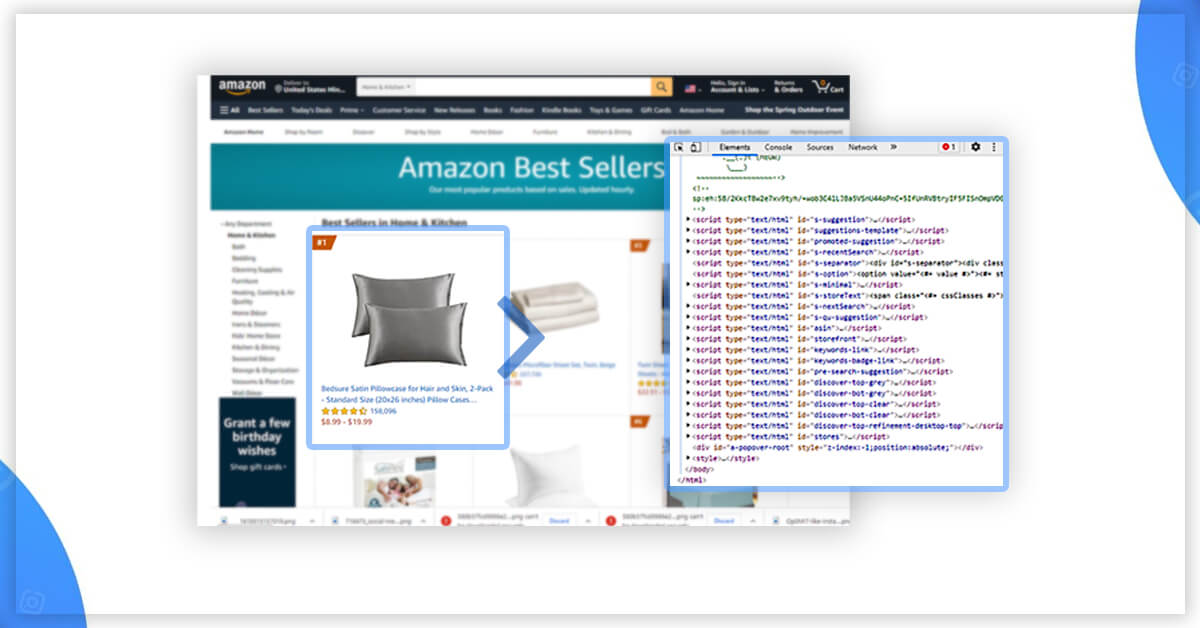
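If you plan to request more than one page, the same request can be wrapped in a small reusable helper. This is a minimal sketch, and the helper names `make_session` and `fetch_soup` are hypothetical: a `requests.Session` sends the browser-like headers on every call, `timeout` stops a stalled connection from hanging forever, and `raise_for_status()` turns 4xx/5xx responses into exceptions instead of silently parsing an error page. It defaults to Python's built-in `'html.parser'` so it runs without lxml; pass `parser='lxml'` to match the tutorial.

```python
# -*- coding: utf-8 -*-
from bs4 import BeautifulSoup
import requests

HEADERS = {
    'User-Agent': ('Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) '
                   'AppleWebKit/601.3.9 (KHTML, like Gecko) '
                   'Version/9.0.2 Safari/601.3.9')
}


def make_session(headers=HEADERS):
    """Hypothetical helper: a Session that sends `headers` with every request."""
    session = requests.Session()
    session.headers.update(headers)
    return session


def fetch_soup(url, session=None, timeout=10, parser='html.parser'):
    """Hypothetical helper: download `url` and return it parsed as BeautifulSoup.

    Raises requests.HTTPError for 4xx/5xx responses and requests.Timeout
    if the server does not respond within `timeout` seconds.
    """
    if session is None:
        session = make_session()
    response = session.get(url, timeout=timeout)
    response.raise_for_status()
    return BeautifulSoup(response.content, parser)
```

Usage would look like `soup = fetch_soup('https://www.amazon.in/gp/bestsellers/garden/ref=zg_bs_nav_0/258-0752277-9771203')` followed by `print(soup.prettify())`. Passing one session into several calls also reuses the underlying connection.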

Now let’s use CSS selectors to extract the data we need. To find the right selectors, open the page in Chrome and use the Inspect tool.

At the time of writing, each product’s data sits inside an element with the class ‘zg-item-immersion’, so the CSS selector ‘.zg-item-immersion’ picks out all of them. The code now looks like this:

```python
# -*- coding: utf-8 -*-
from bs4 import BeautifulSoup
import requests

headers = {'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'}
url = 'https://www.amazon.in/gp/bestsellers/garden/ref=zg_bs_nav_0/258-0752277-9771203'

response = requests.get(url, headers=headers)
soup = BeautifulSoup(response.content, 'lxml')

# Each best-seller entry is an element with the class 'zg-item-immersion'
for item in soup.select('.zg-item-immersion'):
    try:
        print('----------------------------------------')
        print(item)
    except Exception as e:
        # raise e
        print('')
```

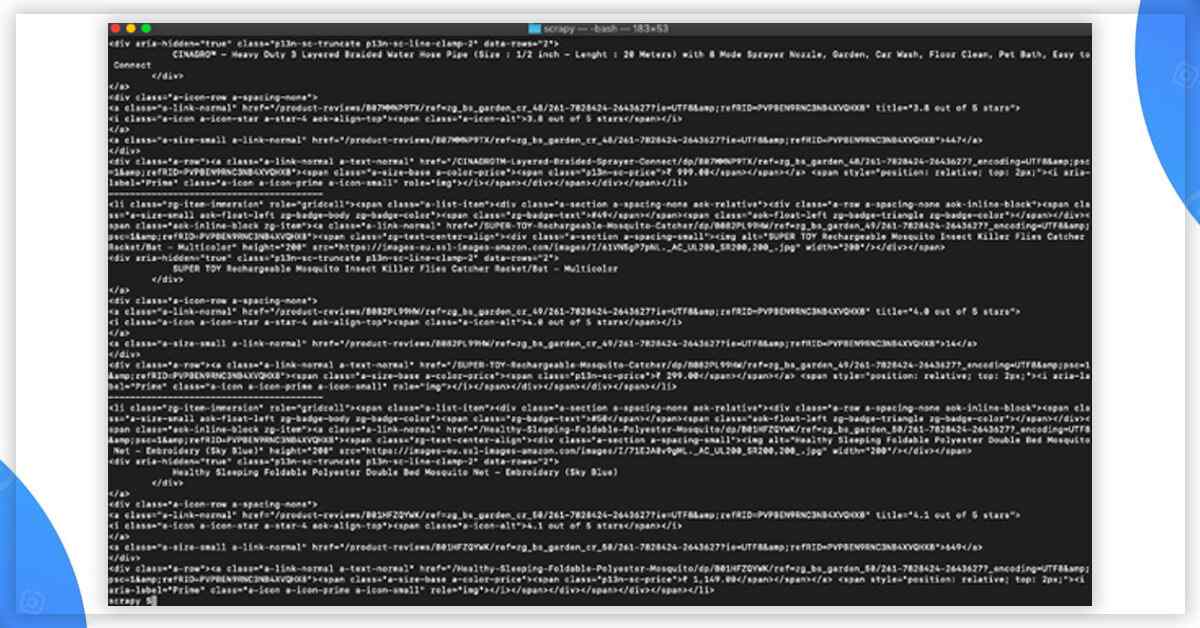

This prints the full contents of every element that holds a product’s data.
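While you experiment with selectors, it can help to download the page once and test selectors against the saved copy, so you do not hit Amazon on every attempt. This is a minimal sketch: the file name `bestsellers.html` and the helpers `save_page`, `load_soup`, and `count_matches` are hypothetical, and the HTML sample is hand-written to mimic only the ‘zg-item-immersion’ structure described above. Amazon’s real markup is much larger and may have changed.

```python
from pathlib import Path

from bs4 import BeautifulSoup

# Hypothetical sample that only mimics the structure described above.
SAMPLE_HTML = """
<ol>
  <li class="zg-item-immersion"><span class="p13n-sc-truncate">Garden Hose</span></li>
  <li class="zg-item-immersion"><span class="p13n-sc-truncate">Plant Pot</span></li>
  <li class="other-item">Not a product</li>
</ol>
"""


def save_page(html_bytes, path='bestsellers.html'):
    """Write raw page bytes (e.g. response.content) to disk once."""
    Path(path).write_bytes(html_bytes)


def load_soup(path='bestsellers.html'):
    """Parse a previously saved page instead of downloading it again."""
    return BeautifulSoup(Path(path).read_bytes(), 'html.parser')


def count_matches(soup, selector):
    """Return how many elements a CSS selector matches."""
    return len(soup.select(selector))


soup = BeautifulSoup(SAMPLE_HTML, 'html.parser')
print(count_matches(soup, '.zg-item-immersion'))  # 2
```

With a live response you would call `save_page(response.content)` once and then iterate on `count_matches(load_soup(), '...')` until the selector matches what you expect.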

Next, we can select the classes inside each of these elements that contain the fields we want.

```python
# -*- coding: utf-8 -*-
from bs4 import BeautifulSoup
import requests

headers = {'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'}
url = 'https://www.amazon.in/gp/bestsellers/garden/ref=zg_bs_nav_0/258-0752277-9771203'

response = requests.get(url, headers=headers)
soup = BeautifulSoup(response.content, 'lxml')

for item in soup.select('.zg-item-immersion'):
    try:
        print('----------------------------------------')
        print(item)
        # Product title
        print(item.select('.p13n-sc-truncate')[0].get_text().strip())
        # Price
        print(item.select('.p13n-sc-price')[0].get_text().strip())
        # Star rating text
        print(item.select('.a-icon-row i')[0].get_text().strip())
        # Number of reviews (second link in the rating row) and its URL
        print(item.select('.a-icon-row a')[1].get_text().strip())
        print(item.select('.a-icon-row a')[1]['href'])
        # Product image URL
        print(item.select('img')[0]['src'])
    except Exception as e:
        # An item missing one of these elements raises IndexError or KeyError;
        # uncomment the next line to see the error instead of skipping the item
        # raise e
        print('')
```

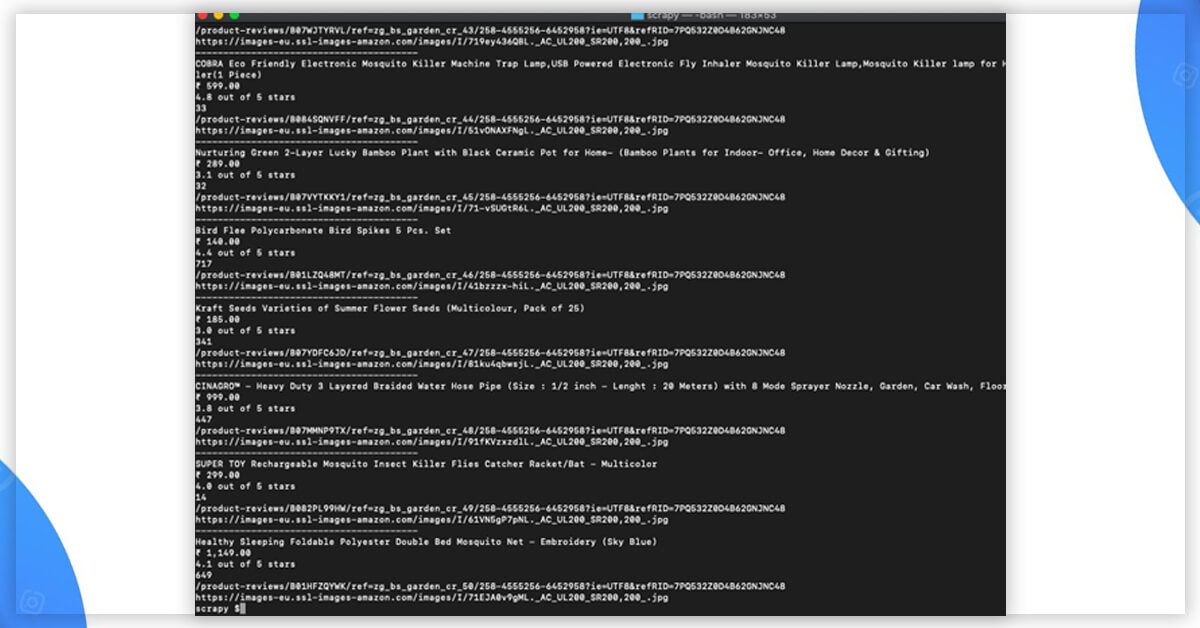

Run it, and it prints all of this information for each product.

That’s it! We have the results.
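Printing is enough for a first look. To store or compare the results, one option is to collect each product into a dictionary, using `select_one()` (which returns `None` when nothing matches) so that a missing field becomes `None` instead of skipping the rest of the item. This sketch reuses the selectors from the tutorial code; the helper names `text_or_none`, `parse_rating`, and `parse_item` and the sample HTML are hypothetical, and the rating parser assumes text of the form `4.3 out of 5 stars`.

```python
import re

from bs4 import BeautifulSoup

# Hypothetical sample mimicking one best-seller entry; real markup may differ.
SAMPLE_HTML = """
<li class="zg-item-immersion">
  <img src="https://example.com/hose.jpg">
  <div class="p13n-sc-truncate"> Garden Hose </div>
  <div class="a-icon-row">
    <a href="/hose/reviews"><i><span>4.3 out of 5 stars</span></i></a>
    <a href="/hose/reviews">1,204</a>
  </div>
  <span class="p13n-sc-price">₹ 499.00</span>
</li>
"""


def text_or_none(parent, selector):
    """Stripped text of the first match, or None if the selector matches nothing."""
    element = parent.select_one(selector)
    return element.get_text(strip=True) if element is not None else None


def parse_rating(text):
    """Assumes text like '4.3 out of 5 stars'; returns 4.3, or None otherwise."""
    match = re.match(r'\s*([\d.]+)\s+out of', text or '')
    return float(match.group(1)) if match else None


def parse_item(item):
    """Collect the fields printed in the tutorial into one dict."""
    links = item.select('.a-icon-row a')
    review_link = links[1] if len(links) > 1 else None
    image = item.select_one('img')
    return {
        'title': text_or_none(item, '.p13n-sc-truncate'),
        'price': text_or_none(item, '.p13n-sc-price'),
        'rating': parse_rating(text_or_none(item, '.a-icon-row i')),
        'reviews': review_link.get_text(strip=True) if review_link is not None else None,
        'reviews_url': review_link.get('href') if review_link is not None else None,
        'image': image.get('src') if image is not None else None,
    }


soup = BeautifulSoup(SAMPLE_HTML, 'html.parser')
products = [parse_item(item) for item in soup.select('.zg-item-immersion')]
print(products[0]['title'], products[0]['rating'])  # Garden Hose 4.3
```

A list of dictionaries like `products` can then be handed to the standard library’s `csv.DictWriter` or `json.dump` without further reshaping.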

If you want to use this in production and scale it to thousands of links, you will find that your IP gets blocked very quickly. In that situation, a rotating proxy service that cycles through different IP addresses is nearly a must. You could use a service like Proxies API to route your calls through millions of domestic proxies.
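The idea behind rotating proxies can be sketched with `itertools.cycle`: each request goes out through the next proxy in a pool, and a failed request is retried through a different one. This is a minimal sketch, not the API of any particular proxy service: the proxy URLs and the helpers `proxy_pool` and `get_with_rotation` are hypothetical, while the `proxies` argument is the standard way to route a `requests` call through a proxy.

```python
import itertools

import requests

# Hypothetical placeholder proxies; a real pool comes from your proxy provider.
PROXY_URLS = [
    'http://user:pass@proxy1.example.com:8000',
    'http://user:pass@proxy2.example.com:8000',
    'http://user:pass@proxy3.example.com:8000',
]


def proxy_pool(proxy_urls):
    """Endlessly yield requests-style proxy dicts, one proxy at a time."""
    for proxy in itertools.cycle(proxy_urls):
        yield {'http': proxy, 'https': proxy}


def get_with_rotation(url, pool, session=None, headers=None, attempts=3, timeout=10):
    """Try `url` through successive proxies; re-raise the last error if all fail."""
    if attempts < 1:
        raise ValueError('attempts must be at least 1')
    if session is None:
        session = requests.Session()
    last_error = None
    for _ in range(attempts):
        proxies = next(pool)
        try:
            response = session.get(url, headers=headers, proxies=proxies, timeout=timeout)
            response.raise_for_status()
            return response
        except requests.RequestException as error:
            # Blocked (HTTP error), timed out, or connection refused: try the next proxy
            last_error = error
    raise last_error
```

In the tutorial script this would look like `pool = proxy_pool(PROXY_URLS)` once, then `response = get_with_rotation(url, pool, headers=headers)` in place of `requests.get(url, headers=headers)`.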

If you want to increase crawling speed and don’t want to set up your own infrastructure, you can use X-Byte Enterprise Crawling’s web scraper to scrape thousands of URLs at higher speeds.
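If you would rather keep running your own script, one common way to raise crawling speed is to fetch several pages concurrently, since each request spends most of its time waiting on the network. This is a minimal sketch using the standard library’s `ThreadPoolExecutor`; the `crawl` helper is hypothetical and assumes you supply a `fetch` function that takes a URL. Keep `max_workers` small, because more parallel requests from a single IP are likely to be blocked sooner.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def crawl(urls, fetch, max_workers=4):
    """Run fetch(url) for each URL on a thread pool.

    Returns (results, errors): two dicts keyed by URL, so one failing
    page does not stop the rest of the crawl.
    """
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        # Map each submitted future back to the URL it is fetching
        futures = {executor.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as error:  # record the failure and keep going
                errors[url] = error
    return results, errors
```

With the tutorial’s headers, a call might look like `results, errors = crawl(urls, lambda u: requests.get(u, headers=headers, timeout=10).content)`, after which each entry in `results` can be parsed with BeautifulSoup as before.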