Introduction: Why Smart Scraping Starts with Smart Tools

Businesses across industries rely on high-quality, real-time data to make decisions, analyze trends, and train AI algorithms. Whether the goal is tracking competitor pricing, gauging market sentiment, or aggregating reviews and content, web scraping is often the most practical way to collect those details. However, standard web scrapers struggle to keep up with the volume, especially as websites become more dynamic and new anti-scraping technologies emerge.

That’s where AI scraping tools come in. Using machine learning, natural language processing (NLP), and automation, AI scrapers can extract data at scale with much less manual upkeep. Choosing the right AI scraper is crucial for obtaining accurate, timely data and for complying with global regulations.

This guide walks you through everything you need to know: how AI scrapers work, which features to look for, how the top tools compare, and how to select the right solution for your use case. With the right scraping approach, you can expand your data operations, offload repetitive collection work to the scraper, and get more value from your data, leaving your team free to focus on turning raw data into business intelligence.

What Is AI-Powered Data Scraping?

AI-powered data scraping is the automated extraction of data from web pages using artificial intelligence. Traditional scrapers depend on fixed rules, while AI scrapers use machine learning to recognize patterns and adapt when a website’s structure changes. AI scrapers can also clean, filter, and analyze data in real time, which makes them well suited to today’s dynamic web. Because they can distinguish relevant from irrelevant data, they tend to produce higher-quality output.

AI scrapers benefit industries such as e-commerce, finance, and market research, where they are used to monitor prices, track news, and analyze customer sentiment. They turn varied raw data into useful information quickly and automatically, with limited human involvement.

How Does AI Enhance Web Scraping Efficiency?

Artificial intelligence has transformed web scraping by automating tasks that previously required significant manual effort, such as parsing content, cleaning noisy data, and identifying relevant patterns. Conventional scrapers follow a fixed set of rules and often break when a website’s layout changes. AI-powered scrapers, by contrast, are adaptive: they learn patterns in a page’s structure and content and adjust their extraction logic accordingly, so they can keep working when that structure changes.

Benefits of AI in Web Scraping:

- Automation at Scale: AI scrapers handle high-volume, real-time scraping with less human oversight.

- Increased Accuracy: AI scrapers clean scraped data and correct anomalies, which makes datasets more consistent and reliable.

- Structural Adaptability: These tools respond to layout changes, pop-ups, and dynamic content such as JavaScript-rendered elements, reducing both downtime and maintenance.
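
Much of the "increased accuracy" benefit comes down to normalizing and sanity-checking extracted values. The sketch below is a simple rule-based stand-in, not a machine-learning model. It assumes records are dicts with hypothetical `url` and `price` fields, deduplicates them by URL, parses price strings into numbers, and flags values that sit far from the median so a person (or a smarter model) can review them.

```python
import re
from statistics import median


def parse_price(raw):
    """Turn strings like '$1,299.00' or '24.50 USD' into floats; None if unparseable."""
    if raw is None:
        return None
    digits = re.sub(r"[^\d.]", "", str(raw))  # keep only digits and the decimal point
    try:
        return float(digits)
    except ValueError:  # empty or malformed, e.g. 'N/A' or '1.2.3'
        return None


def clean_records(records, outlier_factor=5.0):
    """Deduplicate by URL, normalize prices, and flag suspicious values."""
    seen, cleaned = set(), []
    for rec in records:
        if rec.get("url") in seen:
            continue  # drop repeated copies of a page we already have
        seen.add(rec.get("url"))
        cleaned.append({**rec, "price": parse_price(rec.get("price"))})

    prices = [r["price"] for r in cleaned if r["price"] is not None]
    mid = median(prices) if prices else None
    for r in cleaned:
        p = r["price"]
        # Missing prices, and prices more than outlier_factor times above or
        # below the median, are flagged for review rather than silently kept.
        r["suspect"] = p is None or (
            mid > 0 and (p > mid * outlier_factor or p < mid / outlier_factor)
        )
    return cleaned


rows = [
    {"url": "a", "price": "$19.99"},
    {"url": "a", "price": "$19.99"},  # duplicate
    {"url": "b", "price": "21.50 USD"},
    {"url": "c", "price": "$2,000"},  # far above the others
    {"url": "d", "price": "N/A"},
]
for row in clean_records(rows):
    print(row)
```

The median is used instead of the mean so that a single wildly wrong value does not shift the baseline it is compared against.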

Businesses can use AI web scrapers in many ways, from competitive intelligence to gauging user sentiment and supporting large-scale research. AI web scrapers let you extract and process large, complex datasets quickly and affordably, and they automate the tedious, technical parts of scraping. By reducing manual effort, these tools give your team time back for analysis, strategic thinking, and the business decisions the data is meant to inform.

What Are The Key Features to Look For in an AI Data Scraping Tool?

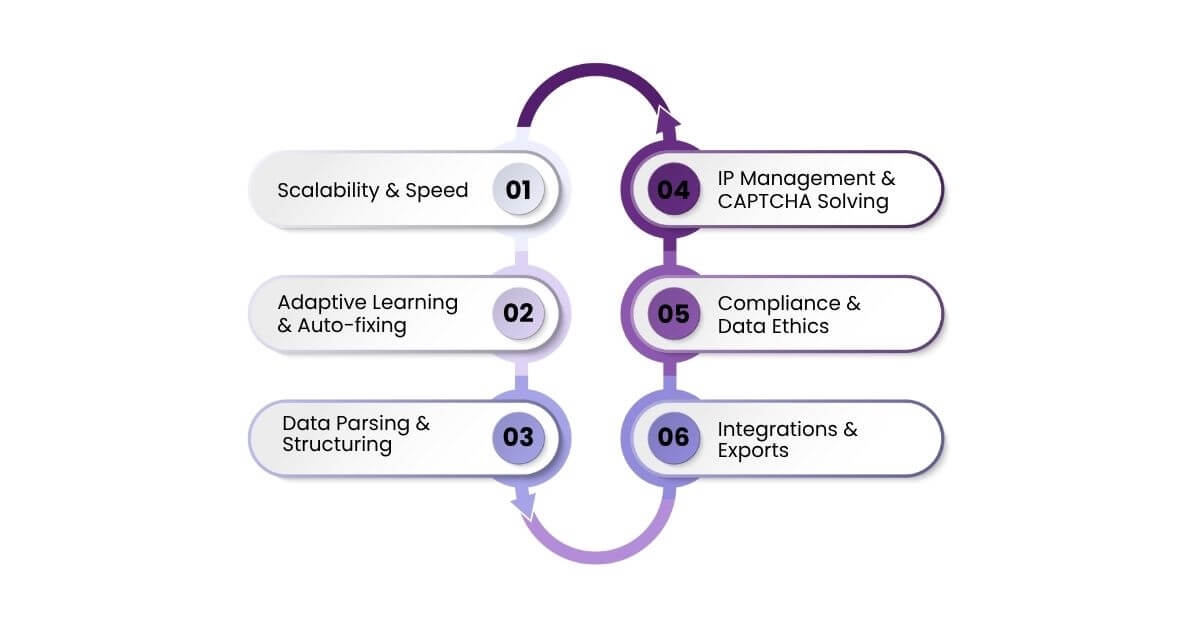

Choosing an AI web scraping tool is about more than dazzling dashboards or marketing claims. It’s about finding the right balance of features, scalable architecture, and compliance. Here are the key features to focus on:

- Scalability and Speed

Look for tools built on cloud-based architectures that can run multiple tasks in parallel. This lets you scale up operations without sacrificing speed or data integrity. Capabilities such as task queues, distributed processing, and load balancing are especially important for enterprise-level workloads.
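
To illustrate the parallel-task idea at small scale, here is a sketch using Python’s standard `concurrent.futures` thread pool. The `fetch` argument is a placeholder for whatever HTTP client or headless-browser call you actually use; in the usage example it is a hypothetical fake function so the sketch runs offline. A managed platform would layer distributed queues and load balancing on top of something like this.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def run_parallel(urls, fetch, max_workers=8):
    """Call fetch(url) for every URL on a bounded thread pool.

    Returns (results, errors), both dicts keyed by URL, so one failing
    page does not abort the whole job.
    """
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # record the failure and keep going
                errors[url] = exc
    return results, errors


# Hypothetical stand-in for a real HTTP fetch, so the example runs offline.
def fake_fetch(url):
    if url.endswith("/broken"):
        raise RuntimeError("HTTP 500")
    return f"<html>{url}</html>"


urls = [f"https://example.com/p/{i}" for i in range(5)] + ["https://example.com/broken"]
results, errors = run_parallel(urls, fake_fetch, max_workers=4)
print(len(results), "pages fetched,", len(errors), "failed")
```

`max_workers` caps concurrency, which is also a simple way to keep a large job from overwhelming a single site.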

- Adaptive Learning and Auto-fixing

Websites can change their structure from one day to the next. When a traditional scraper cannot find the elements it was told to extract, it typically fails and needs a code change. An AI web scraping tool with adaptive learning can recognize structural changes and automatically adjust its scraping logic, based on your training or specifications. Some tools also notify you when a detected structural change may affect the validity of the data.
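
Commercial tools use learned models to re-locate fields after a layout change. The sketch below approximates that behavior with a much simpler, rule-based technique: an ordered list of fallback selectors, plus a notification whenever the primary selector stops matching. It uses only Python’s standard `html.parser`; the selectors, class names, and sample markup are hypothetical.

```python
from html.parser import HTMLParser


class _FirstMatch(HTMLParser):
    """Collect the text inside the first <tag class="..."> element that matches."""

    def __init__(self, tag, css_class):
        super().__init__()
        self.tag, self.css_class = tag, css_class
        self.found = False  # True once a matching element has opened
        self.depth = 0      # nesting depth of `tag` inside the match
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if self.depth:
            if tag == self.tag:
                self.depth += 1  # nested element with the same tag name
            return
        if self.found:
            return  # only the first match is used
        classes = (dict(attrs).get("class") or "").split()
        if tag == self.tag and self.css_class in classes:
            self.found, self.depth = True, 1

    def handle_endtag(self, tag):
        if self.depth and tag == self.tag:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth:
            self.parts.append(data)


def extract_text(html, tag, css_class):
    """Return the stripped text of the first matching element, or None."""
    parser = _FirstMatch(tag, css_class)
    parser.feed(html)
    parser.close()
    return "".join(parser.parts).strip() if parser.found else None


def extract_with_fallback(html, selectors, notify=print):
    """Try (tag, class) selectors in priority order and report layout drift."""
    for i, (tag, css_class) in enumerate(selectors):
        value = extract_text(html, tag, css_class)
        if value:
            if i > 0:
                notify(f"primary selector missed; used fallback {tag}.{css_class}")
            return value
    notify("no selector matched; the page structure may have changed")
    return None


selectors = [("span", "price"), ("p", "product-price")]  # hypothetical class names
old_layout = '<div><span class="price">$19.99</span></div>'
new_layout = '<div><p class="product-price">$19.99</p></div>'
print(extract_with_fallback(old_layout, selectors))
print(extract_with_fallback(new_layout, selectors))
```

Passing a different `notify` callable (for example, one that posts to a chat channel) is how the "notify you when structure changes" behavior would plug into an alerting system.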

- Data Parsing and Structuring

Raw, unstructured HTML is rarely useful on its own. Choose tools with advanced parsing options such as entity recognition, content tagging, and text summarization. These features turn unstructured data into a usable form.
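
As a rough illustration of entity tagging, the sketch below uses a few regular expressions as a stand-in for a learned entity recognizer and wraps the results in a structured record. The entity types, patterns, and record layout are assumptions chosen for the example; a production tool would typically use an NLP model that handles far more variation.

```python
import re

# Regular expressions standing in for a learned entity recognizer.
PATTERNS = {
    "price": re.compile(r"[$€£]\s?\d+(?:,\d{3})*(?:\.\d+)?"),
    "percent": re.compile(r"\b\d+(?:\.\d+)?%"),
    "date": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),
}


def tag_entities(text):
    """Map each entity type to the list of matches found in the text."""
    return {label: pattern.findall(text) for label, pattern in PATTERNS.items()}


def to_record(url, text):
    """Combine the source URL, raw text, and tagged entities into one structured record."""
    return {"url": url, "text": text, "entities": tag_entities(text)}


snippet = "On 2025-03-01 the price dropped from $1,299.00 to $999, a 23% cut."
print(to_record("https://example.com/p/42", snippet))
```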

- IP Management and CAPTCHA Solving

Scraping too much, too fast can trigger anti-bot defenses and limit what data you can collect. Look for tools that include IP rotation, CAPTCHA-solving capabilities, and randomized user agents to reduce the risk of being blocked.
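
The pacing and rotation parts of this can be sketched in standard-library Python; CAPTCHA solving is out of scope here. Assumptions: `RequestPlanner` and `backoff_delays` are hypothetical names, the proxy URLs and user-agent string are placeholders, and the returned dict is meant to be handed to whatever HTTP client you use. The `clock` and `sleep` parameters exist only so the timing can be tested without real waiting.

```python
import itertools
import random
import time


class RequestPlanner:
    """Pace requests and cycle through proxies and user agents."""

    def __init__(self, proxies, user_agents, min_delay=1.0, jitter=0.5,
                 clock=time.monotonic, sleep=time.sleep):
        self._proxies = itertools.cycle(proxies)  # round-robin rotation
        self._user_agents = list(user_agents)
        self.min_delay, self.jitter = min_delay, jitter
        self._clock, self._sleep = clock, sleep
        self._last = None  # time of the previous request, if any

    def next_request_config(self):
        """Wait until the pacing interval has passed, then return request settings."""
        now = self._clock()
        if self._last is not None:
            # Minimum delay plus random jitter, minus time already elapsed.
            wait = self.min_delay + random.uniform(0, self.jitter) - (now - self._last)
            if wait > 0:
                self._sleep(wait)
        self._last = self._clock()
        return {
            "proxy": next(self._proxies),
            "headers": {"User-Agent": random.choice(self._user_agents)},
        }


def backoff_delays(retries, base=1.0, cap=60.0):
    """Exponential backoff schedule, e.g. for retrying after HTTP 429 responses."""
    return [min(cap, base * 2 ** attempt) for attempt in range(retries)]


planner = RequestPlanner(
    proxies=["http://proxy-a.example:8080", "http://proxy-b.example:8080"],  # placeholders
    user_agents=["ExampleScraper/1.0"],  # hypothetical user-agent string
    min_delay=0.2,
    jitter=0.1,
)
for _ in range(3):
    print(planner.next_request_config())
print(backoff_delays(5))
```

Random jitter keeps requests from arriving on a perfectly regular beat, and exponential backoff gives a site that is already signaling overload progressively more room before the next attempt.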

- Compliance and Data Ethics

Whether you scrape as an organization or an individual, you must account for GDPR, CCPA, and other privacy laws, and you remain responsible for how your scrapers collect and use data. Tools should respect robots.txt, honor opt-out requests, and maintain audit logs.
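
Of these compliance items, respecting robots.txt is the easiest to automate: Python’s standard `urllib.robotparser` module can check each URL against a site’s rules before it is queued. This sketch covers only robots.txt, not GDPR or CCPA obligations, opt-out handling, or audit logging. The robots.txt content and agent name are made up for illustration and are inlined so the example runs offline.

```python
from urllib.robotparser import RobotFileParser

# Inlined so the example runs offline; in practice you would call
# parser.set_url("https://<site>/robots.txt") followed by parser.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

AGENT = "ExampleScraper/1.0"  # hypothetical user-agent name


def build_policy(robots_txt):
    """Parse robots.txt content into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


policy = build_policy(ROBOTS_TXT)
for url in ["https://example.com/products/42", "https://example.com/checkout/cart"]:
    verdict = "allowed" if policy.can_fetch(AGENT, url) else "disallowed"
    print(url, verdict)
print("crawl delay (seconds):", policy.crawl_delay(AGENT))
```

The `crawl_delay` value can feed directly into a request pacer, so the site’s stated preference sets the minimum gap between requests.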

- Integrations and Exports

Finally, consider how easily a tool integrates with your existing processes. Look for support for REST APIs, webhooks, and export formats such as CSV, JSON, or SQL, depending on your workflow.
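
For reference, here is what the three export formats look like when produced with Python’s standard library (`csv`, `json`, and `sqlite3` for SQL). The records, table name, and schema are hypothetical; REST and webhook delivery are not shown.

```python
import csv
import io
import json
import sqlite3

# Hypothetical scraped records.
records = [
    {"url": "https://example.com/p/1", "title": "Widget", "price": 19.99},
    {"url": "https://example.com/p/2", "title": "Gadget", "price": 24.50},
]


def to_csv(rows):
    """Serialize a list of dicts to CSV text, using the first row's keys as the header."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def to_json(rows):
    """Serialize records to a JSON array."""
    return json.dumps(rows, indent=2)


def to_sqlite(rows, conn):
    """Upsert records into a `products` table (hypothetical schema)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS products (url TEXT PRIMARY KEY, title TEXT, price REAL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO products (url, title, price) VALUES (:url, :title, :price)",
        rows,
    )
    conn.commit()


print(to_csv(records))
print(to_json(records))
conn = sqlite3.connect(":memory:")
to_sqlite(records, conn)
print(conn.execute("SELECT COUNT(*) FROM products").fetchone()[0], "rows in SQLite")
```

Keying the table on `url` with `INSERT OR REPLACE` means re-running an export after a fresh crawl updates existing rows instead of duplicating them.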

What Are The Best AI Web Scraping Tools in 2025?

Here’s a list of the best AI-powered scraping tools for 2025, along with their ideal use cases and core features:

1. Xbyte.io

Best for: Scalable, custom, enterprise-grade scraping

Features:

- AI-enabled data extraction and cleanup

- Smart element recognition on dynamic pages

- Built-in CAPTCHA solving and rotating IPs

- No-code interface for building customizable enterprise workflows

- Export to CSV, JSON, or directly to cloud-based apps (like AWS, BigQuery)

- Great for producing AI-ready datasets for advanced analytics and automation at scale

Xbyte.io is a full-service AI scraping platform designed for enterprises that need precision, scalability, and compliance. Whether you’re tracking competitors, scraping real-time product data, or building custom market intelligence feeds, X-Byte Enterprise Crawling delivers clean, structured data from a secure, cloud-first architecture.

2. Scraping Intelligence

Best for: Non-coders and small- to mid-sized companies

Features:

- AI-enabled visual scraping with a point-and-click setup

- Adaptive recognition of data fields on dynamic pages

- CAPTCHA solving and rotating IPs included

- No-code interface with customizable workflows

- One-click export to CSV, JSON, or Google Sheets

- Perfect for teams building lightweight ML-ready datasets for reports or dashboards

Scraping Intelligence offers an approachable interface for non-coders who want to scrape structured web data quickly and reliably. It is a good choice for startups, researchers, and small organizations that need fast, dependable insights.

3. 3i Data Scraping

Best for: Development teams and technical professionals who require full control

Features:

- AI-assisted data extraction with custom script logic

- Smart adaptive handling of dynamic JavaScript-heavy sites

- Actor-based automation and scalable API workflows

- Built-in CAPTCHA solving and rotating proxy support

- Output to JSON, XML, or cloud storage endpoints

- Best for building complex scraping tasks tied to ML models or custom backends.

3i Data Scraping gives developers control through custom logic, APIs, and automation support. This flexibility makes it well suited to embedding scraping tasks in a broader data engineering pipeline.

4. iWeb Scraping

Best for: Large-scale scraping, thanks to its built-in proxy infrastructure

Features:

- AI-enabled pattern detection and data tagging

- Residential IPs for geo-sensitive scraping

- Browser-based scraping with dynamic rendering support

- Drag-and-drop logic to build custom workflows

- Export to CSV, JSON, or stream responses to any cloud app.

- Best suited for projects that require secure, location-specific data collection for AI analytics products

iWeb Scraping combines scraping power with strong proxy support, making it a compelling option for businesses scaling into localized pricing, availability, or SEO data projects.

5. Web Screen Scraping

Best for: Scraping structured and visual data to deliver real-time insights

Features:

- OCR-based AI scraping for images, tables, and PDFs

- Smart element mapping and auto-labeling

- Built-in CAPTCHA handling and IP shuffling

- A no-code UI with custom logic, scheduling, and output options for automation

- Export to CSV, JSON, or XML, or sync live to a cloud app

- Particularly good for transforming complex web visuals into clean, structured datasets for ML or BI platforms

Web Screen Scraping is especially capable with unstructured and semi-structured data, even when that data is embedded in images or graphical dashboards, making it a powerful option for intelligence gathering.

Each tool has its own niche, so select the one that best fits your data volume, project complexity, and internal capacity.

What Are The Future Trends in AI Data Scraping?

As data extraction technologies rapidly advance, several trends are redefining the future:

- Predictive Scraping: Tools will predict when content is likely to change and crawl at those times, avoiding large numbers of wasted requests.

- Autonomous AI Agents: Future scrapers will not be limited to fixed scripts; they will use reinforcement learning to decide what, when, and how to scrape.

- Generative AI Integration: Scrapers will use large language models (LLMs) to summarize or rewrite extracted web content in real time.

- Ethical Scraping Guidelines: Expect built-in “ethical scraping” features that follow legal, transparent, and fair data collection practices.

- No-Code/Low-Code Approach: This trend will empower non-developers, such as marketers and analysts, to deploy large, complex scrapers using drag-and-drop features.

- End-to-End Automation: The full pipeline, from scraping and cleaning to formatting and integration with third-party applications, will run largely in the background with very little manual effort.
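
The predictive-scraping idea can already be approximated with a simple heuristic rather than a trained model: hash each fetched page to detect changes, record when changes happen, and schedule the next crawl around the average interval between them. The function names, hour-based timestamps, and one-week cap below are assumptions made for this sketch.

```python
import hashlib
from statistics import mean


def content_fingerprint(html):
    """Hash page content so a recrawl can cheaply tell whether anything changed."""
    return hashlib.sha256(html.encode("utf-8")).hexdigest()


def next_crawl_time(change_times, now, min_gap=1.0, max_gap=168.0):
    """Predict the next recrawl time from past change timestamps (hours).

    Uses the mean gap between observed changes, clamped to [min_gap, max_gap],
    and never schedules earlier than now + min_gap.
    """
    if len(change_times) < 2:
        return now + min_gap  # too little history: check again soon
    times = sorted(change_times)
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    gap = min(max(mean(gaps), min_gap), max_gap)
    return max(times[-1] + gap, now + min_gap)


# A page that has changed roughly once a day (timestamps in hours).
history = [0, 24, 49, 72]
print(next_crawl_time(history, now=80))
```

A page whose fingerprint has not changed on recrawl simply keeps its history, so rarely updated pages drift toward the `max_gap` ceiling instead of being fetched on every cycle.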

Getting ahead of these trends can give your business a significant competitive advantage.

Conclusion: Build Smart, Scrape Smart

The right AI web scraping tool can take your data strategy to the next level by unlocking insights, streamlining processes, and speeding up innovation. As this guide has shown, the market offers many options, from free solutions for simpler data extraction to enterprise-grade platforms built for speed, scale, and compliance.

Xbyte.io is a standout choice for companies that need to extract data at scale with precision and reliability. It also offers custom scraping workflows, GDPR compliance, and strong customer support to make your data extraction seamless and future-proof.

Ultimately, effective data collection starts with informed, carefully planned choices. By choosing a tool that matches your technical capacity, data goals, and legal obligations, you set your data collection operation up for success, scalability, and ethical practice.