Sentiment analysis is one of the most widely used applications of natural language processing and text analytics, with many sites, publications, and courses dedicated to the subject. It works best on subjective text, in which people express their thoughts, feelings, and moods. In practice, sentiment analysis is commonly used to analyze corporate survey and feedback data, social media posts, and reviews of movies, places, and so on.

Terminologies

1. Text Corpus

A text corpus consists of multiple text documents, each of which can be as simple as a single sentence or as complex as a multi-paragraph document.

2. Sentiment Analysis

Sentiment analysis is also known as opinion analysis or opinion mining. The basic idea is to use techniques from text analytics, NLP (Natural Language Processing), machine learning, and linguistics to extract important information from unstructured text.

3. Sentiment Polarity

Sentiment polarity is a numeric score assigned to the positive and negative aspects of a text document. It depends on subjective factors such as particular words and phrases that express feelings and emotions. Neutral sentiment typically has a polarity of 0 because it expresses no particular sentiment; positive sentiment has a polarity > 0 and negative sentiment has a polarity < 0.
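The mapping from a polarity score to a label can be sketched as follows. This is only an illustration of the sign convention described above; the function name polarity_to_label is hypothetical and not part of any library.

```python
def polarity_to_label(score):
    """Map a numeric polarity score to a sentiment label by its sign."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Example scores: one positive, one neutral, one negative
for score in (1.5, 0.0, -2.0):
    print(score, polarity_to_label(score))
```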

Here, we walk step by step through building a model that performs sentiment analysis on a large movie review dataset gathered from the Internet Movie Database (IMDb).

We focus on extracting sentiment from a large corpus of movie reviews, using both an unsupervised lexicon-based model and a traditional supervised machine learning model.

The main goal is to predict the sentiment of movie reviews from IMDb.

Dataset

This dataset consists of 50,000 movie reviews that have been pre-labeled with “positive” or “negative” sentiment class labels based on the content of the reviews. Our task is to predict the sentiment of 15,000 labeled movie reviews and to use the remaining 35,000 reviews to train our supervised models.

Setting up Dependencies

We will use several Python libraries and frameworks for text analytics, natural language processing, and machine learning. Before starting the project, install pandas, NumPy, SciPy, scikit-learn, seaborn, matplotlib, and the NLP libraries NLTK and spaCy.

After installing nltk with pip or conda, run the following code from a Python or IPython terminal to download its data.

import nltk

nltk.download('all', halt_on_error=False)

To install spaCy and download its English model, run the following commands in a Unix shell or Windows command prompt (as administrator if required).

$ conda config --add channels conda-forge

$ conda install spacy

$ python -m spacy download en_core_web_sm

Text Pre-Processing and Normalization

An important step before feature engineering and modeling is cleaning, pre-processing, and normalizing the text to bring textual elements such as phrases and words into a standard format.

This standardizes the document corpus, which helps in building meaningful features and reduces the noise introduced by elements such as irrelevant symbols, special characters, and XML and HTML tags. The text_normalizer.py utility performs the following tasks.

Important Libraries

    import re
    import unicodedata

    import nltk
    import numpy as np
    import pandas as pd
    import spacy
    from bs4 import BeautifulSoup
    from nltk.tokenize.toktok import ToktokTokenizer

    # CONTRACTION_MAP is assumed to come from a local contractions.py module
    # that maps contractions to their expanded forms
    from contractions import CONTRACTION_MAP

    # load the small English model; named-entity recognition is not needed here
    nlp = spacy.load('en_core_web_sm', disable=['ner'])
    tokenizer = ToktokTokenizer()

    # keep the negations 'no' and 'not', since they carry sentiment
    stopword_list = nltk.corpus.stopwords.words('english')
    stopword_list.remove('no')
    stopword_list.remove('not')

Cleaning Text:

Unnecessary content such as HTML tags frequently appears in our text and adds little value when analyzing sentiment. We must therefore make sure it is removed before extracting features. The strip_html_tags(…) function does this.

    def strip_html_tags(text):
        # parse the markup and keep only the visible text
        soup = BeautifulSoup(text, "html.parser")
        stripped_text = soup.get_text()
        return stripped_text

Removing Accented Characters

Our dataset contains reviews in English, so we make sure that characters in any other format, especially accented characters, are converted and standardized into ASCII characters.

    def remove_accented_chars(text):
        # decompose accented characters, then drop the non-ASCII combining marks
        text = unicodedata.normalize('NFKD', text).encode('ascii', 'ignore').decode('utf-8', 'ignore')
        return text

Expanding Contractions

Contractions are shortened versions of words or phrases in English. These shortened forms are created by removing specific letters and sounds from existing words and phrases. Expanding them helps standardize the text: for instance, don’t becomes do not and I’d becomes I would.

    def expand_contractions(text, contraction_mapping=CONTRACTION_MAP):
        # one alternation pattern that matches any known contraction
        contractions_pattern = re.compile('({})'.format('|'.join(contraction_mapping.keys())),
                                          flags=re.IGNORECASE | re.DOTALL)

        def expand_match(contraction):
            match = contraction.group(0)
            first_char = match[0]
            # look up the match as written, falling back to its lowercase form
            expanded_contraction = (contraction_mapping.get(match)
                                    or contraction_mapping.get(match.lower()))
            # preserve the capitalization of the first character
            expanded_contraction = first_char + expanded_contraction[1:]
            return expanded_contraction

        expanded_text = contractions_pattern.sub(expand_match, text)
        # drop any apostrophes that remain
        expanded_text = re.sub("'", "", expanded_text)
        return expanded_text
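To see the idea in isolation, here is a minimal, self-contained sketch that uses a tiny, hypothetical mapping (SAMPLE_CONTRACTION_MAP) in place of the full CONTRACTION_MAP. The names expand_sample and SAMPLE_CONTRACTION_MAP are made up for this illustration, and a real mapping would contain many more entries.

```python
import re

# Hypothetical three-entry mapping, for illustration only
SAMPLE_CONTRACTION_MAP = {
    "don't": "do not",
    "i'd": "i would",
    "can't": "cannot",
}


def expand_sample(text, mapping=SAMPLE_CONTRACTION_MAP):
    """Replace every contraction found in `mapping` with its expansion."""
    pattern = re.compile("|".join(re.escape(key) for key in mapping),
                         flags=re.IGNORECASE)

    def replace(match):
        found = match.group(0)
        expanded = mapping[found.lower()]
        # keep the capitalization of the first character as written
        return found[0] + expanded[1:]

    return pattern.sub(replace, text)


print(expand_sample("I'd say they don't care."))
```

Note that this sketch only handles straight apostrophes (') and would need extra entries or a pre-processing step for typographic ones (’).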

Removing Special Characters

This can be accomplished with simple regular expressions. Depending on whether you want numbers in your normalized corpus, you can keep or remove digits. The remove_special_characters(…) function removes special characters for us.

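    def remove_special_characters(text):
        # keep only letters, digits, and whitespace
        # (the range must be A-Z: A-z would also keep characters such as [ \ ] ^ _ `)
        text = re.sub(r'[^a-zA-Z0-9\s]', '', text)
        return text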
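The choice between keeping and removing digits could be exposed as a parameter. The sketch below is one possible way to do that; the function name remove_special_characters_opt and the remove_digits flag are assumptions made for this example, not part of the pipeline described here.

```python
import re


def remove_special_characters_opt(text, remove_digits=False):
    """Strip everything except letters, whitespace and (optionally) digits."""
    pattern = r"[^a-zA-Z\s]" if remove_digits else r"[^a-zA-Z0-9\s]"
    return re.sub(pattern, "", text)


sample = "Well this was fun! 123#@!"
print(repr(remove_special_characters_opt(sample)))
print(repr(remove_special_characters_opt(sample, remove_digits=True)))
```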

Removing Stop Words

Words that have little or no significance, especially when building meaningful features from text, are known as stop words. Words such as a, an, and the are typical stop words. The remove_stopwords(…) function removes stop words and retains the words that carry the most significance and context in a corpus.

    def remove_stopwords(text, is_lower_case=False):
        tokens = tokenizer.tokenize(text)
        tokens = [token.strip() for token in tokens]
        if is_lower_case:
            # text is already lowercase, so compare tokens directly
            filtered_tokens = [token for token in tokens if token not in stopword_list]
        else:
            # compare case-insensitively but keep the original casing
            filtered_tokens = [token for token in tokens if token.lower() not in stopword_list]
        filtered_text = ' '.join(filtered_tokens)
        return filtered_text

Lemmatization

Lemmatization is similar to stemming in that it removes word affixes to get to the base form of a word. The difference is that the base form, the lemma, is always a lexicographically correct word, so we use only lemmatization in our normalization pipeline. The lemmatize_text(…) function handles this.

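    def lemmatize_text(text):
        text = nlp(text)
        # '-PRON-' is the placeholder lemma that spaCy 2.x assigns to pronouns;
        # keep the original word in that case
        text = ' '.join([word.lemma_ if word.lemma_ != '-PRON-' else word.text for word in text])
        return text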

Building Text-Normalizer

We now combine all of these components in the following function, normalize_corpus(…), which takes a document corpus as input and returns the same corpus with cleaned and normalized text documents.

    def normalize_corpus(corpus, html_stripping=True, contraction_expansion=True,
                         accented_char_removal=True, text_lower_case=True,
                         text_lemmatization=True, special_char_removal=True,
                         stopword_removal=True):
        normalized_corpus = []
        # normalize each document in the corpus
        for doc in corpus:
            # strip HTML
            if html_stripping:
                doc = strip_html_tags(doc)
            # remove accented characters
            if accented_char_removal:
                doc = remove_accented_chars(doc)
            # expand contractions
            if contraction_expansion:
                doc = expand_contractions(doc)
            # lowercase the text
            if text_lower_case:
                doc = doc.lower()
            # replace runs of newlines with a single space
            doc = re.sub(r'[\r\n]+', ' ', doc)
            # insert spaces between special characters to isolate them
            special_char_pattern = re.compile(r'([{.(-)!}])')
            doc = special_char_pattern.sub(" \\1 ", doc)
            # lemmatize text
            if text_lemmatization:
                doc = lemmatize_text(doc)
            # remove special characters
            if special_char_removal:
                doc = remove_special_characters(doc)
            # remove extra whitespace
            doc = re.sub(' +', ' ', doc)
            # remove stopwords
            if stopword_removal:
                doc = remove_stopwords(doc, is_lower_case=text_lower_case)
            normalized_corpus.append(doc)
        return normalized_corpus

Load Dataset and Normalize the Data

After importing the required libraries, load the IMDb dataset and split it into training and test sets. The normalize_corpus(…) function is then used to normalize the text.
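A minimal sketch of the split, assuming the reviews and their labels have already been loaded into two parallel Python lists. The function name split_reviews and the toy data are hypothetical; with the real dataset, n_train would be 35,000, leaving 15,000 reviews for testing.

```python
def split_reviews(reviews, sentiments, n_train):
    """Split parallel lists into a training part and a test part."""
    if len(reviews) != len(sentiments):
        raise ValueError("reviews and sentiments must have the same length")
    return (reviews[:n_train], sentiments[:n_train],
            reviews[n_train:], sentiments[n_train:])


# Toy stand-in for the dataset: 10 reviews, split 7 / 3 (the same 70/30 ratio)
toy_reviews = [f"review {i}" for i in range(10)]
toy_sentiments = ["positive" if i % 2 == 0 else "negative" for i in range(10)]

train_reviews, train_sentiments, test_reviews, test_sentiments = split_reviews(
    toy_reviews, toy_sentiments, n_train=7)
print(len(train_reviews), len(test_reviews))
```

Each part would then be passed through the normalization function before modeling.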

1st Approach: Sentiment Analysis with Unsupervised Lexicon-Based Models

Unsupervised sentiment analysis models depend on well-curated knowledge bases, taxonomies, lexicons, and datasets that contain detailed information about words and phrases, such as their emotion, mood, subjectivity, and objectivity. A lexicon model uses a lexicon, also known as a dictionary or vocabulary of words, that is built specifically for sentiment classification.

These lexicons often include a set of words related to positive and negative emotion, polarity (the magnitude of a negative or positive score), mood, parts of speech (POS) tags, subjectivity classifiers (strong, weak, neutral), and modality, among other things. Popular lexicons used for sentiment analysis include the AFINN lexicon, the SentiWordNet lexicon, and the VADER lexicon.
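The core mechanism of a lexicon-based model can be sketched in a few lines: look up each word's score and add the scores up. The lexicon below (TOY_LEXICON) is a made-up handful of words with invented scores, not an excerpt from AFINN, SentiWordNet, or VADER, and the decision rule (a total above 0 means positive) is an arbitrary choice for this illustration.

```python
# Hypothetical mini-lexicon: word -> polarity score (invented values)
TOY_LEXICON = {
    "good": 3, "great": 3, "love": 3,
    "bad": -3, "boring": -2, "terrible": -3,
}


def lexicon_score(text, lexicon=TOY_LEXICON):
    """Sum the polarity scores of all words found in the lexicon."""
    tokens = (token.strip(".,!?") for token in text.lower().split())
    return sum(lexicon.get(token, 0) for token in tokens)


def predict_sentiment(text):
    """Label a text 'positive' if its total score is above 0, else 'negative'."""
    return "positive" if lexicon_score(text) > 0 else "negative"


for review in ("A great movie, I love it!", "Boring and terrible."):
    print(review, "->", lexicon_score(review), predict_sentiment(review))
```

No training data is involved, which is why this family of models is called unsupervised.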

Model Training, Prediction, and Performance Assessment

1. Sentiment Analysis with AFINN

The AFINN lexicon is one of the simplest and most widely used lexicons for sentiment analysis. It contains over 3,300 words, each with an associated polarity score. A Python wrapper library for this lexicon, afinn, is also available.

2. Sentiment Analysis with SentiWordNet

SentiWordNet is a sentiment lexicon derived from the WordNet database, in which every term is associated with numeric scores indicating positive and negative sentiment.

3. Sentiment Analysis with VADER

VADER (Valence Aware Dictionary and sEntiment Reasoner) is a lexicon-based model for sentiment analysis that is sensitive to both the polarity (positive/negative) and the intensity (strength) of emotion.

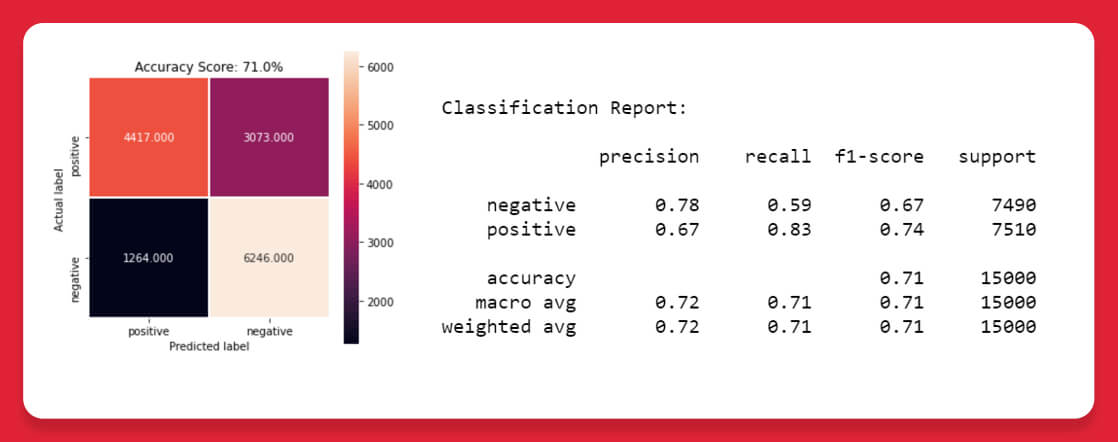

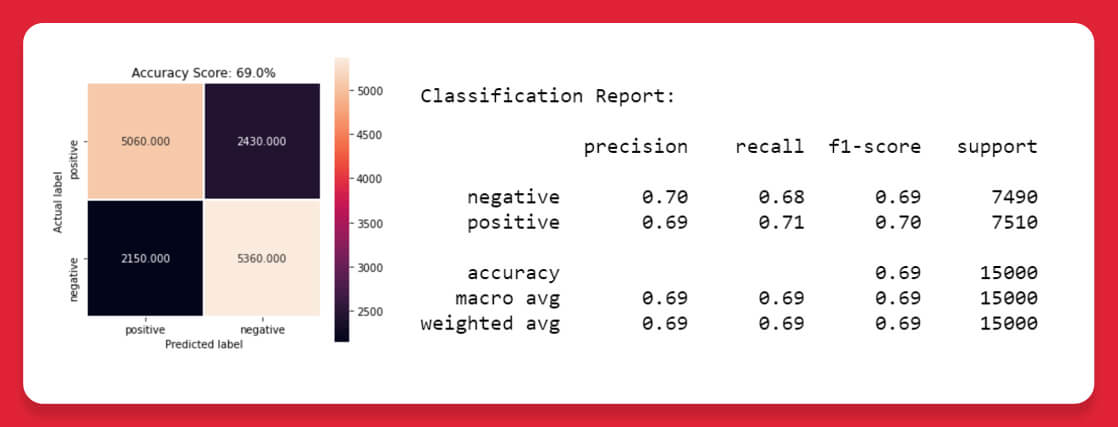

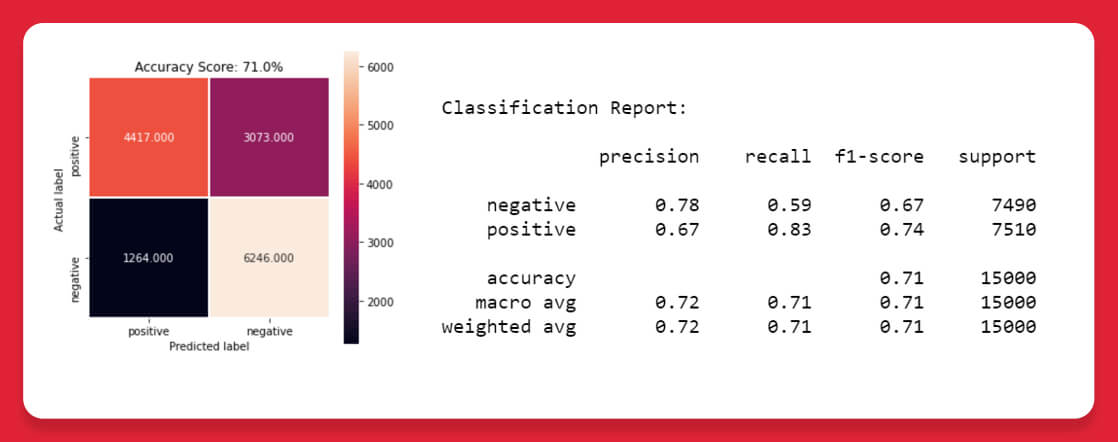

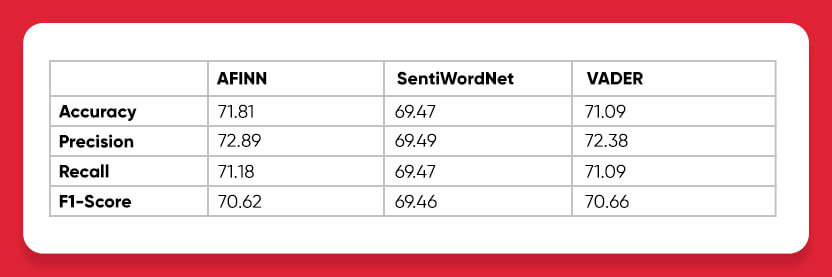

Which Unsupervised Model Works Best?

The AFINN lexicon model achieves the best accuracy, 71.18%, among the three lexicon models. The other two models perform close to AFINN on this data.
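Accuracy here is the share of reviews whose predicted label matches the true label. As a rough sketch of how accuracy and the related precision, recall, and F1 metrics can be computed, the function below works on two lists of labels. The name binary_metrics and the six toy labels are invented for this example and are unrelated to the reported results.

```python
def binary_metrics(y_true, y_pred, positive="positive"):
    """Compute accuracy, precision, recall and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Toy labels, for illustration only
y_true = ["positive", "positive", "negative", "negative", "positive", "negative"]
y_pred = ["positive", "negative", "negative", "positive", "positive", "negative"]
print(binary_metrics(y_true, y_pred))
```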

2nd Approach: Sentiment Classification with Supervised Learning

Another option is to use supervised machine learning to build a model that learns from the text and predicts the sentiment of text-based reviews. More specifically, we use classification models to solve this problem.

Feature Engineering

Before a model can be trained, the text must be represented as numeric vectors. The following are two of the most popular representations:

1. BoW: Bag of words

The Bag of Words (BoW) model is the most basic type of numerical text representation. A document is represented as a bag-of-words vector (a sequence of numbers), in which each entry counts how often a vocabulary word occurs in that document, ignoring word order.
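A minimal sketch of the idea using only the standard library. The helper names build_vocabulary and bow_vector, the whitespace tokenization, and the three toy documents are assumptions made for this illustration.

```python
from collections import Counter


def build_vocabulary(docs):
    """Collect the sorted set of distinct words across all documents."""
    return sorted({token for doc in docs for token in doc.lower().split()})


def bow_vector(doc, vocabulary):
    """Count how often each vocabulary word occurs in the document."""
    counts = Counter(doc.lower().split())
    return [counts[term] for term in vocabulary]


docs = ["good movie", "bad movie", "good good plot"]
vocabulary = build_vocabulary(docs)
vectors = [bow_vector(doc, vocabulary) for doc in docs]

print(vocabulary)
for doc, vector in zip(docs, vectors):
    print(doc, "->", vector)
```

Every document maps to a vector of the same length (the vocabulary size), which is what lets a classifier consume documents of different lengths.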

2. TF-IDF: Term Frequency-Inverse Document Frequency

The term frequency-inverse document frequency statistic is a numerical measure of how important a word is to a document in a collection or corpus.
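One common textbook variant multiplies a word's relative frequency in the document by the logarithm of the inverse fraction of documents that contain it. The sketch below implements that variant with the standard library; the function name tf_idf and the toy documents are assumptions, and libraries such as scikit-learn use smoothed and normalized variants, so their numbers will differ.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return one {term: weight} dict per document.

    weight = (count of term in doc / doc length) * ln(N / document frequency)
    """
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # number of documents each term appears in
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))

    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


docs = ["good movie", "bad movie", "good good plot"]
for doc, doc_weights in zip(docs, tf_idf(docs)):
    print(doc, "->", {term: round(w, 3) for term, w in doc_weights.items()})
```

In the third document, "plot" receives a higher weight than "good" even though "good" occurs twice, because "plot" appears in no other document. A word that occurs in every document gets a weight of 0.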

Model Training, Prediction, and Performance Assessment

1. Logistic Regression

Logistic regression estimates the probability of a binary event occurring and is used to solve classification problems.

Logistic Regression with BoW features

Logistic Regression using TF-IDF features

2. Stochastic Gradient Descent (SGD)

Stochastic Gradient Descent (SGD) is a simple but efficient optimization approach for finding the parameter values of a function that minimize an objective (cost) function. In other words, it is used to train discriminative linear classifiers such as SVMs and logistic regression.

SVM using BoW features

SVM using TF-IDF features
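To make the two ideas above concrete, here is a small, self-contained sketch of a logistic regression classifier trained with stochastic gradient descent on bag-of-words count vectors. It is a toy illustration under stated assumptions, not the setup used to produce the results in this article: the four feature vectors, the learning rate, the number of epochs, and the function names are all made up for the example.

```python
import math
import random


def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_logreg_sgd(X, y, lr=0.5, epochs=200, seed=0):
    """Fit weights and bias by updating on one example at a time."""
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    b = 0.0
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, X[i])) + b)
            error = p - y[i]  # gradient of the log loss w.r.t. the linear score
            w = [wj - lr * error * xj for wj, xj in zip(w, X[i])]
            b -= lr * error
    return w, b


def predict(x, w, b):
    """Return 1 (positive) if the predicted probability is at least 0.5."""
    return 1 if sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= 0.5 else 0


# Toy BoW vectors over the vocabulary ['bad', 'good', 'movie', 'plot']
X = [[0, 1, 1, 0], [1, 0, 1, 0], [0, 2, 0, 1], [1, 0, 0, 1]]
y = [1, 0, 1, 0]  # 1 = positive, 0 = negative

w, b = train_logreg_sgd(X, y)
print([predict(x, w, b) for x in X])
```

Swapping the log loss for a hinge loss in the same update loop would give a linear SVM instead, which is why both models can be trained with SGD.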

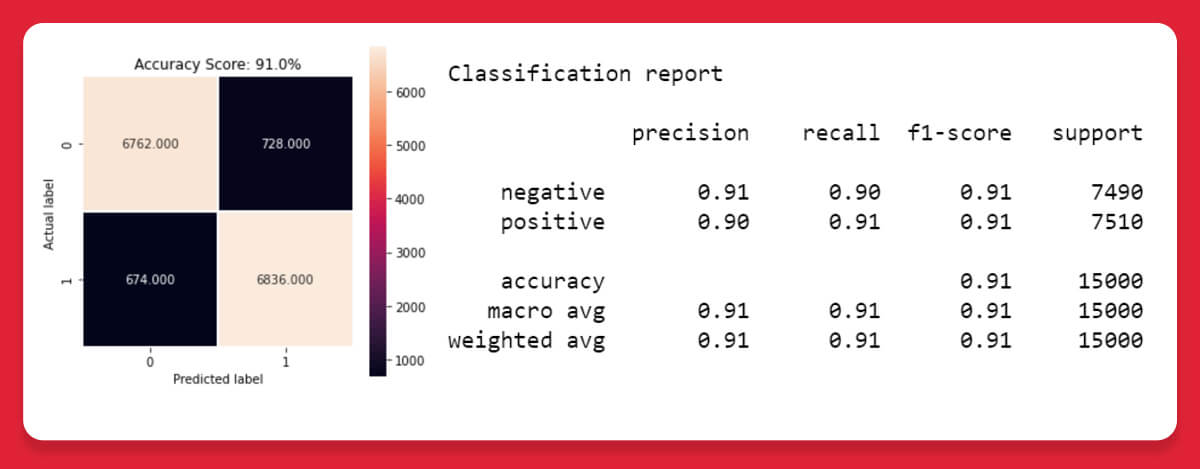

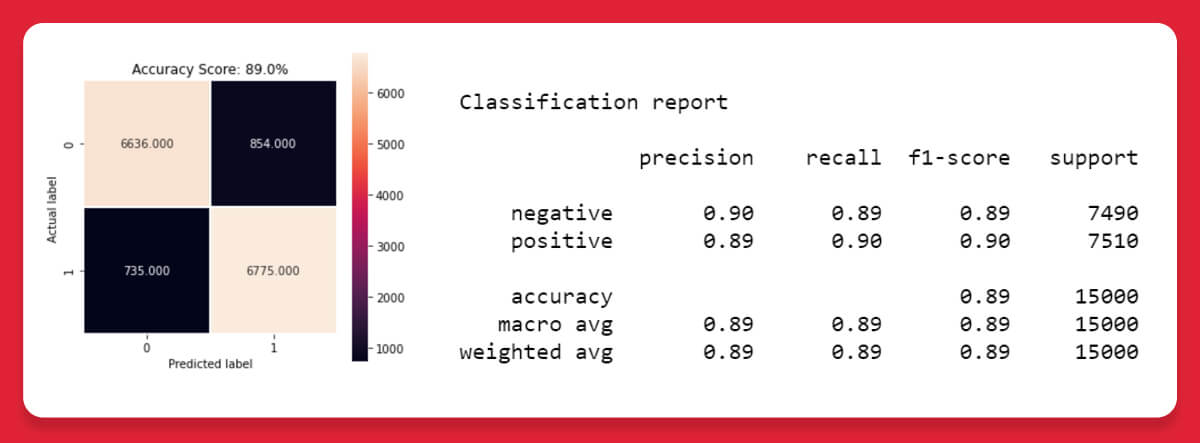

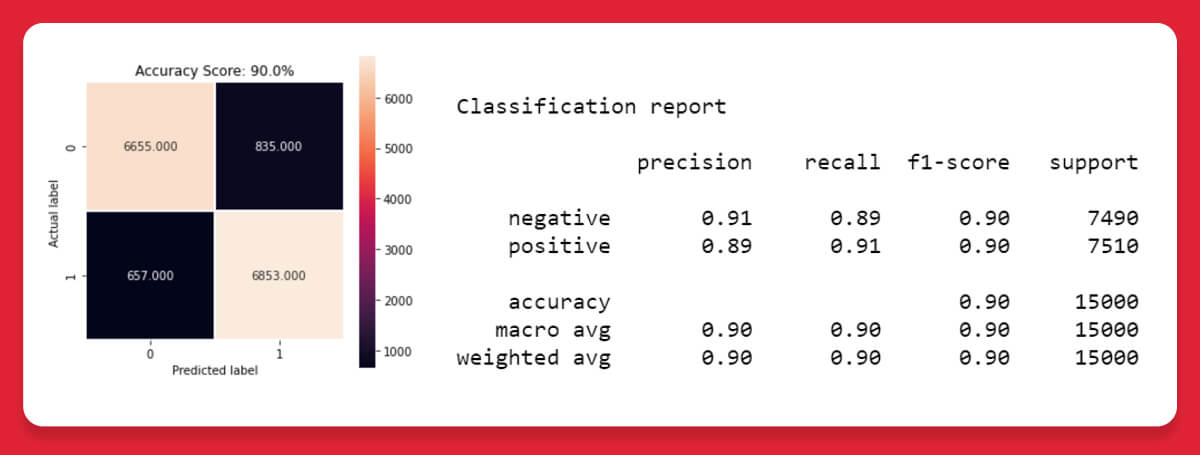

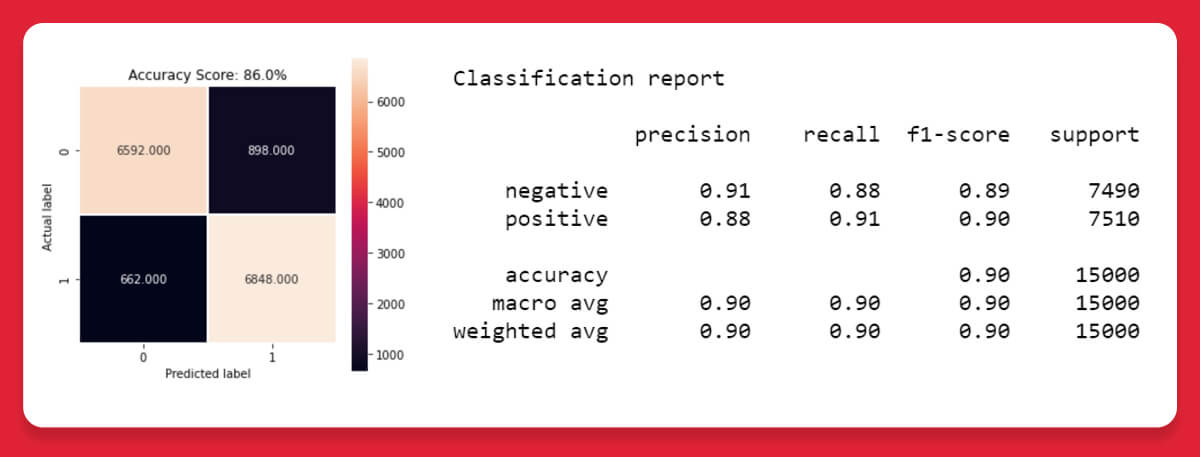

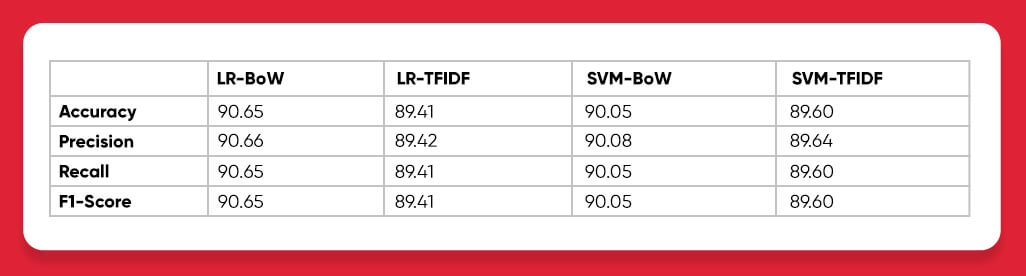

Which Model Performs Best?

We found that the Logistic Regression model trained on Bag of Words features performs best, with an accuracy of 90.65%. As the results show, the other models perform very similarly.

Conclusion

With unsupervised lexicon-based models, the most effective lexicon model, AFINN, reaches an accuracy of about 71%, whereas traditional supervised ML models reach about 89-90%. A logistic regression model trained on bag-of-words features outperformed all other models, with an accuracy of 90.65% and an F1 score of about 0.9. Comparing the top models from both approaches, we can conclude that traditional supervised models outperform lexicon models.

For any queries, contact X-Byte Enterprise Crawling today!