For some, the stock market represents a tremendous risk because they lack the information needed to make good selections. People spend a lot of time picking which café to visit, but not nearly as much time deciding which stock to invest in. The reason is that individuals have limited time, and this is where AI can help. Automatic summarization and web scraping can help us obtain the knowledge we need to make better decisions.

Module References

1. Web Scraping Modules

For web scrapers, the requests module is a blessing. It enables developers to retrieve the target webpage's HTML code.

Unless you're a web developer, BeautifulSoup will come in handy because it breaks down a complex HTML page into a readable, scrapable soup object.

2. Standard Modules

Pandas is a well-known tool in a data developer's toolbox for handling large amounts of data and extracting information through correlation, merging, filtering, and broader data analysis.

NumPy makes it easier to do mathematical operations on the data. The heart of this module is matrix and array computation. Pandas is also built on it.

Readers, of course, like to see visuals, and charts communicate a good deal better than text on a screen. Matplotlib takes care of that.

3. Sentiment Analyzer Module

A sentiment analyzer works by analyzing text data and inferring sentiment from it. When it comes to Natural Language Processing, Hugging Face Transformers and NLTK are among the leading options.

During the first phase of the project, you can employ a lightweight sentiment analyzer.

Transformers Pipeline Sentiment

The Transformers library includes a sentiment analyzer.

4. Article Summarization

It's a simple abstractive summarization Python module that helps you summarize a text.

Transformers (Financial-Summarization-Pegasus)

Transformers is a deep learning toolkit primarily for NLP projects. The Pegasus financial summarization model will be used in this project.

1. Install and Import Dependencies

Install pip… Essentially, we're just running a command in the background to download the latest appropriate packages to our system so that we can access them in our code.

For convenience, pip installs all of the packages required for this project.

2. Summarization Modules

The summarization models reduce the provided text to a coherent and succinct summary.

Example: financial-summarization-pegasus (Hugging Face): it is pre-trained on financial language in order to produce good summaries of financial text.

Input:

In the largest financial buyout this year, National Commercial Bank (NCB), Saudi Arabia’s top lender by assets, agreed to buy rival Samba Financial Group for $15 billion. According to a statement issued on Sunday, NCB will pay 28.45 riyals (US$7.58) each Samba share, valuing the company at 55.7 billion riyals. NCB will issue 0.739 new shares for every Samba share, which is at the lower end of the 0.736–0.787 ratio agreed upon by the banks when they signed an initial framework deal in June. The offer represents a 3.5 percent premium over Samba’s closing price of 27.50 riyals on Oct. 8 and a 24 percent premium over the level at which the shares traded before the talks were made public. The merger talks were initially reported by Bloomberg News. The new bank will have total assets of more than 220 billion dollars, making it the third-largest lender in the Gulf area. The entity’s market capitalization of 46 billion dollars is almost identical to Qatar National Bank’s.

Output:

The NCB will pay 28.45 riyals per Samba share. The deal will create the third-largest lender in the Gulf area.

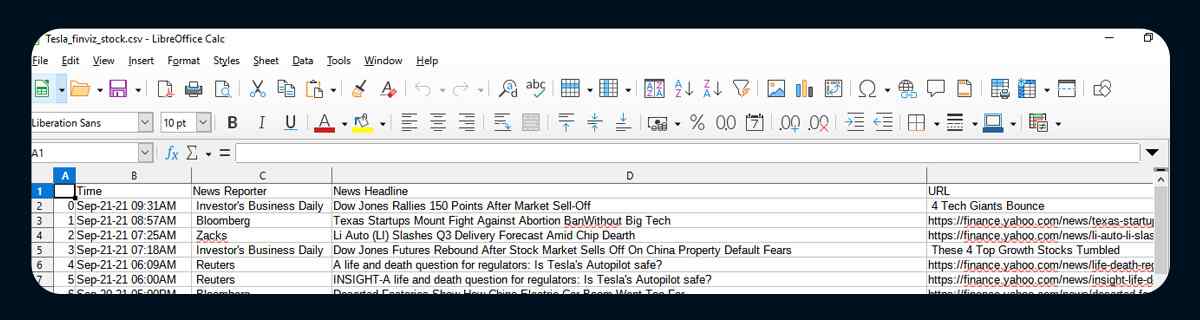

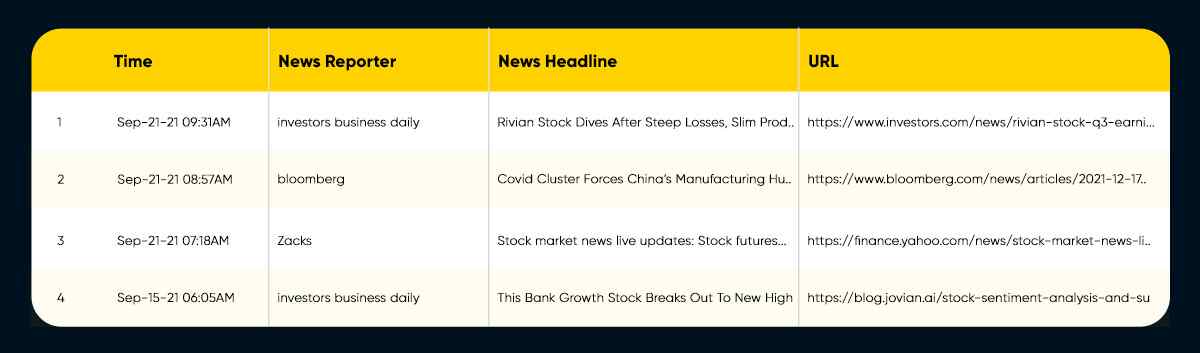

3. A News and Sentiment Pipeline: Finviz website

Finviz is the website used in this pipeline. It's a web-based application that lists securities along with their most recent news stories in chronological order. The goal of this pipeline is to extract the article URLs, along with their headlines and dates, and run sentiment analysis on the headlines.

User Defined Functions used in Pipeline 1:

1. Function: finviz_parser_data(ticker):

Using the requests library, this function collects data from the Finviz website. The response should have a status code of 200.

The HTML response is parsed with the BeautifulSoup class and returned as soup. Note that soup is a bs4.BeautifulSoup object.

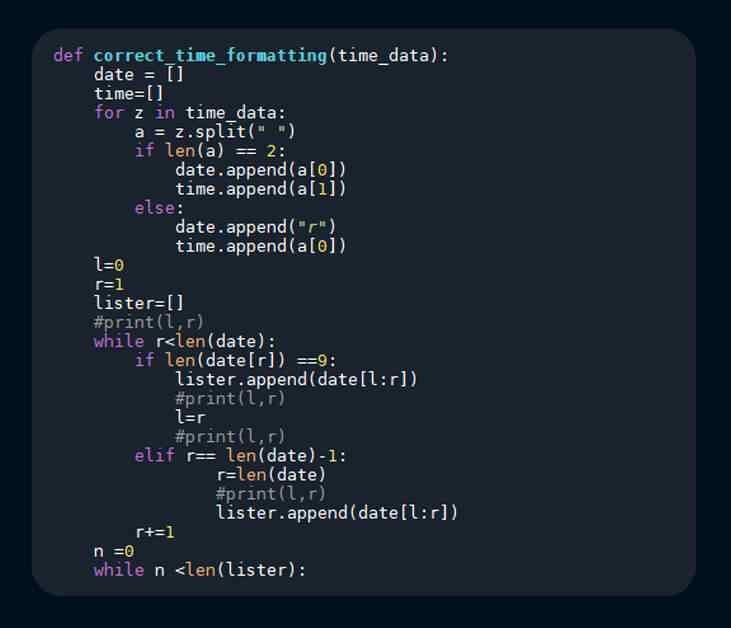
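The fetch-and-parse step described above can be sketched roughly as follows. This is a minimal illustration, not the author's exact code: the URL pattern, the User-Agent header, the timeout, and the `parse_html` helper are assumptions, and the sample HTML is made up so that the parsing part can be run without touching the network.

```python
import requests
from bs4 import BeautifulSoup

# Assumed URL pattern for a Finviz quote page; adjust if the site changes.
FINVIZ_URL = "https://finviz.com/quote.ashx?t={ticker}"


def finviz_parser_data(ticker):
    """Download the quote page for a ticker and return it as a soup object."""
    response = requests.get(
        FINVIZ_URL.format(ticker=ticker),
        headers={"User-Agent": "Mozilla/5.0"},  # assumed: some sites reject the default agent
        timeout=10,
    )
    response.raise_for_status()  # raises on 4xx/5xx instead of parsing an error page
    return BeautifulSoup(response.text, "html.parser")


def parse_html(html):
    """Hypothetical helper: parse an HTML string into a bs4.BeautifulSoup object."""
    return BeautifulSoup(html, "html.parser")


# Offline demonstration on a made-up snippet.
sample_soup = parse_html(
    "<html><body><a href='https://example.com/a'>Sample headline</a></body></html>"
)
sample_link = sample_soup.find("a")
```

Calling `finviz_parser_data("MSFT")` would perform the real request; the offline sample only shows what working with the returned soup looks like.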

2. Function: correct_time_formatting(time_data)

This function converts the Finviz website's inconsistent date and time format to a standardized format.

| Row | Before execution | After execution |
| --- | --- | --- |
| 0 | Sep-20-21 07:53AM | Sep-20-21 07:53AM |
| 1 | 06:48AM | Sep-20-21 06:48AM |
| 2 | 06:46AM | Sep-20-21 06:46AM |
| 3 | 12:01AM | Sep-20-21 12:01AM |
| 4 | Sep-19-21 06:45AM | Sep-19-21 06:45AM |
| 5 | Sep-18-21 05:50PM | Sep-18-21 05:50PM |
| 6 | 10:34AM | Sep-18-21 10:34AM |

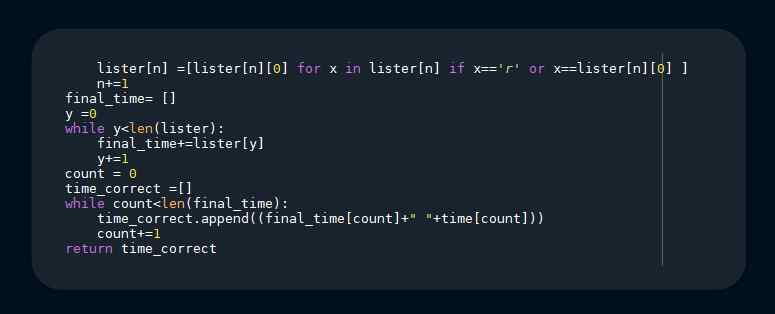

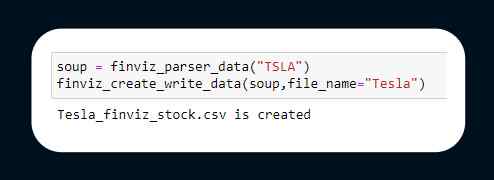
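One way to get the before/after behavior described for correct_time_formatting is to carry the most recent date forward onto rows that only contain a time. The sketch below assumes the input is a plain list of strings; the original function may well operate on a Pandas column instead.

```python
def correct_time_formatting(time_data):
    """Fill in the missing date on time-only rows using the last date seen."""
    corrected = []
    current_date = None
    for entry in time_data:
        parts = entry.split()
        if len(parts) == 2:
            # Row has both a date and a time: remember the date.
            current_date, clock = parts
        else:
            # Row has only a time: reuse the most recent date.
            clock = parts[0]
            if current_date is None:
                raise ValueError("first row must include a date")
        corrected.append(f"{current_date} {clock}")
    return corrected


raw_times = [
    "Sep-20-21 07:53AM", "06:48AM", "06:46AM", "12:01AM",
    "Sep-19-21 06:45AM", "Sep-18-21 05:50PM", "10:34AM",
]
fixed_times = correct_time_formatting(raw_times)
```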

3. Function: finviz_create_write_data(soup, file_name="MSFT")

The file name is customizable: the soup is supplied as a positional argument and file_name is passed as a keyword argument.

For example: finviz_create_write_data(soup, file_name="Amazon").

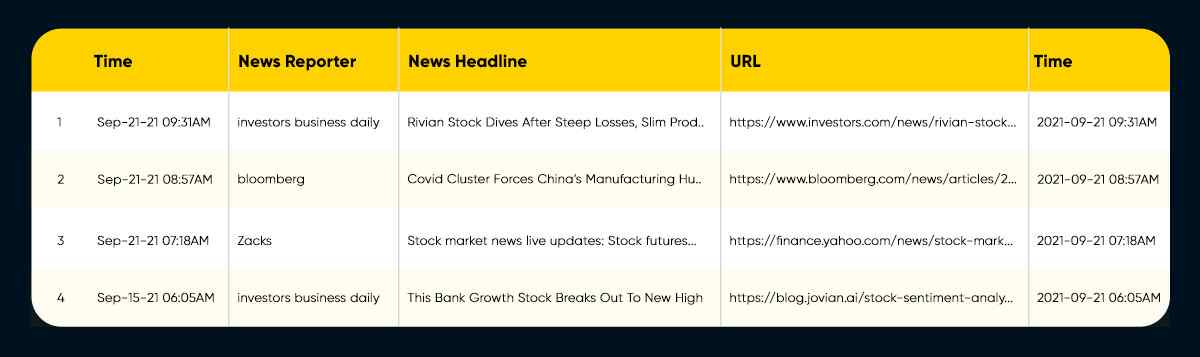

The code extracts the URL, time, news reporter, and news headline, among other things.

It uses Pandas to build a data frame, writes it to a CSV file, and then returns the data frame.

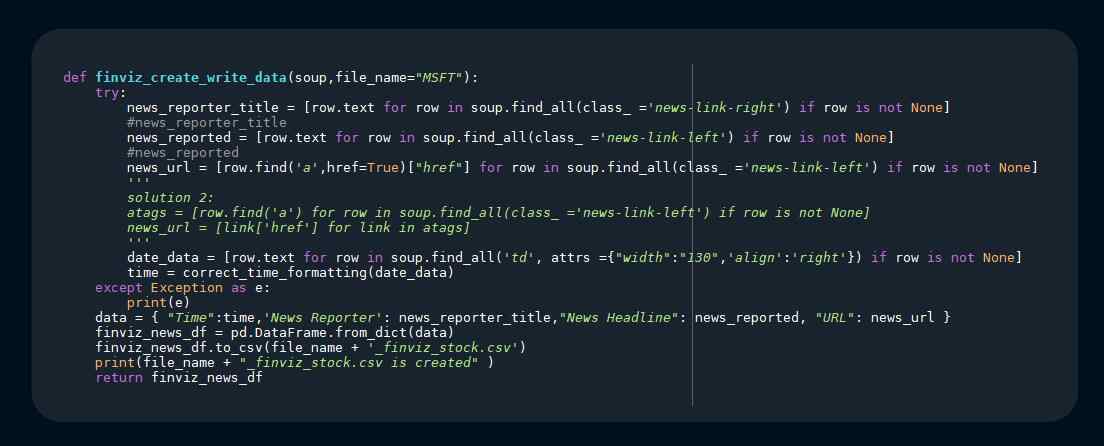

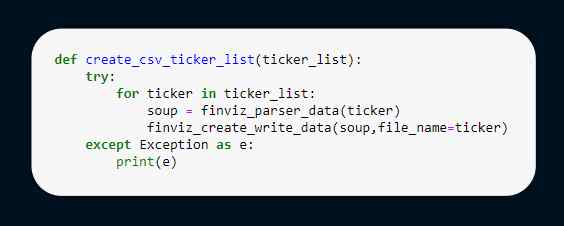

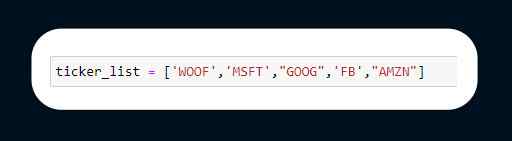

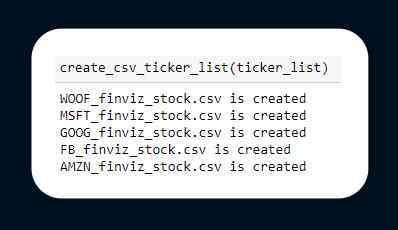
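The build-write-return pattern described for finviz_create_write_data might look something like this. It is only a sketch: the `rows_to_csv` helper, the `directory` parameter, the column names, and the sample rows are assumptions for illustration, and the step that pulls rows out of the soup is left out.

```python
import os
import tempfile

import pandas as pd

# Assumed column names, based on the fields mentioned in the text.
COLUMNS = ["Time", "News Reporter", "News Headline", "URL"]


def rows_to_csv(rows, file_name="MSFT", directory="."):
    """Build a data frame from scraped rows, write <file_name>.csv, return the frame."""
    df = pd.DataFrame(rows, columns=COLUMNS)
    df.to_csv(os.path.join(directory, f"{file_name}.csv"), index=False)
    return df


# Made-up rows standing in for what the scraper would extract.
sample_rows = [
    {"Time": "Sep-20-21 07:53AM", "News Reporter": "Example Wire",
     "News Headline": "Sample headline one", "URL": "https://example.com/1"},
    {"Time": "Sep-20-21 06:48AM", "News Reporter": "Example Daily",
     "News Headline": "Sample headline two", "URL": "https://example.com/2"},
]

with tempfile.TemporaryDirectory() as tmp:
    df = rows_to_csv(sample_rows, file_name="Amazon", directory=tmp)
    csv_written = os.path.exists(os.path.join(tmp, "Amazon.csv"))
```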

4. Function: create_csv_ticker_list(ticker_list):

This function simplifies working with several stocks at once: it takes a list of tickers and creates the CSV file for each of them.

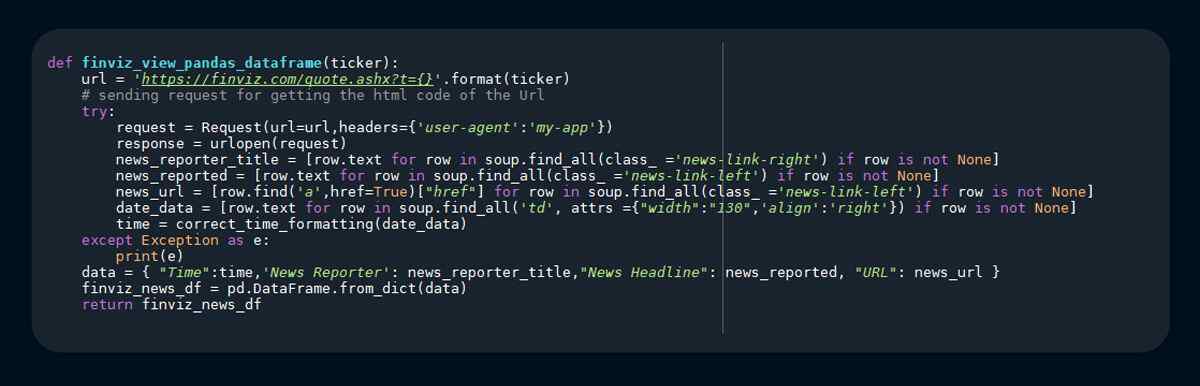

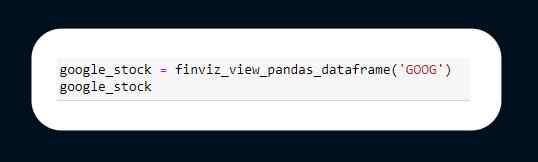

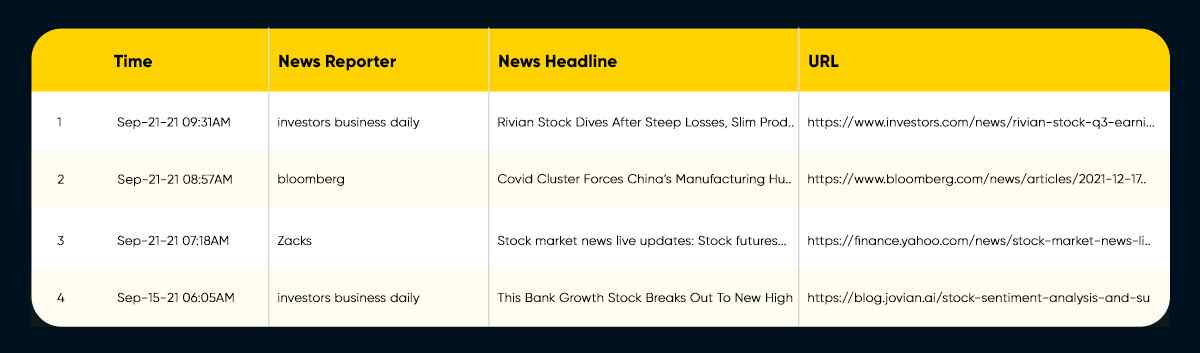

5. Function: def finviz_view_pandas_dataframe(ticker)

This function assists in the analysis process when an analyst has to do calculations on the data frame from a certain stock.

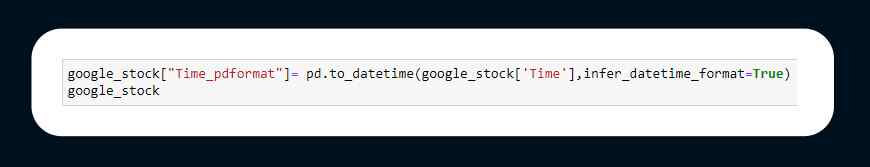

Take an example of Google stock and analysis

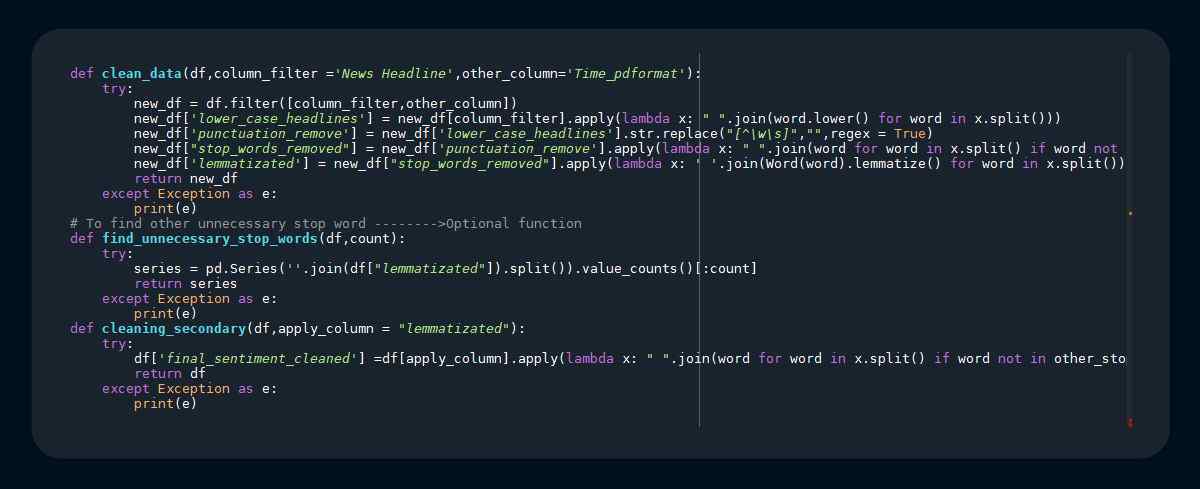

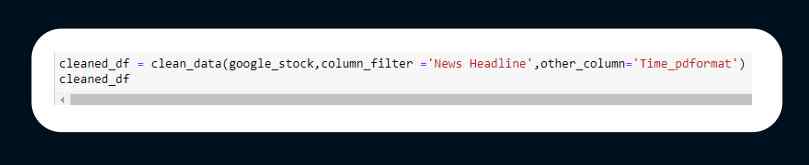

6. Function: clean_data(df, column_filter="News Headline", other_column="Time")

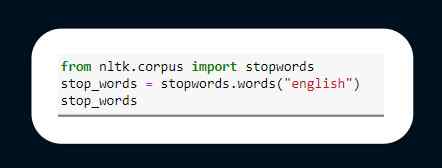

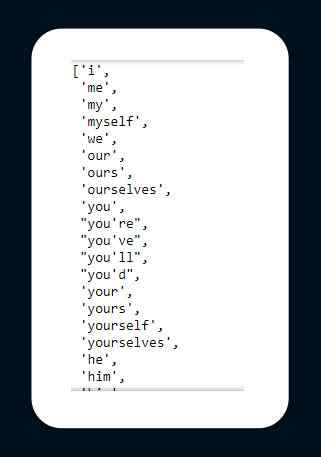

The sentiment analyzer we employ, whether an efficient one like Transformers or a less efficient one, performs much better when the text has been cleaned: lowercased, stripped of punctuation and stop words, and lemmatized.

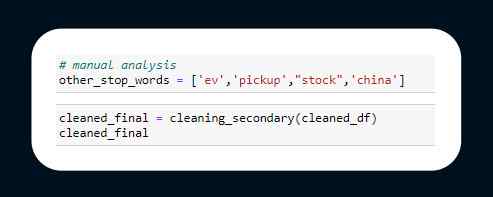
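The cleaning steps named above (lowercasing, removing punctuation, removing stop words) can be illustrated on a single headline. This sketch uses a tiny hand-written stop-word set rather than a full language list and skips lemmatization, which would need an NLP library; `clean_headline` is a hypothetical helper, not the article's clean_data function.

```python
import string

# Tiny illustrative stop-word set; a real run would import a full list.
STOP_WORDS = {"a", "an", "the", "to", "of", "in", "on", "for", "and", "is", "as"}

# Translation table that deletes every ASCII punctuation character.
PUNCTUATION_TABLE = str.maketrans("", "", string.punctuation)


def clean_headline(text, stop_words=STOP_WORDS):
    """Lowercase a headline, strip punctuation, and drop stop words."""
    text = text.lower().translate(PUNCTUATION_TABLE)
    tokens = [word for word in text.split() if word not in stop_words]
    return " ".join(tokens)


cleaned = clean_headline("Tesla Shares Rise as Deliveries Beat the Forecast!")
```

On a data frame, the same helper could be applied to the headline column with `df["News Headline"].apply(clean_headline)`.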

7. Function: (Optional) find_unnecessary_stop_words(df, count) & cleaning_secondry(df, apply_column="lemmatized"):

Any additional stop words must be identified manually, and these functions help with that.

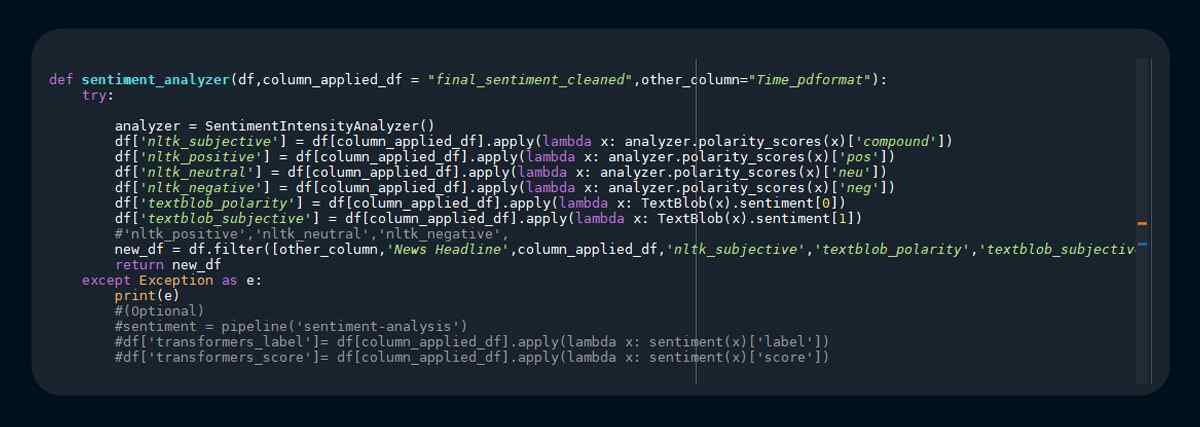

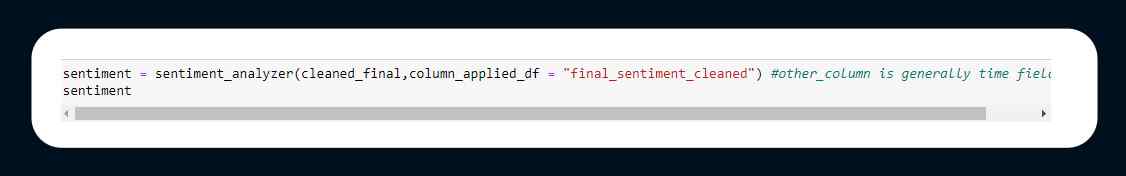
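A common way to spot extra stop words is to count the most frequent words across all headlines and then remove the ones that carry no sentiment. The sketch below works on a plain list of cleaned headlines; the helper names and sample data are hypothetical and only mirror the idea behind the two functions above.

```python
from collections import Counter


def most_common_words(headlines, count):
    """Return the `count` most frequent words across all headlines."""
    counter = Counter(word for headline in headlines for word in headline.split())
    return counter.most_common(count)


def remove_words(headlines, extra_stop_words):
    """Drop the manually chosen extra stop words from every headline."""
    extra = set(extra_stop_words)
    return [
        " ".join(word for word in headline.split() if word not in extra)
        for headline in headlines
    ]


headlines = [
    "tesla stock rise",
    "tesla stock fall",
    "tesla deliveries beat forecast",
]
top_words = most_common_words(headlines, 2)
trimmed = remove_words(headlines, ["tesla", "stock"])
```

Here the ticker name dominates the counts, which is typical: it appears in almost every headline and says nothing about sentiment, so it is a natural candidate for the manual list.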

8. Function: sentiment_analyzer(df, column_applied_df="final_sentiment_cleaned", other_column="Time_pdformat")

With df as input, the function applies sentiment analyzers such as NLTK VADER and TextBlob.
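To show the shape of this step without downloading any models or lexicons, the sketch below scores headlines with a deliberately tiny hand-made word list. This toy scorer is a stand-in, not VADER or TextBlob, and the word lists, function names, and sample headlines are all assumptions for illustration.

```python
# Toy lexicons, made up for illustration only.
POSITIVE = {"beat", "gain", "rise", "surge", "upgrade"}
NEGATIVE = {"drop", "fall", "loss", "miss", "downgrade"}


def toy_polarity(text):
    """Return a score in [-1, 1]: (positive hits - negative hits) / word count."""
    tokens = text.lower().split()
    if not tokens:
        return 0.0
    hits = sum((token in POSITIVE) - (token in NEGATIVE) for token in tokens)
    return hits / len(tokens)


def score_headlines(headlines, scorer=toy_polarity):
    """Apply a scoring function to each headline and pair it with its score."""
    return [(headline, scorer(headline)) for headline in headlines]


scored = score_headlines([
    "shares surge after earnings beat",
    "stock fall on revenue miss",
    "company holds annual meeting",
])
```

Because `scorer` is just a callable, a real analyzer can be swapped in, for example a function returning `SentimentIntensityAnalyzer().polarity_scores(text)["compound"]` from NLTK or `TextBlob(text).sentiment.polarity`.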

Steps to Reproduce

Step 1:

Using the user-defined functions finviz_parser_data and finviz_create_write_data, create a CSV file for Tesla stock.

Step 2:

Create a ticker list of the stocks you want and pass it to the function create_csv_ticker_list as an argument.

Step 3:

To analyze your selected stock individually, create a data frame for it.

Step 4:

Pandas includes a function that converts a date-time string to a timestamp. Apply pd.to_datetime to the data frame's time column.
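As a small illustration of this step, the snippet below converts strings in the Finviz-style layout shown earlier. The explicit format string and the sample values are assumptions; the column names follow the ones used in the function signatures above.

```python
import pandas as pd

# Made-up sample in the "Mon-DD-YY HH:MMAM/PM" layout.
df = pd.DataFrame({"Time": ["Sep-20-21 07:53AM", "Sep-18-21 05:50PM"]})

# An explicit format avoids ambiguity about which part is the day or the year.
df["Time_pdformat"] = pd.to_datetime(df["Time"], format="%b-%d-%y %I:%M%p")
```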

Step 5:

Import stop words in the desired language.

Step 6:

Clean the data frame by passing it through the predefined cleaning functions.

Step 7:

Run sentiment analysis on the last column of the cleaned data and assess the results.

Step 8:

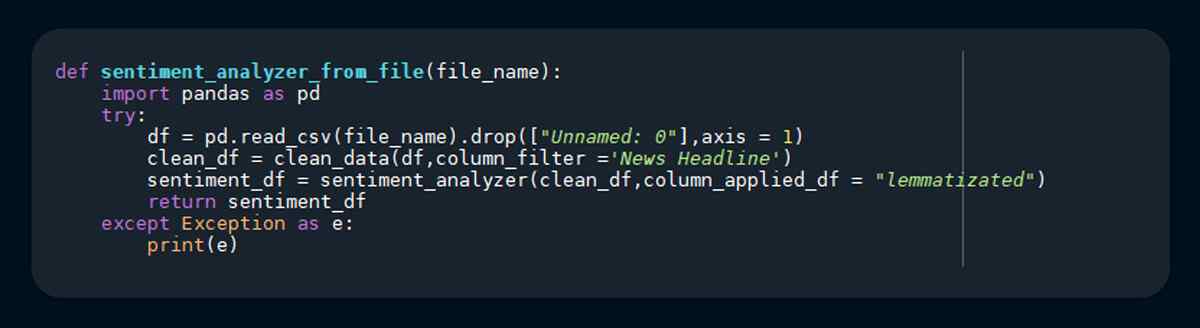

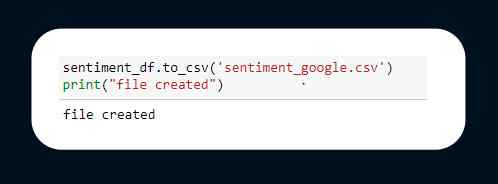

Remember that we wrote a predefined function to analyze sentiment from the CSV data.

Step 9:

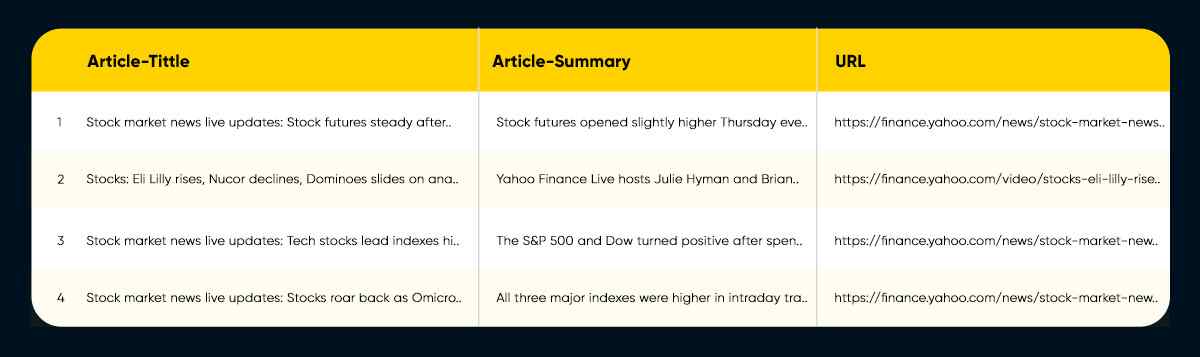

The next step is to extract summaries of the news articles from the extracted URLs. Because some articles may return a 403 error, not all of them can be scraped.

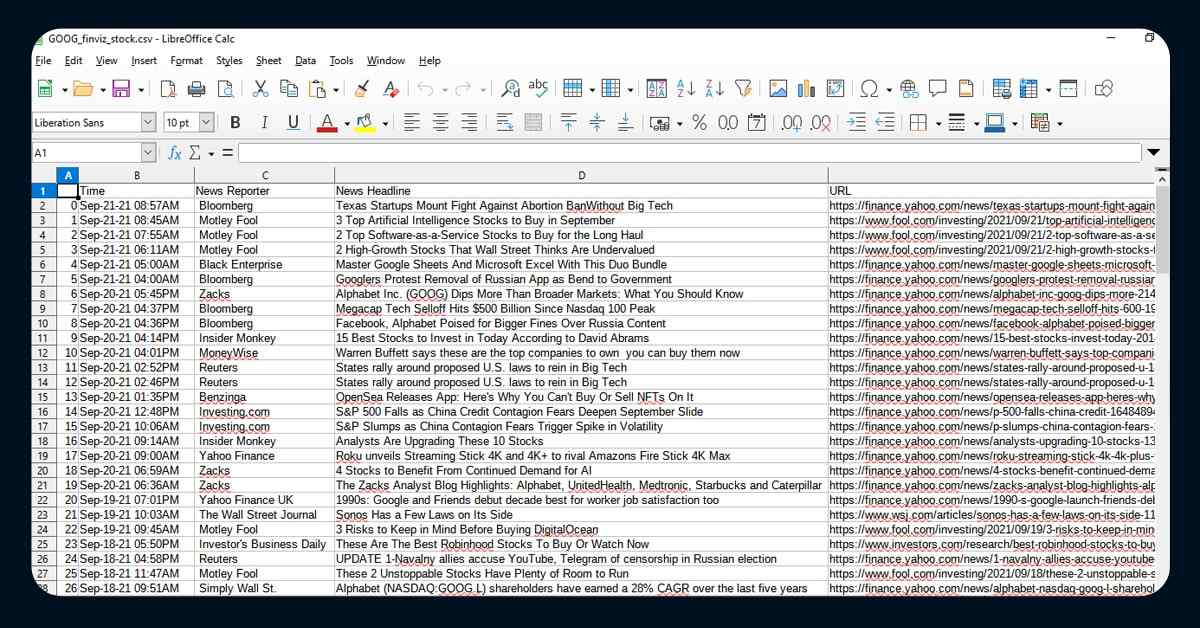

Example of one of the files:

Summarizing Pipeline 1:

- Passed a ticker value to the function to download that ticker's CSV file.

- Created a ticker list, which was then used to scrape several tickers and write their CSV files.

- Loaded the stock data for a particular ticker.

- Cleaned the text of the news headlines.

- (Optional) Using the functions provided, manually declared a list of additional stop words and removed those words.

- Ran sentiment analysis on the cleaned news headlines.

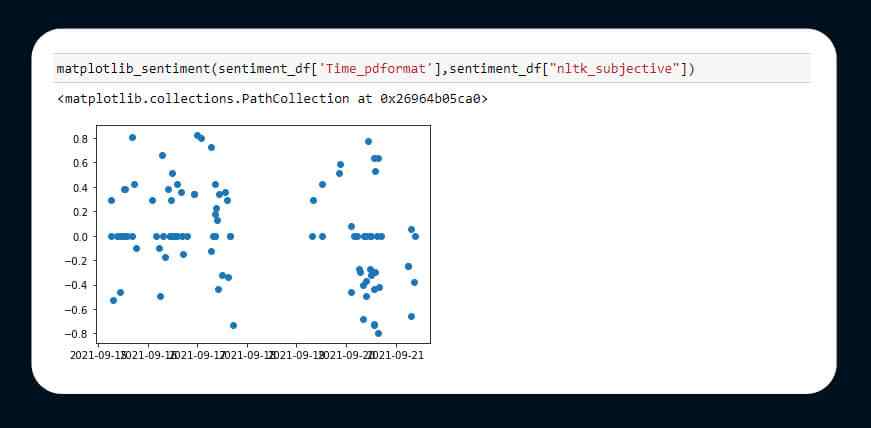

- Used a basic scatter plot to analyze the sentiment.

- Scraped the news articles using the data frame and the CSV file.

Functions Used in Pipeline 2

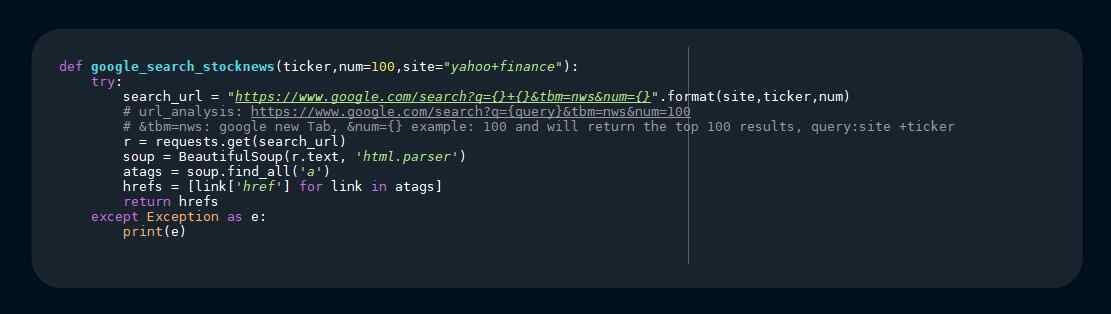

1. **def

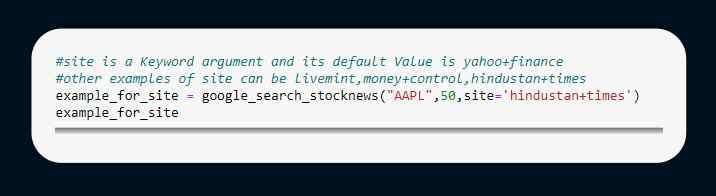

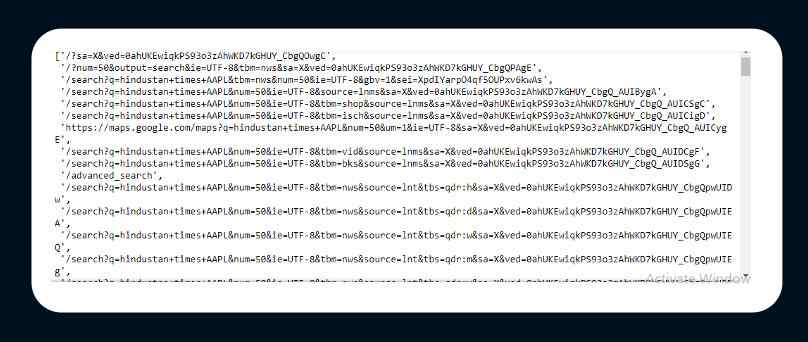

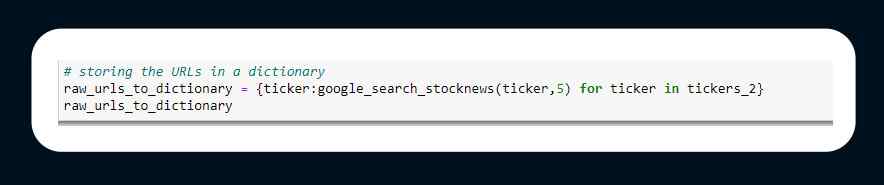

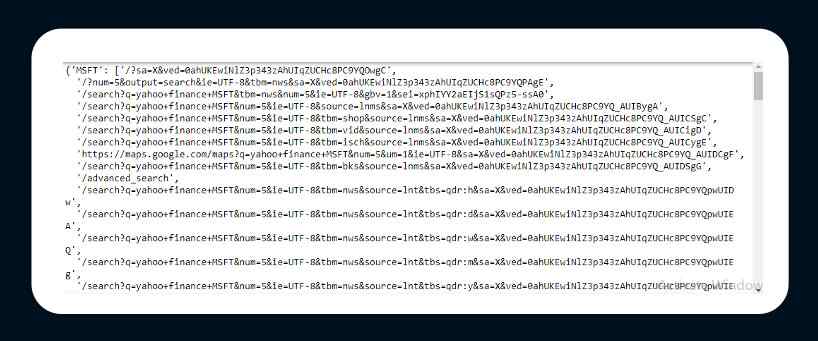

google search stocknews (ticker,num=100,site=”yahoo+finance”) **: The “ticker” is used as a positional argument, “num” is the number of pages to search, and “site” can be any trustworthy website.

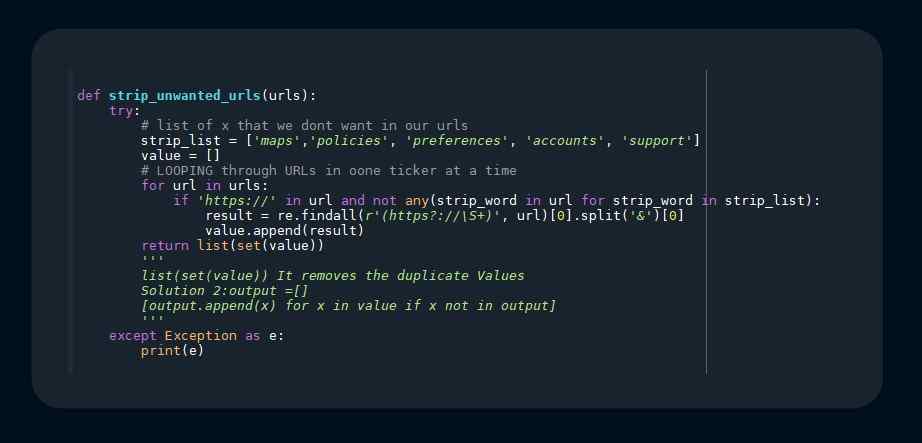

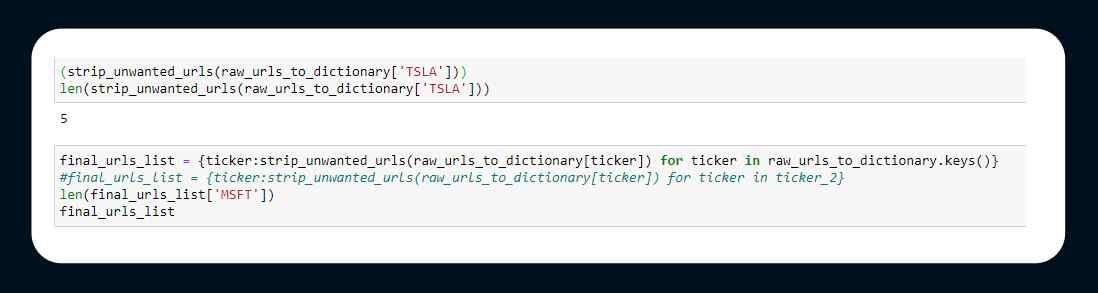

2. **def strip_unwanted_urls(urls)**:

As the name implies, it removes the unwanted URLs from the list and keeps only the URLs that match the expected format.

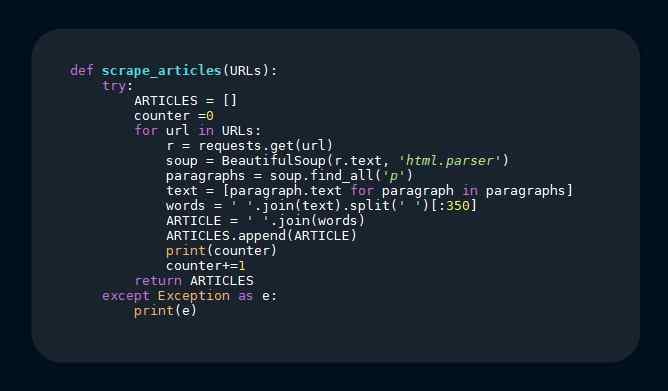
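URL filtering of this kind usually comes down to a few string checks. The sketch below is one possible version: the excluded keywords, the https-only rule, and the de-duplication are assumptions chosen for illustration, since the article does not list its exact criteria.

```python
# Assumed keywords that mark non-article links in search results.
EXCLUDED_WORDS = ("maps", "policies", "preferences", "accounts", "support")


def strip_unwanted_urls(urls, excluded_words=EXCLUDED_WORDS):
    """Keep unique https URLs that contain none of the excluded keywords."""
    kept = []
    for url in urls:
        if not url.startswith("https://"):
            continue  # drop relative links and non-https schemes
        if any(word in url for word in excluded_words):
            continue  # drop search-engine housekeeping links
        if url not in kept:
            kept.append(url)  # preserve order while removing duplicates
    return kept


final_urls = strip_unwanted_urls([
    "https://example.com/news/tesla-earnings",
    "/search?q=tesla",
    "https://example.com/support/contact",
    "https://example.com/news/tesla-earnings",
    "https://example.org/markets/tesla-outlook",
])
```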

3. **def scrape_articles(URLs):**

The function scrapes the text from each URL and truncates it to a maximum of 350 words.

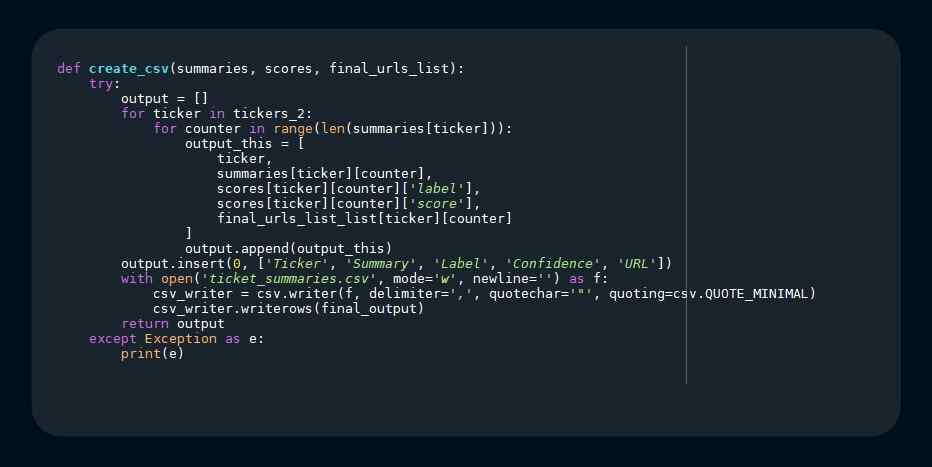
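The 350-word cap can be illustrated on its own, separately from the scraping. The helper below is hypothetical and splits on whitespace, which is an assumption about how the article counts words.

```python
def limit_words(text, max_words=350):
    """Return the first `max_words` whitespace-separated words of the text."""
    return " ".join(text.split()[:max_words])


# A made-up 400-word article body.
long_article = " ".join(f"word{i}" for i in range(400))
short_article = limit_words(long_article)
```

Capping the length keeps the input within what a summarization model can accept in one pass.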

4. **def create_csv(summaries, scores, final_urls_lists):**

As we export all of the needed information to a CSV file, this is self-explanatory.
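For completeness, here is a minimal sketch of the export using Python's built-in csv module. The extra `stream` parameter, the column headers, and the sample values are assumptions; the original function presumably writes straight to a file on disk.

```python
import csv
import io


def create_csv(summaries, scores, final_urls_lists, stream):
    """Write one row per article: summary, sentiment score, source URL."""
    writer = csv.writer(stream)
    writer.writerow(["Summary", "Sentiment Score", "URL"])  # assumed headers
    for row in zip(summaries, scores, final_urls_lists):
        writer.writerow(row)


# Write to an in-memory buffer; a file opened with newline="" works the same way.
buffer = io.StringIO()
create_csv(
    ["Shares rose after earnings.", "Deliveries missed estimates."],
    [0.4, -0.2],
    ["https://example.com/1", "https://example.com/2"],
    buffer,
)
lines = buffer.getvalue().splitlines()
```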

Reproducing Steps:

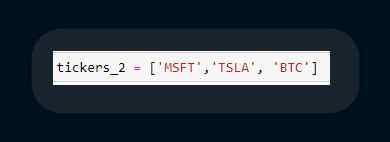

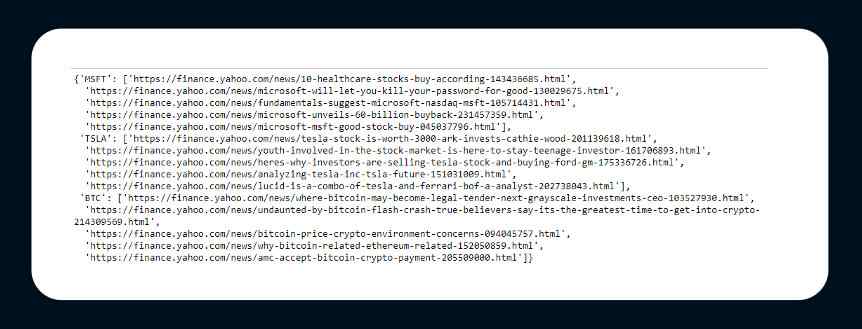

Step 1: Create a ticker list and pass it to function 1:

Step 2:

Step 3: To build the final URLs list, remove any unneeded URLs:

Summarizing Pipeline 2:

- Scraped the URLs for the corresponding ticker and news agency.

- Removed any unwanted URLs from the URL list.

- Scraped the news articles from the remaining URLs.

- Summarized the scraped articles using the Pegasus model.

- Created a CSV file with all of the required fields.
