Using Python to Extract and Process Data from the Web

In natural language processing (NLP), cleaning data is very important, all the more so when the data comes from the web. In this post we walk through a real example of web scraping and data pre-processing for a Stoic philosophy text generator.

The data we will use is the Epistulae Morales ad Lucilium (Moral Letters to Lucilius), written by the Roman senator and Stoic philosopher Seneca during the late Stoa.

Epistulae

The letters are sourced from Wikisource. The index page lists all 124 letters, and each letter is hosted on its own page.

First, we must scrape the HTML from the index page. For that, we use Python's requests and BeautifulSoup libraries.

    import requests
    from bs4 import BeautifulSoup

    # page containing links to all of Seneca's letters
    src = "https://en.wikisource.org/wiki/Moral_letters_to_Lucilius"  # web address
    html = requests.get(src).text  # pull html as text
    soup = BeautifulSoup(html, "html.parser")  # parse into BeautifulSoup object

This gives us a BeautifulSoup object built from the raw HTML we stored in html. Let's take a look at it.

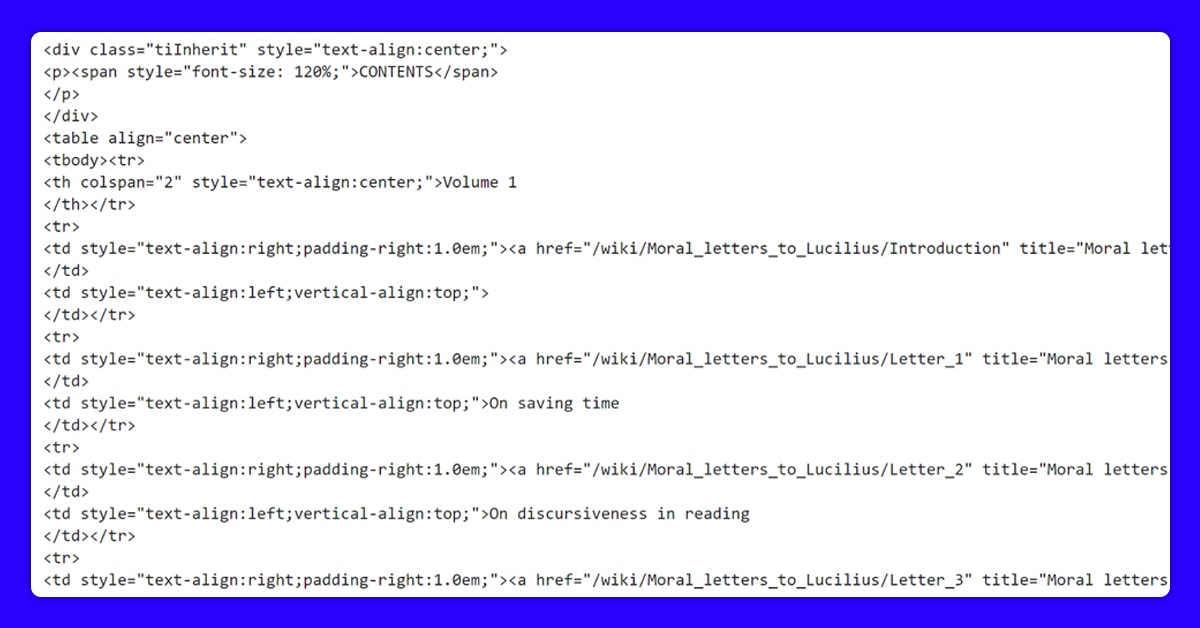

Next, we need the local path to each letter. BeautifulSoup lets us collect every <a> element with soup.find_all('a'). However, this returns many unrelated links, so we need to filter for those that point to the letters.

We do this with a regular expression, which is easy to write. The pattern matches any text that starts with Letter, followed by one or more whitespace characters, and ends with one to three digits (\d{1,3}). That gives us re.compile(r"^Letter\s+\d{1,3}$").

Applying this regex to the list of <a> elements returned by BeautifulSoup gives the following.
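To make the filtering step concrete without hitting the network, here is a minimal sketch that runs the same regex over a small inline HTML snippet. It uses the standard library's html.parser in place of BeautifulSoup so that it runs anywhere; the SAMPLE_HTML snippet, its href values, and the LinkCollector class are assumptions made up for illustration, not the real Wikisource markup.

```python
import re
from html.parser import HTMLParser

# Hypothetical snippet standing in for the index page (hrefs are illustrative).
SAMPLE_HTML = """
<ul>
  <li><a href="/wiki/Moral_letters_to_Lucilius/Letter_1">Letter 1</a></li>
  <li><a href="/wiki/Moral_letters_to_Lucilius/Letter_12">Letter 12</a></li>
  <li><a href="/wiki/Main_Page">Main Page</a></li>
  <li><a href="/wiki/Letter_writing">Letters in general</a></li>
</ul>
"""


class LinkCollector(HTMLParser):
    """Collects (link text, href) pairs for every <a> element with an href."""

    def __init__(self):
        super().__init__()
        self.links = []    # finished (text, href) pairs
        self._href = None  # href of the <a> currently open, if any
        self._text = []    # text fragments seen inside the open <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text), self._href))
            self._href = None


# Same pattern as in the article: 'Letter', whitespace, then 1-3 digits.
letters_regex = re.compile(r"^Letter\s+\d{1,3}$")

collector = LinkCollector()
collector.feed(SAMPLE_HTML)

# Keep only the links whose visible text looks like 'Letter 12'.
letter_links = [(text, href) for text, href in collector.links
                if letters_regex.match(text)]
print(letter_links)
```

Only the two links whose text is exactly "Letter" plus a number survive; "Letters in general" is rejected because the pattern requires whitespace immediately after "Letter".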

Unum Epistula Legimus

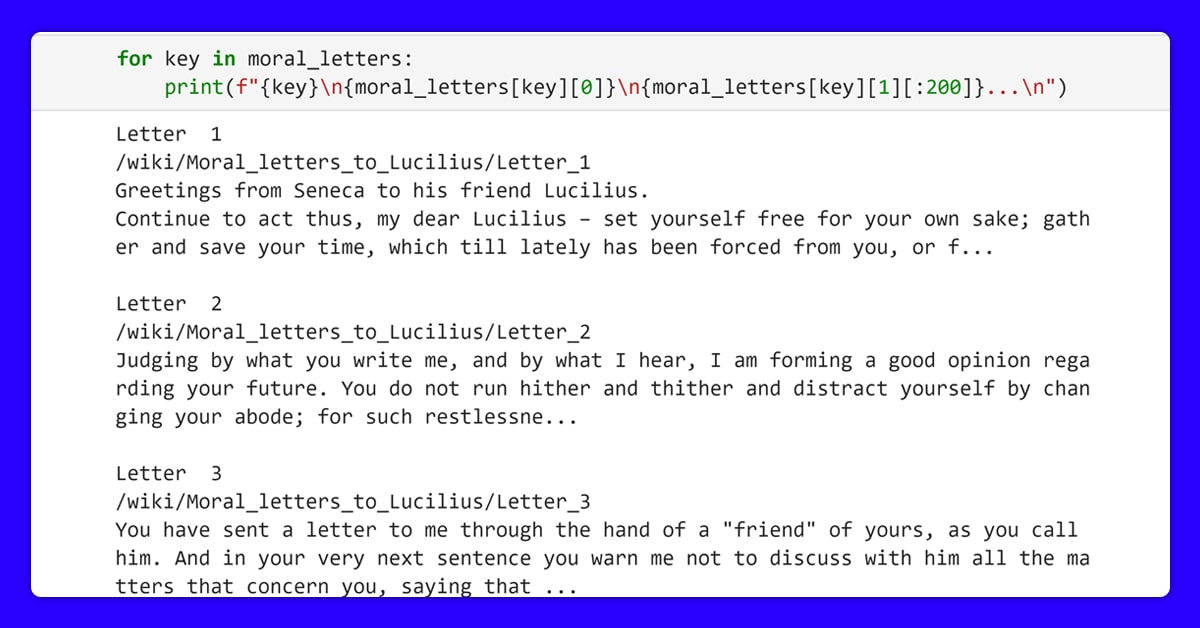

Now we need to define a function that parses the HTML from each letter's page. This is quite easy, because the letter text is contained in the <p> elements of the page.

So, as before, we collect all the <p> elements. Then we apply a small amount of formatting to make the text more readable before returning the letter text.

    import re

    # create function to pull letter from webpage (pulls text within <p> elements)
    def pull_letter(http):
        # get html from webpage given by 'http'
        html = requests.get(http).text
        # parse into a beautiful soup object
        soup = BeautifulSoup(html, "html.parser")
        # build text contents within all p elements
        txt = '\n'.join([x.text for x in soup.find_all('p')])
        # replace extended whitespace (runs of spaces, tabs, non-breaking spaces) with single space
        txt = re.sub(r'[^\S\n]+', ' ', txt)
        # remove webpage references ('[1]', '[2]', etc)
        txt = re.sub(r'\[\d+\]', '', txt)
        # remove all number bullet points that Seneca uses ('1.', '2.', etc)
        txt = re.sub(r'\d+\. ', '', txt)
        # remove double newlines
        txt = txt.replace("\n\n", "\n")
        # and return the result
        return txt
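To see what the clean-up steps do, the sketch below applies the same sequence of substitutions to a short made-up string. The clean_text helper and the sample sentence are illustrative assumptions; the whitespace step here collapses runs of spaces, tabs, and non-breaking spaces, which is one reasonable reading of "extended whitespace".

```python
import re


def clean_text(txt):
    """Apply the letter clean-up steps to an already-extracted string."""
    # collapse runs of non-newline whitespace (spaces, tabs, \xa0) to one space
    txt = re.sub(r"[^\S\n]+", " ", txt)
    # remove footnote references such as '[1]'
    txt = re.sub(r"\[\d+\]", "", txt)
    # remove numbered bullet points such as '1. '
    txt = re.sub(r"\d+\. ", "", txt)
    # remove double newlines
    txt = txt.replace("\n\n", "\n")
    return txt


# Made-up sample with a non-breaking space, a footnote marker and numbering.
raw = "1. Continue to act thus,\xa0 my dear Lucilius.[1]\n\n2. Set yourself free."
cleaned = clean_text(raw)
print(cleaned)
```

Note that the dot in the bullet-point pattern is escaped. An unescaped `.` matches any character, so a pattern like `\d+. ` would also strip things such as "12, " from the middle of a sentence.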

Omnis Epistulae

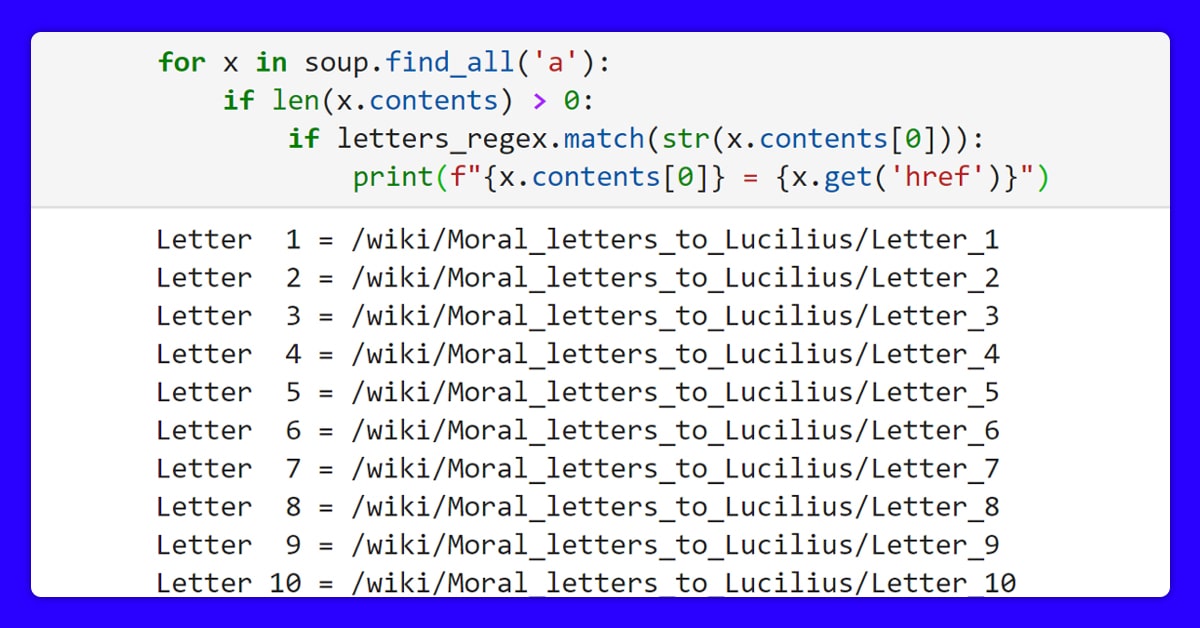

Using the pull_letter function and letters_regex, we can read every letter and store the results in a dictionary called moral_letters.

    # compile RegEx for finding 'Letter 12', 'Letter 104' etc
    letters_regex = re.compile(r"^Letter\s+[0-9]{1,3}$")

    # create dictionary containing letter number: [local href, letter contents]
    # for all that satisfy above RegEx
    moral_letters = {
        x.contents[0]: [
            x.get('href'),
            pull_letter(f"https://en.wikisource.org{x.get('href')}"),
        ]
        for x in soup.find_all('a')
        if len(x.contents) > 0
        if letters_regex.match(str(x.contents[0]))
    }  # pull_letter function defined in hello_lucilius_letter.py

At this point, we have all the data we need, neatly stored in moral_letters.

Praeparatio

Formatting the data into an NLP-friendly format is easy to code, although somewhat more abstract.

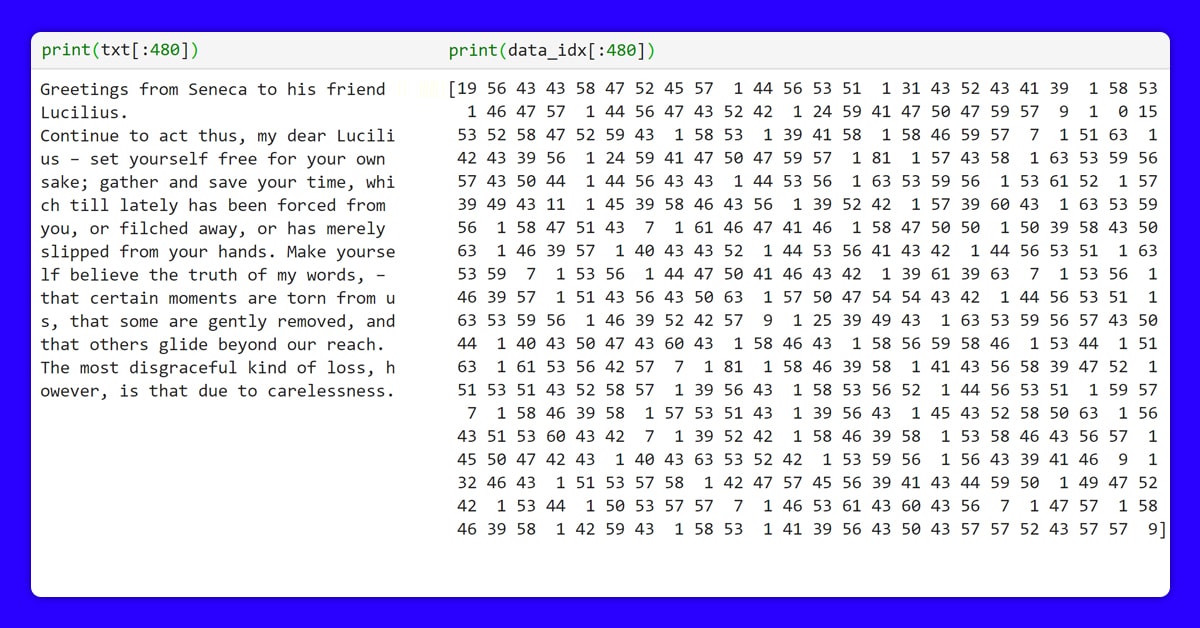

We need to convert the text we have collected into numbers, creating a numeric representation of the data that we will call data_idx.

To do so, we build a char2idx dictionary, which stands for character-to-index. It converts characters such as 'a', 'b', and 'c' into indices such as 0, 1, and 2.

Each character in char2idx must map to a unique index. To achieve this, we create a set of all the characters in the data (a set contains only unique values). This set of characters is known as the vocabulary, which we will call vocab.

    import numpy as np

    # join the moral letters dictionary into a single string
    txt = "\n".join([moral_letters[key][1] for key in moral_letters])

    # create vocab from text string (txt)
    vocab = sorted(set(txt))

    # create char2idx mappings from the vocabulary
    char2idx = {c: i for i, c in enumerate(vocab)}

    # converting data from characters to indexes
    data_idx = np.array([char2idx[c] for c in txt])
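As a small worked example of the encoding, the sketch below builds the vocabulary and char2idx mapping for a short made-up string and encodes it. The sample string and the inverse idx2char dictionary are additions for illustration only; a plain list stands in for the NumPy array so the snippet needs no third-party packages.

```python
# Made-up sample text standing in for the joined letters.
sample = "stoic stoa"

# sorted set of unique characters: the vocabulary
vocab = sorted(set(sample))

# character -> index, and the (hypothetical) inverse index -> character
char2idx = {c: i for i, c in enumerate(vocab)}
idx2char = {i: c for c, i in char2idx.items()}

# encode the text as a list of indices, then decode it back
encoded = [char2idx[c] for c in sample]
decoded = "".join(idx2char[i] for i in encoded)

print(vocab)
print(encoded)
print(decoded)
```

Because vocab is sorted, the mapping is deterministic: the same text always produces the same indices, and the inverse dictionary recovers the original string exactly.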

Now let's read Seneca's first letter in the new format.

Though it looks like gibberish, this is exactly what we need when formatting text data for NLP.

All that remains is to slice and shuffle the data, after which it is ready to be fed into a model. Here, we use TensorFlow.

Simplex Munditiis

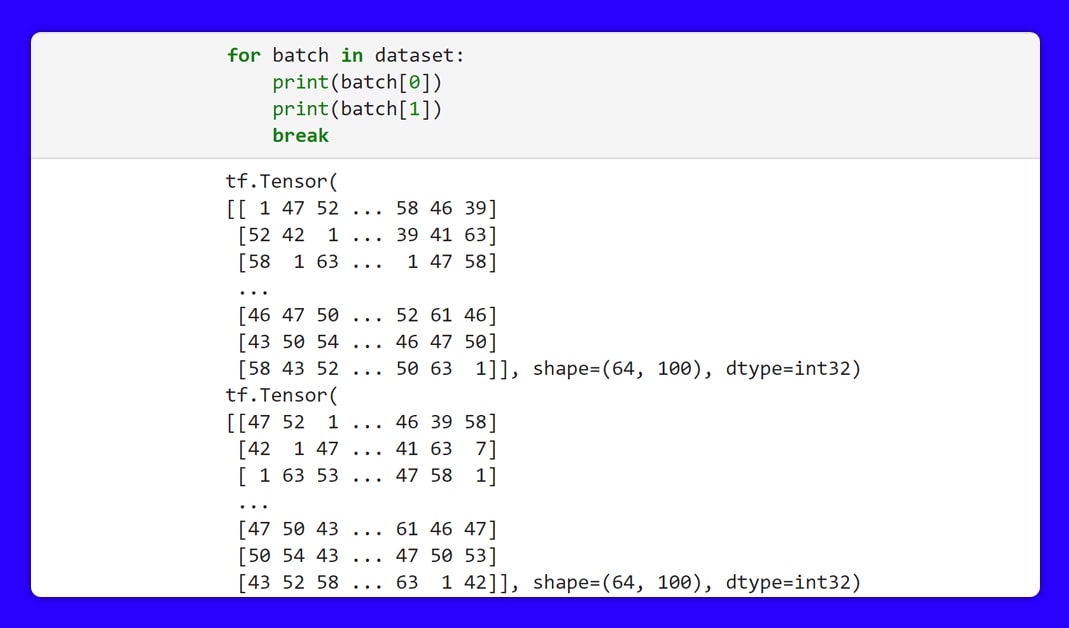

TensorFlow provides tf.data.Dataset, an API we can use to simplify the dataset transformation process. To create a dataset from the NumPy array data_idx, we use tf.data.Dataset.from_tensor_slices.

During training, we look at only one segment of text at a time. To split the data into sequences, we use the Dataset .batch method.

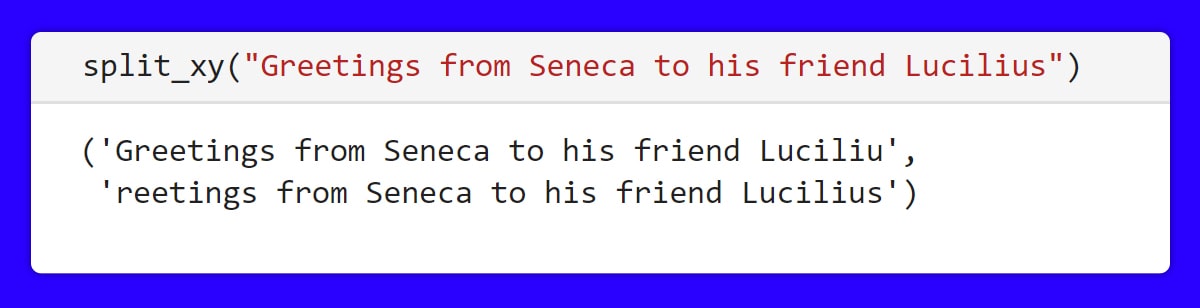

Because this is a text generator, the target data consists of the input data shifted one character forward. For this, we define a function named split_xy.

Finally, we shuffle the sequences with .shuffle and group them into batches of 64 with .batch.

    import tensorflow as tf

    # define the input/target data splitting function
    def split_xy(seq):
        input_data = seq[:-1]
        target_data = seq[1:]
        return input_data, target_data

    SEQLEN = 100  # the number of characters in a single sequence
    BATCHSIZE = 64  # how many sequences in a single training batch
    BUFFER = 10000  # how many elements are contained within a single shuffling space

    # create training dataset with tf dataset api
    dataset = tf.data.Dataset.from_tensor_slices(data_idx)

    # batch method allows conversion of individual characters to sequences of a desired size
    sequences = dataset.batch(SEQLEN + 1, drop_remainder=True)

    # mapping dataset sequences to input-target segments
    dataset = sequences.map(split_xy)

    # shuffle the dataset AND batch into batches of 64 sequences
    dataset = dataset.shuffle(BUFFER).batch(BATCHSIZE, drop_remainder=True)
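The sequence and input/target steps can be hard to picture, so here is a rough pure-Python imitation on a short made-up string. The make_sequences helper is a hypothetical stand-in for .batch(SEQLEN + 1, drop_remainder=True), and raw characters are used instead of indices purely for readability; it is a sketch of the idea, not of how TensorFlow implements it.

```python
def split_xy(seq):
    """Input is everything but the last item; target is shifted one forward."""
    return seq[:-1], seq[1:]


def make_sequences(data, seq_len):
    """Cut data into chunks of seq_len + 1, dropping any incomplete remainder."""
    size = seq_len + 1
    n_full = len(data) // size
    return [data[i * size:(i + 1) * size] for i in range(n_full)]


# Made-up text; a sequence length of 4 keeps the output easy to read.
text = "Greetings from Seneca."
pairs = [split_xy(seq) for seq in make_sequences(text, seq_len=4)]

for input_data, target_data in pairs:
    print(repr(input_data), "->", repr(target_data))
```

Each chunk holds one character more than the sequence length so that, after the shift, both the input and the target contain exactly seq_len characters. The trailing "a." does not fill a whole chunk and is dropped.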

The resulting dataset contains 175 batches (roughly len(txt) / (SEQLEN * BATCHSIZE)). Each batch holds an input array and a target array, created by the split_xy function.
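The batch count can be worked out more precisely by following the two drop_remainder steps: the text is first cut into sequences of SEQLEN + 1 characters, and those sequences are then grouped into batches. The sketch below does that arithmetic; the count_batches helper and the 20,000-character length are illustrative assumptions, not the real size of the letters.

```python
def count_batches(n_chars, seq_len, batch_size):
    """Number of full batches after both drop_remainder=True steps."""
    # each sequence consumes seq_len + 1 characters; the remainder is dropped
    n_sequences = n_chars // (seq_len + 1)
    # sequences that do not fill a whole batch are dropped too
    return n_sequences // batch_size


# Toy example: 20,000 characters with the article's SEQLEN and BATCHSIZE.
n_batches = count_batches(20_000, seq_len=100, batch_size=64)
print(n_batches)  # 198 full sequences -> 3 full batches
```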

This data is now fully prepared, ready to be input into a model for training.

Qui Conclusioni

Despite its apparent complexity, implementing machine learning is no longer a huge task reserved for the brightest minds of our time.

We now live in a time where we can teach a computer to reproduce philosophy from some of the greatest minds in human history, and it is fairly easy to do.

This is an exciting time, and we hope that we will all make the most of it.
