At X-Byte, we decided to treat this project as one more incentive to improve our Python skills. By the end, we will have done two things:

- Extract as many search results as possible from a major real estate site in Lagos, Nigeria, and build a database of all available listings.

- Use the collected listings for exploratory data analysis (EDA) and visualizations, and try to draw insights from them.

The website we will scrape is Privateproperty.com.ng, a real estate site with plenty of Lagos listings to extract. You may prefer a different website, in which case you should be able to adapt the code quickly.

Before getting to the code snippets, let’s briefly summarize what we will do. We will use the results page of a simple search on the Private Property site, where a few parameters can be set up front (such as properties for rent), to ask for the full list of results within Lagos.

Then we send a request to the website and read its response. The result is HTML code, from which we pick out the elements we want for the final table. Once we have decided what to take from each property in the search results, we need a loop that opens every results page and extracts the data.

Let’s Start!

DATA SCRAPING PROCEDURE

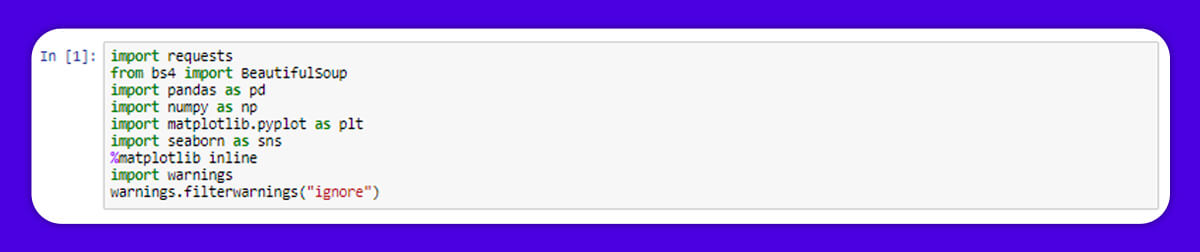

Import Libraries

As in most projects, we start by importing the modules we will use. Beautiful Soup will handle the HTML we fetch. Before scraping, make sure the website you want to access permits it: append “/robots.txt” to the site’s domain, and the file that comes back lists the rules about what crawlers may and may not access.
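The robots.txt check can also be done in code. The sketch below uses Python’s standard `urllib.robotparser` module on a made-up robots.txt body, so it runs without touching the network. The rules in `SAMPLE_ROBOTS_TXT` and the helper name `is_allowed` are assumptions for illustration; the real file for the site may say something quite different.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, invented for this example.
# Check the site's actual file before scraping; it may differ.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""


def is_allowed(robots_txt, url, user_agent="*"):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(SAMPLE_ROBOTS_TXT, "https://www.privateproperty.com.ng/property-for-rent"))
print(is_allowed(SAMPLE_ROBOTS_TXT, "https://www.privateproperty.com.ng/admin/"))
```

To check the live file instead of a sample string, `RobotFileParser` also offers `set_url()` followed by `read()`, which downloads and parses the file in one step.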

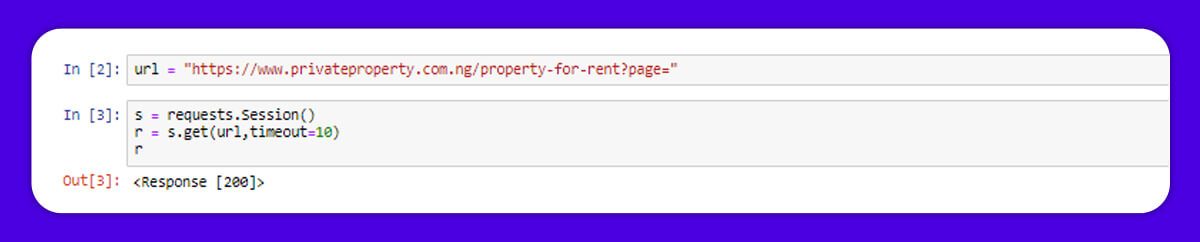

Next, define the URL path and test whether we can communicate with the website. One pitfall of Python Requests is that, if you don’t specify a timeout, a request can wait indefinitely for a response. That is rarely what you want, so it’s good practice to set a timeout value on every request. The request can return many status codes, but “200” generally means you’re good to go. You can see the full list of codes here.
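A minimal sketch of such a test request follows. The URL is the base URL used by the crawler later in this article with the page parameter dropped; the helper name `fetch` and the 10-second timeout are assumptions chosen for illustration, not values the site requires.

```python
import requests

# Base results URL, taken from the crawler later in the article.
URL = "https://www.privateproperty.com.ng/property-for-rent"


def fetch(url, timeout=10):
    """Return the response for url, or None if the request fails or times out."""
    try:
        # Without timeout=..., requests would wait for the server indefinitely.
        response = requests.get(url, timeout=timeout)
    except requests.exceptions.RequestException as exc:
        print(f"Request failed: {exc}")
        return None
    # 200 means the page was served normally.
    print(f"Status code: {response.status_code}")
    return response


# Example call (performs a real network request):
# r = fetch(URL)
```

Catching `requests.exceptions.RequestException` covers timeouts as well as connection errors, so the caller only has to check for `None`.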

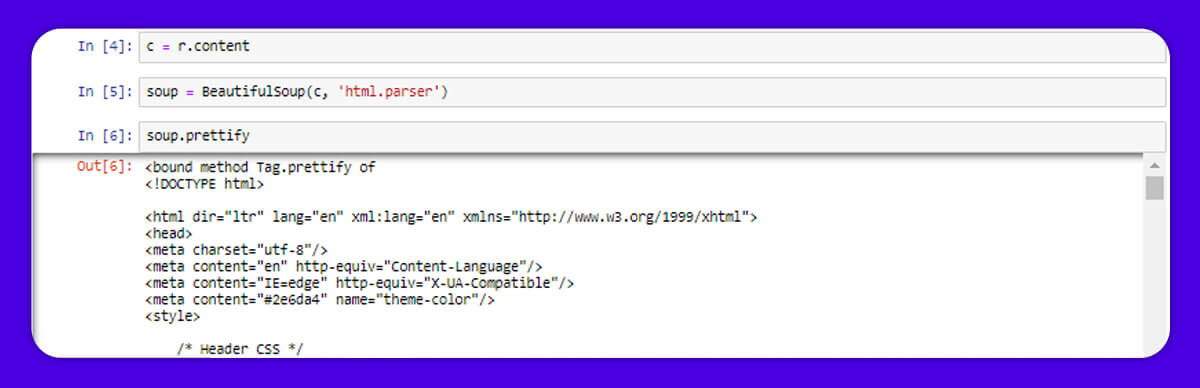

Time for Making Soup!

Now we are ready to start searching through what we received from the website. We define a BeautifulSoup object, which will help us read the HTML.

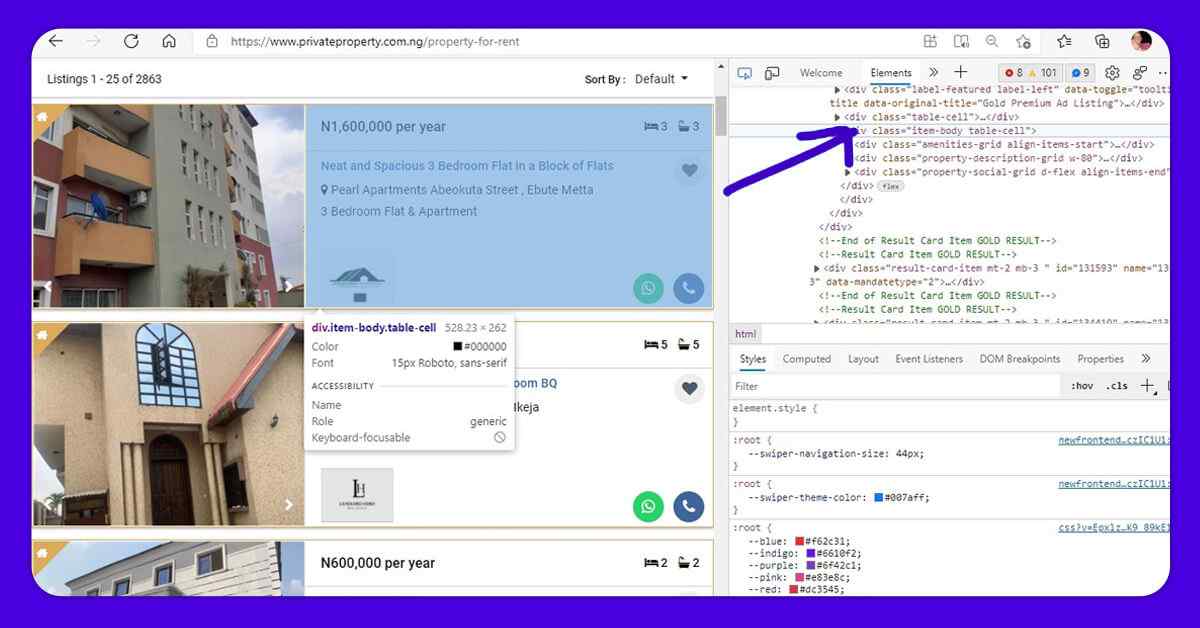

A significant part of building a scraping tool is navigating the source code of the web pages we are extracting from. The text shown above is only a small part of the entire page. You can also find the position of any particular element in the HTML document, such as a property’s price: right-click it and choose Inspect, or press Ctrl + Shift + I.

The prettify() method in BeautifulSoup helps us see how the tags are nested within the document.

Before scraping the individual features, we need to identify each result on a page. To know which tags to call, we can follow them upward from a price tag until we reach something that looks like the main container for a single result. We can see that below:
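As a rough sketch of these two steps, the snippet below builds a BeautifulSoup object from a small hand-written HTML fragment and prints it with prettify(). The fragment is made up for illustration: it borrows class names that the crawler later in this article looks for, but the markup and values on the real page will differ.

```python
from bs4 import BeautifulSoup

# Hand-written fragment for illustration only; not copied from the real site.
SAMPLE_HTML = (
    '<div class="item-body table-cell">'
    '<h2 class="property-title">Example listing</h2>'
    '<div class="info-row price">100 per year</div>'
    '</div>'
)

# "html.parser" is the parser bundled with Python, so nothing extra is needed.
soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# prettify() returns the markup as a string, one tag per line,
# indented according to how deeply each tag is nested.
pretty = soup.prettify()
print(pretty)
```

With a real response, the same object would be built from the downloaded page content instead of `SAMPLE_HTML`.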

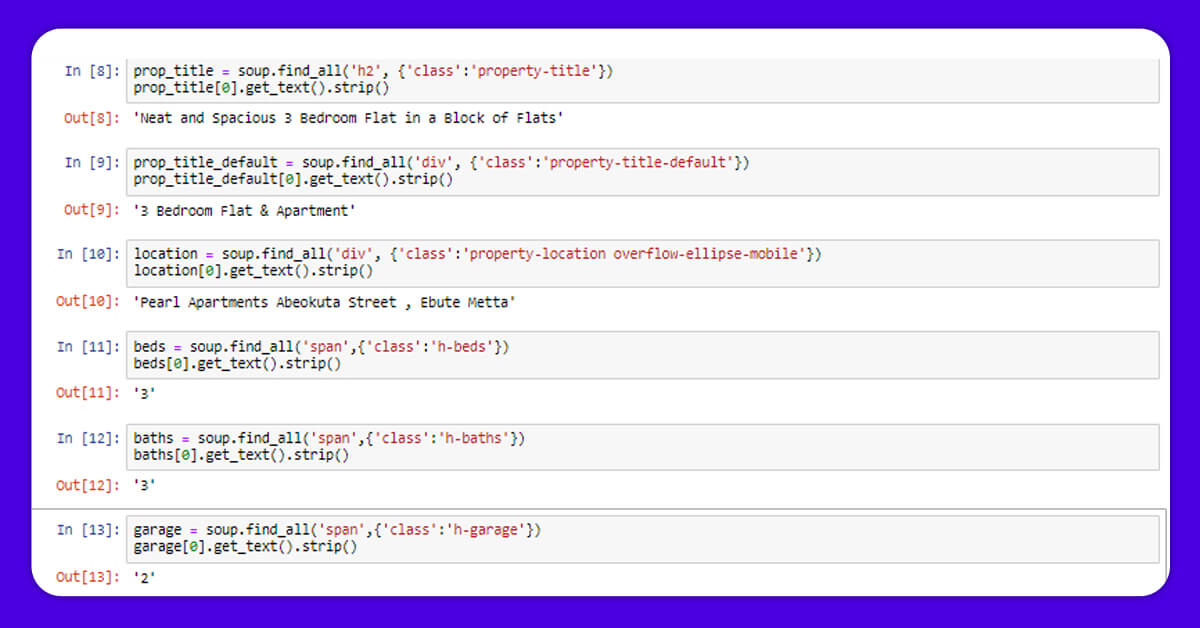

The examples given here should be enough for you to do your own research. You can learn a lot by working with the HTML structure and manipulating the returned values until you find what you need.
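The walk from a price tag up to its result container can be tried on a toy document. In the sketch below the HTML is invented: the `results` wrapper and the listing values are assumptions, while the other class names are the ones the crawler in this article searches for. It lists the ancestors of the first price tag, then collects every result container in one call.

```python
from bs4 import BeautifulSoup

# Invented markup for illustration; the real page is larger and may differ.
SAMPLE_HTML = """
<div class="results">
  <div class="item-body table-cell">
    <h2 class="property-title">Listing A</h2>
    <div class="info-row price">100</div>
  </div>
  <div class="item-body table-cell">
    <h2 class="property-title">Listing B</h2>
    <div class="info-row price">200</div>
  </div>
</div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# Start at the first price tag and climb toward the document root.
price_tag = soup.find("div", {"class": "info-row price"})
ancestors = [
    (parent.name, parent.get("class"))
    for parent in price_tag.parents
    if parent.name != "[document]"  # skip the BeautifulSoup root object
]
print(ancestors)

# The first ancestor is the per-result container, so search for all of them.
containers = soup.find_all("div", {"class": "item-body table-cell"})
titles = [c.find("h2", {"class": "property-title"}).get_text().strip() for c in containers]
print(titles)
```

Once the container class is known, every field can be looked up relative to a container rather than to the whole page, which keeps the values of different listings from getting mixed up.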

Let’s Extract Some Pages!

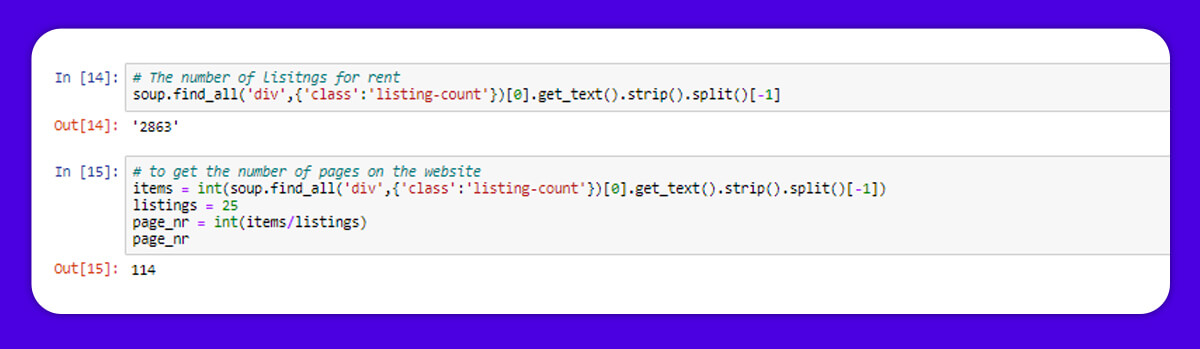

Once you are happy with the fields to scrape and have found a way to extract them from each result container, it’s time to set up the base of the crawler. First, we check the total number of pages available for extraction, then we create the base crawler. At the time of checking, there were 114 pages and 2,863 listings.
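The page check comes down to a little arithmetic, sketched below under stated assumptions. The helper names `parse_total` and `page_numbers` and the sample count text are hypothetical; 25 listings per page is the value used in the crawler. Rounding up keeps a partially filled last page, and because `range()` excludes its end value, 1 is added so the last page is actually visited.

```python
import math
import re


def parse_total(count_text):
    """Return the last number in count_text as an int, ignoring thousands separators."""
    numbers = re.findall(r"\d[\d,]*", count_text)
    if not numbers:
        raise ValueError(f"no number found in {count_text!r}")
    return int(numbers[-1].replace(",", ""))


def page_numbers(total_listings, per_page):
    """Return the page numbers to visit, starting at 1 and including the last page."""
    last_page = math.ceil(total_listings / per_page)
    return range(1, last_page + 1)


# Hypothetical count text; the wording on the real page may differ.
total = parse_total("1 - 25 of 2,863")
pages = page_numbers(total, 25)
print(total, pages[0], pages[-1])
```

With these figures the sketch yields 115 pages, one more than the 114 the site reported, so the per-page value of 25 is only approximate. If the extra page turns out to contain no result containers, the crawler simply collects nothing from it.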

Creating a Base Crawler

import math

import pandas as pd
import requests
from bs4 import BeautifulSoup

l = []
base_url = "https://www.privateproperty.com.ng/property-for-rent?page="

# `soup` is the BeautifulSoup object created from the results page earlier.
# The last token of the listing-count text is the total number of listings.
items = int(soup.find_all('div', {'class': 'listing-count'})[0].get_text().strip().split()[-1])
listings = 25  # listings per results page
page_nr = math.ceil(items / listings)  # round up so a partial last page is included

# range() excludes its end value, so add 1 to reach the last page.
for page in range(1, page_nr + 1):
    print(base_url + str(page))
    # Always set a timeout so a stalled request cannot hang the crawler.
    r = requests.get(base_url + str(page), timeout=10)
    c = r.content
    soup = BeautifulSoup(c, "html.parser")
    classes = ["item-body table-cell"]
    for class_ in classes:
        # One container per search result.
        real = soup.find_all("div", {"class": class_})
        for i in range(len(real)):
            d = {}
            d["page"] = page
            # find() returns None when a field is missing, so .get_text()
            # raises AttributeError; in that case the field is stored as None.
            try:
                d["title"] = real[i].find('h2', {'class': 'property-title'}).get_text().strip()
            except (IndexError, TypeError, AttributeError):
                d["title"] = None
            try:
                d["description"] = real[i].find('div', {'class': 'property-title-default'}).get_text().strip()
            except (IndexError, TypeError, AttributeError):
                d["description"] = None
            try:
                d["bedrooms"] = real[i].find('span', {'class': 'h-beds'}).get_text().strip()
            except (IndexError, TypeError, AttributeError):
                d["bedrooms"] = None
            try:
                d["bathrooms"] = real[i].find('span', {'class': 'h-baths'}).get_text().strip()
            except (IndexError, TypeError, AttributeError):
                d["bathrooms"] = None
            try:
                d["garage"] = real[i].find('span', {'class': 'h-garage'}).get_text().strip()
            except (IndexError, TypeError, AttributeError):
                d["garage"] = None
            try:
                d["location"] = real[i].find('div', {'class': 'property-location overflow-ellipse-mobile'}).get_text().strip()
            except (IndexError, TypeError, AttributeError):
                d["location"] = None
            # Last word of the location text.
            try:
                d["location_s"] = real[i].find('div', {'class': 'property-location overflow-ellipse-mobile'}).get_text().strip().split()[-1]
            except (IndexError, TypeError, AttributeError):
                d["location_s"] = None
            # First token of the price text.
            try:
                d["price"] = real[i].find('div', {'class': 'info-row price'}).get_text().strip().split()[0]
            except (IndexError, TypeError, AttributeError):
                d["price"] = None
            l.append(d)

# print(l)

# One row per listing.
df = pd.DataFrame(l)
print(df.head())

At X-Byte, we have extracted over 2,500 properties and now have the original dataset. There is still some cleaning and pre-processing to do, but we have already completed the complicated part.
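The article does not show its cleaning steps, so the following is only a sketch of one plausible step: turning scraped text columns into numbers. The rows in `raw` are made up to resemble what the crawler stores (strings, or None for missing fields), and the helper `to_number` is hypothetical.

```python
import pandas as pd

# Made-up rows in the shape the crawler produces; real values will differ.
raw = pd.DataFrame({
    "price": ["1,500,000", "₦800,000", None],
    "bedrooms": ["3 Bedrooms", "2", None],
})


def to_number(series):
    """Drop everything except digits and dots, then convert to numbers.

    Missing or unparseable values become NaN instead of raising an error.
    """
    digits = series.str.replace(r"[^\d.]", "", regex=True)
    return pd.to_numeric(digits, errors="coerce")


clean = raw.assign(
    price=to_number(raw["price"]),
    bedrooms=to_number(raw["bedrooms"]),
)
print(clean)
```

Keeping missing values as NaN rather than dropping the rows leaves the decision about incomplete listings to the analysis stage.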

Data Visualization

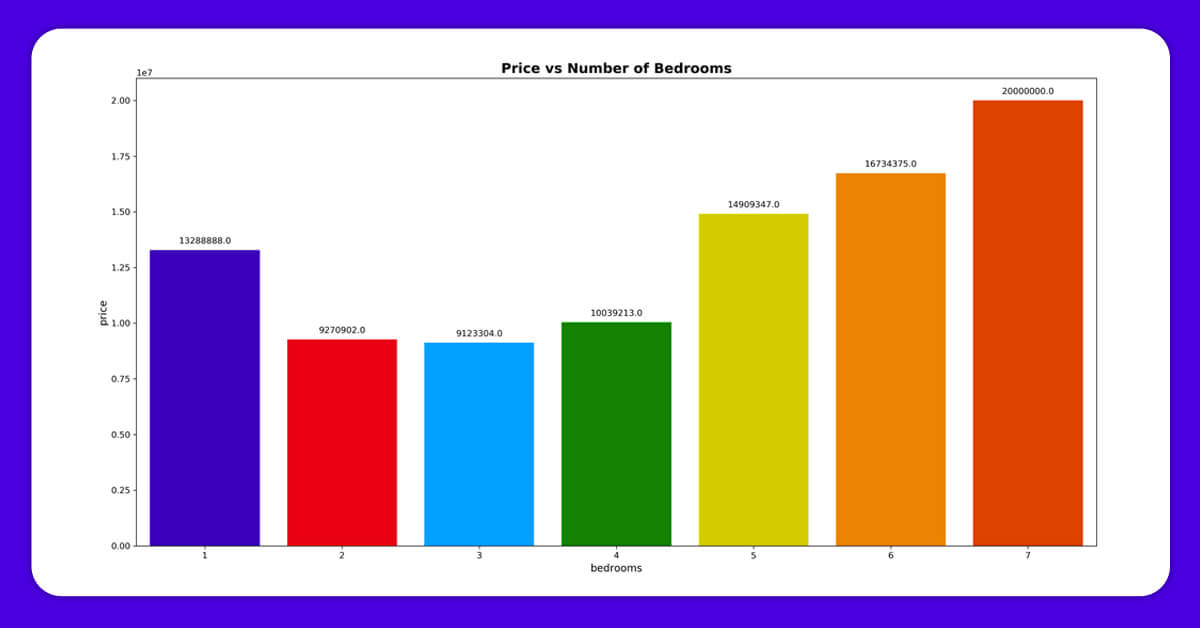

1. How does the total number of bedrooms affect the price of a building?

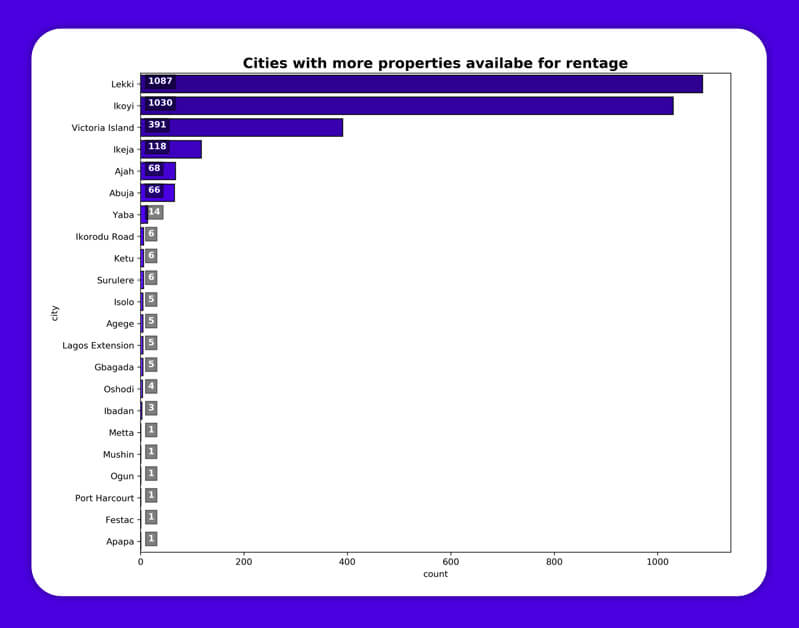

2. This plot shows the cities with the most properties available for rent.

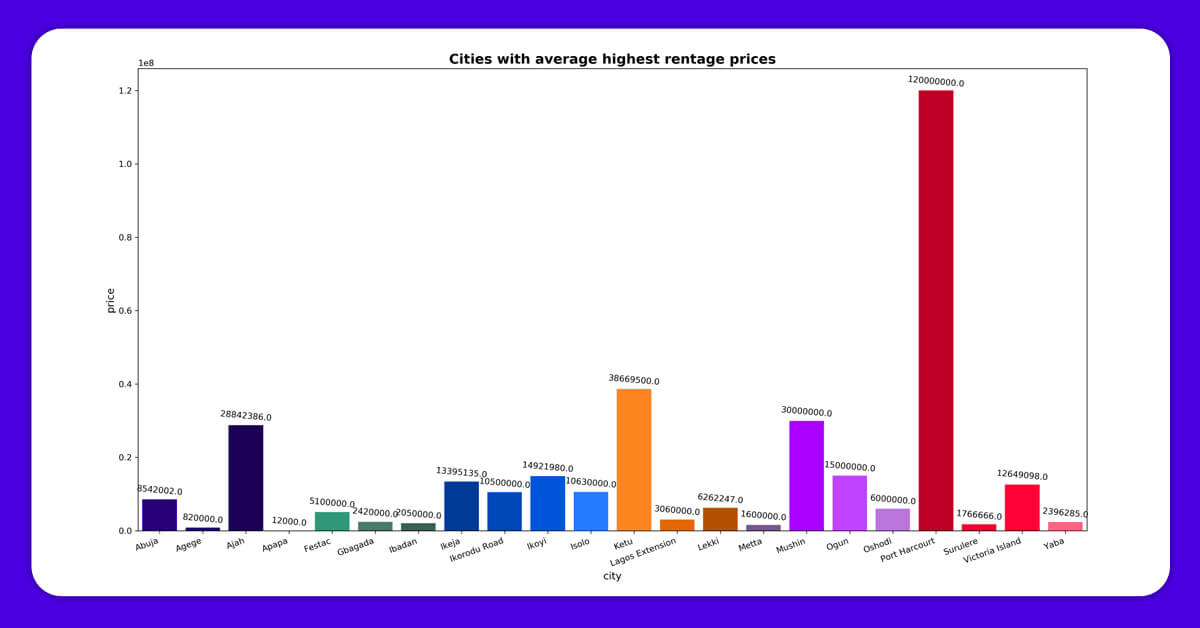

3. This plot shows the average price of buildings in each city.

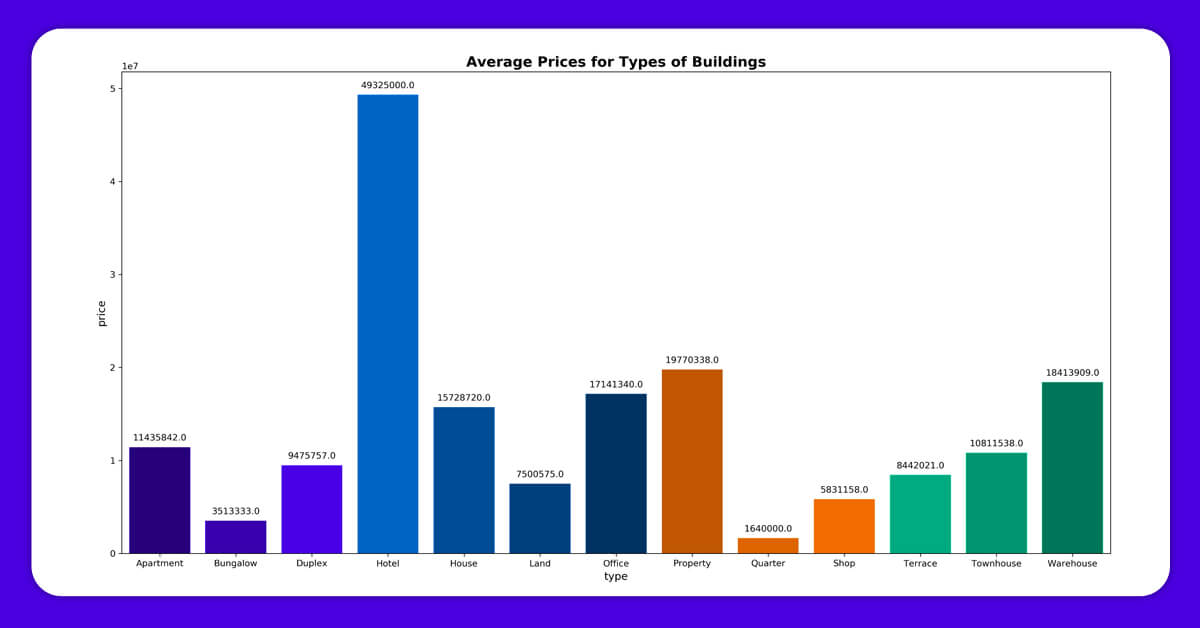

4. This plot shows the average prices for various kinds of buildings.
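The numbers behind plots like these are simple aggregations. The sketch below computes three of them with pandas on a handful of made-up rows; the `city` column name and every value are assumptions for illustration, since the article does not show how its plotting data was prepared.

```python
import pandas as pd

# Made-up cleaned rows for illustration only; not real listings or prices.
listings = pd.DataFrame({
    "city": ["Lekki", "Lekki", "Ikeja", "Yaba"],
    "bedrooms": [3, 2, 3, 1],
    "price": [3_000_000, 2_000_000, 1_500_000, 600_000],
})

# Average price by number of bedrooms.
avg_price_per_bedrooms = listings.groupby("bedrooms")["price"].mean()

# Number of listings per city, most listings first.
listings_per_city = listings["city"].value_counts()

# Average price per city, most expensive first.
avg_price_per_city = (
    listings.groupby("city")["price"].mean().sort_values(ascending=False)
)

print(avg_price_per_bedrooms)
print(listings_per_city)
print(avg_price_per_city)
```

Each result is a pandas Series, so it can be drawn directly with `Series.plot.bar()` from Matplotlib-backed pandas plotting, or passed to a Seaborn bar plot.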

Conclusion

This project showed a simple way to collect real estate data from a property website with BeautifulSoup, followed by data cleaning and pre-processing, and data visualization with the Matplotlib and Seaborn libraries.

If you want to scrape real estate property data using Python, contact X-Byte Enterprise Crawling or ask for a free quote!