Many developers believe that web scraping is hard, slow, and difficult to scale, particularly when headless browsers are involved. In fact, you can scrape many modern websites without a headless browser at all, and doing so is fast, simple, and highly scalable.

Rather than using Puppeteer, Selenium, or another headless browser, we will use Python Requests to show how this works. We will scrape data from the public APIs that most modern websites call from their front end.

On traditional web pages, your goal is to parse the HTML and extract the relevant data. On modern sites, the initial response often contains very little HTML, because the data is fetched asynchronously after the first request. Most people reach for headless browsers because they can execute JavaScript, make the follow-up requests, and render the entire page.

Scraping Public APIs

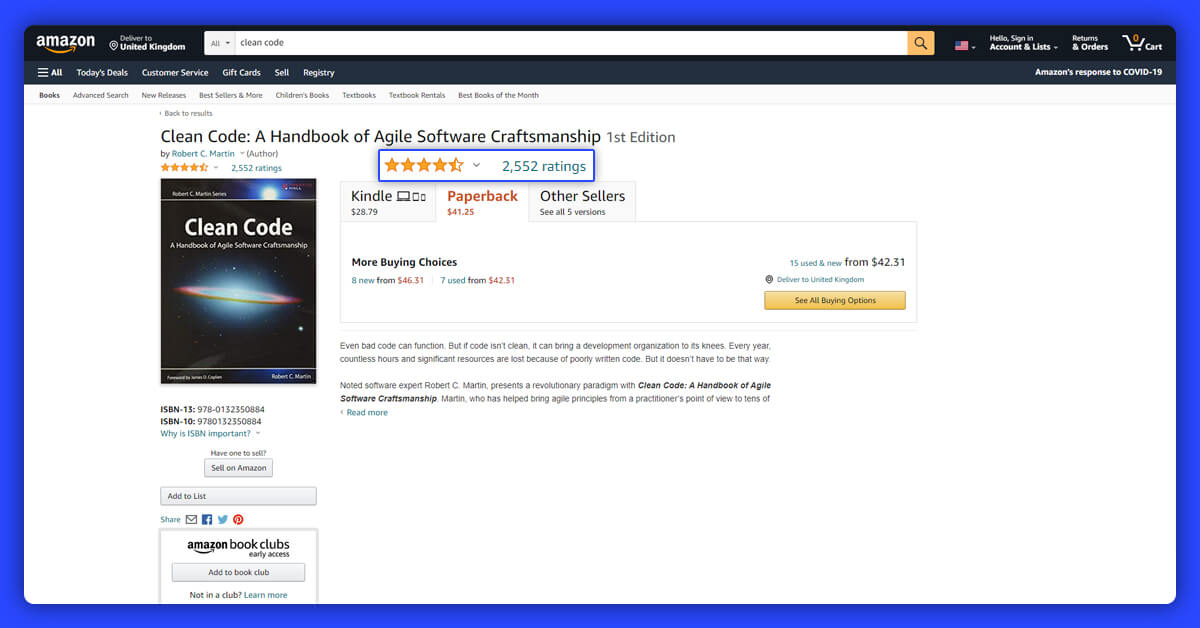

Let’s see how you can use the APIs that websites call to fetch their data. We will extract Amazon product reviews as an example, and the same approach works for other sites. If you follow the procedure below, you may be surprised by how simple it is.

The objective is to scrape all the reviews for a specific product.

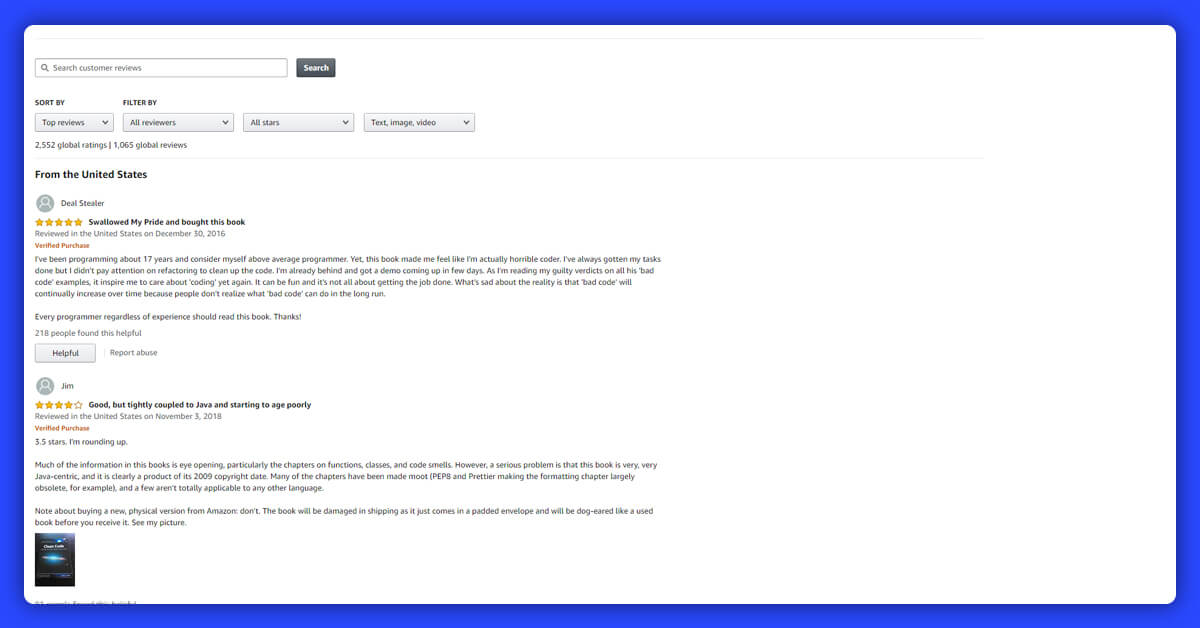

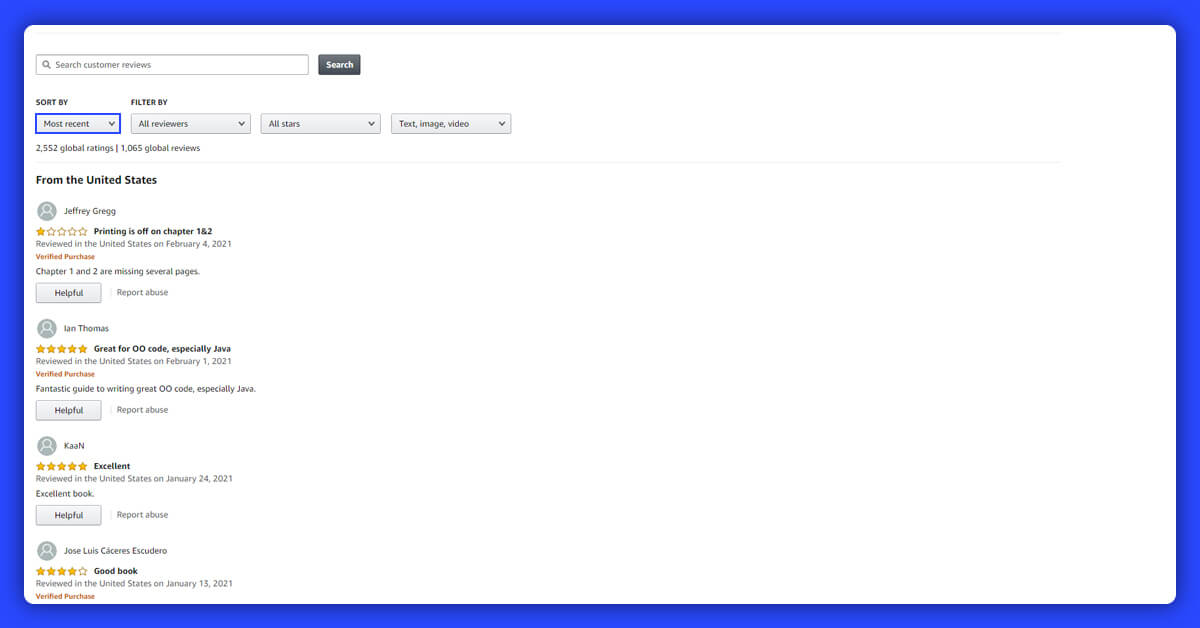

After opening the product page, click on the ratings and choose “See all reviews”. This is what we see:

The page lists the individual reviews. Our objective is to scrape that data without using a headless browser to render the page.

The procedure is simple and only needs the browser’s developer tools. We have to trigger an update of the reviews to find the request that fetches them. Most browsers only record network requests while the developer tools are open, so make sure they are open before triggering the update.

Because of analytics and tracking code running on the page, each click may produce several requests. Look through them and you will find the one that fetches the actual data.

Normally, you will get the data in a readable JSON format that you can easily transform and store.

Here, the response does not look like plain JSON, HTML, or JavaScript, but it follows a pattern that is easy to understand. Later, we will show how to parse it with Python despite the unusual format.

With the preparation done, we can move on to the code. You can write the request in whichever programming language you prefer.

To save time, we like to use a cURL converter. First, we copy the request as cURL by right-clicking on it and choosing “Copy as cURL”, as you can see in the last screenshot. Then we paste it into the converter to get the Python code.

Note 1: There are many ways to do this; we simply find this one the easiest. As long as you create a request with the same headers and parameters that the browser uses, you will be fine.

Note 2: When we want to experiment with a request, we import the cURL command into Postman so that we can play with it and understand how the endpoint works. In this guide, however, we’ll do everything in code.

    import requests
    import json
    from bs4 import BeautifulSoup as Soup
    import time

    headers = {
        'authority': 'www.amazon.com',
        'dnt': '1',
        'rtt': '250',
        'user-agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36',
        'content-type': 'application/x-www-form-urlencoded;charset=UTF-8',
        'accept': 'text/html,*/*',
        'x-requested-with': 'XMLHttpRequest',
        'downlink': '6.45',
        'ect': '4g',
        'origin': 'https://www.amazon.com',
        'sec-fetch-site': 'same-origin',
        'sec-fetch-mode': 'cors',
        'sec-fetch-dest': 'empty',
        'referer': 'https://www.amazon.com/Clean-Code-Handbook-Software-Craftsmanship/product-reviews/0132350882/ref=cm_cr_arp_d_viewopt_srt?ie=UTF8&reviewerType=all_reviews&sortBy=recent&pageNumber=1',
    }

    post_data = {
        'sortBy': 'recent',
        'reviewerType': 'all_reviews',
        'formatType': '',
        'mediaType': '',
        'filterByStar': '',
        'pageNumber': '1',
        'filterByLanguage': '',
        'filterByKeyword': '',
        'shouldAppend': 'undefined',
        'deviceType': 'desktop',
        'canShowIntHeader': 'undefined',
        'reftag': 'cm_cr_getr_d_paging_btm_next_2',
        'pageSize': '10',
        'asin': '0132350882',
        'scope': 'reviewsAjax1'
    }

    response = requests.post(
        'https://www.amazon.com/hz/reviews-render/ajax/reviews/get/ref=cm_cr_arp_d_paging_btm_next_2',
        headers=headers, data=post_data)

Let’s look at what we are doing here. We took the headers and the POST body from the request in the browser. We removed the headers that are not necessary and kept the ones needed to make the request look genuine.

The most important header is User-Agent. Without a realistic User-Agent, you can expect to be blocked frequently.

In the data we pass to the POST request, we send the language, product ID, preferred sort order, and some other parameters that we won’t explain here. It is easy to work out which values to pass by playing with the filters on the actual page and watching how the requests change. The paging is simple, as it uses pageSize and pageNumber, which are self-explanatory. When the paging is not obvious, you can interact with the page and see how the requests change.

In the POST data, we pass all the parameters as they are. A few of the important ones are:

pageNumber: The current page number

pageSize: Number of results per page

asin: Product ID

sortBy: Active sort order

The pageSize and pageNumber parameters are easy to understand. We worked out how they behave by changing the sort order and paging on the real page and watching how the request changes in the Network tab. For the product ID (asin), we looked at the page URL and noticed that it appears there, right after /dp/:

https://www.amazon.com/Clean-Code-Handbook-Software-Craftsmanship/dp/0132350882/#customerReviews
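As a small illustration, the product ID can be pulled out of such a URL with a regular expression. This is only a sketch: it assumes the ID is the 10-character alphanumeric segment that follows /dp/ or /product-reviews/, as in the two URLs that appear in this article, and the helper name extract_asin is ours.

```python
import re
from typing import Optional

# Assumption: the ASIN is a 10-character code right after /dp/ or /product-reviews/
ASIN_PATTERN = re.compile(r"/(?:dp|product-reviews)/([A-Z0-9]{10})(?:[/?#]|$)")


def extract_asin(url: str) -> Optional[str]:
    """Return the product ID found in a product URL, or None if there is none."""
    match = ASIN_PATTERN.search(url)
    return match.group(1) if match else None


product_url = "https://www.amazon.com/Clean-Code-Handbook-Software-Craftsmanship/dp/0132350882/#customerReviews"
print(extract_asin(product_url))
```

The value it prints is the one you would pass as asin in the POST data.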

To learn what each parameter does in an undocumented API, you have to trigger updates on the page and observe how each parameter changes.
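One way to make that comparison systematic is to copy the POST body of two requests (for example, before and after clicking “Next page”) and diff them. The sketch below uses illustrative captured values and a hypothetical helper, diff_params; neither comes from the site itself.

```python
from typing import Dict, Tuple


def diff_params(before: Dict[str, str], after: Dict[str, str]) -> Dict[str, Tuple[str, str]]:
    """Return the parameters whose values differ between two captured requests."""
    changed = {}
    for key in sorted(set(before) | set(after)):
        old = before.get(key, "<missing>")
        new = after.get(key, "<missing>")
        if old != new:
            changed[key] = (old, new)
    return changed


# Illustrative captures: only a subset of the POST body is shown
first_request = {"sortBy": "recent", "pageNumber": "1", "pageSize": "10", "asin": "0132350882"}
second_request = {"sortBy": "recent", "pageNumber": "2", "pageSize": "10", "asin": "0132350882"}

for name, (old, new) in diff_params(first_request, second_request).items():
    print(f"{name}: {old} -> {new}")
```

Changing one control on the page at a time keeps the diff down to the parameter you are trying to understand.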

An important note about pageSize: it can help us reduce the total number of requests needed to fetch the data. However, APIs usually will not let you use an arbitrary page size. That’s why we started with 10 and increased it to 20, at which point the number of results stopped growing. Therefore, we use the maximum page size of 20.

Note: Whenever you use a non-default page size, you make it easier for the target site to detect and block you, so do it carefully.
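The page-size experiment can be written as a small loop. This is a sketch under assumptions: fetch_count is a hypothetical callable that requests one page with the given pageSize and returns how many results came back, and fake_fetch_count stands in for it here by pretending the server caps pages at 20. It also assumes the product has more reviews than the largest size you try.

```python
from typing import Callable, Iterable


def find_max_page_size(fetch_count: Callable[[int], int], candidates: Iterable[int]) -> int:
    """Try increasing page sizes and return the largest number of results obtained."""
    best = 0
    for size in candidates:
        returned = fetch_count(size)
        if returned <= best:
            # The number of results stopped growing: the previous size was the cap
            break
        best = returned
    return best


def fake_fetch_count(size: int) -> int:
    """Stand-in for a real request: pretends the server caps pages at 20 results."""
    return min(size, 20)


print(find_max_page_size(fake_fetch_count, [10, 20, 30, 50]))
```

Against a real endpoint, each call to fetch_count is a request, so keep the candidate list short and pause between attempts.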

The next step is to understand how the paging works and to loop over the requests. There are three main ways of handling paging:

Page numbers: A page number is passed in every request: 1 for the first page, 2 for the second page, and so on.

Offset: Offsets are very common. If every page returns ten results, the second page has an offset of 10, the third page an offset of 20, and so on until you reach the last result.

Cursor: Another common way of handling paging is with cursors. The first page doesn’t need one, but the response to the first request gives you a cursor for the next request, and so on until you reach the end.
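The three styles can be sketched as generators. Everything here is illustrative: the fetch functions are in-memory stand-ins rather than real API calls, and the end-of-data signals (an empty page, or a missing cursor) are assumptions that vary from one API to another.

```python
from typing import Callable, Iterator, List, Optional, Tuple

Item = str


def by_page_number(fetch: Callable[[int], List[Item]]) -> Iterator[Item]:
    """Page numbers: request page 1, 2, 3, ... until a page comes back empty."""
    page = 1
    while True:
        items = fetch(page)
        if not items:
            return
        yield from items
        page += 1


def by_offset(fetch: Callable[[int, int], List[Item]], limit: int) -> Iterator[Item]:
    """Offset: skip `offset` results, growing the offset by `limit` per request."""
    offset = 0
    while True:
        items = fetch(offset, limit)
        if not items:
            return
        yield from items
        offset += limit


def by_cursor(fetch: Callable[[Optional[str]], Tuple[List[Item], Optional[str]]]) -> Iterator[Item]:
    """Cursor: the first request has no cursor; each response supplies the next one."""
    cursor = None
    while True:
        items, cursor = fetch(cursor)
        yield from items
        if cursor is None:
            return


# In-memory stand-ins for an API, so the sketch runs without network access
DATA = [f"review-{i}" for i in range(1, 6)]
PAGE_SIZE = 2


def fetch_page(page: int) -> List[Item]:
    start = (page - 1) * PAGE_SIZE
    return DATA[start:start + PAGE_SIZE]


def fetch_offset(offset: int, limit: int) -> List[Item]:
    return DATA[offset:offset + limit]


def fetch_cursor(cursor: Optional[str]) -> Tuple[List[Item], Optional[str]]:
    start = int(cursor) if cursor else 0
    end = start + PAGE_SIZE
    return DATA[start:end], (str(end) if end < len(DATA) else None)


print(list(by_page_number(fetch_page)))
print(list(by_offset(fetch_offset, PAGE_SIZE)))
print(list(by_cursor(fetch_cursor)))
```

All three loops walk the same five items; only the bookkeeping between requests differs.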

Here, the API uses page numbers to move through the pages of the endpoint. We have to find out what signals the last page so that we can use it to end the loop.

To do that, we go to the last page and observe what happens. Here, an easy indicator is that the “Next Page” button is disabled, as you can see in the screenshot. We’ll remember this when we write the code so that we can break out of the loop.

    page = 1
    reviews = []

    while True:
        post_data['pageNumber'] = page
        response = requests.post(
            'https://www.amazon.com/hz/reviews-render/ajax/reviews/get/ref=cm_cr_arp_d_paging_btm_next_2',
            headers=headers, data=post_data)
        data = response.content.decode('utf-8')

        for line in data.splitlines():
            try:
                payload = json.loads(line)
                html = Soup(payload[2], features="lxml")

                # Stop scraping once we reach the last page
                if html.select_one('.a-disabled.a-last'):
                    break

                review = html.select_one('.a-section.review')
                if not review:
                    # Skip unrelated sections
                    continue

                reviews.append({
                    'stars': float(review.select_one('.review-rating').text.split(' out of ')[0]),
                    'text': review.select_one('.review-text.review-text-content').text.replace("\n\n", "").strip(),
                    'date': review.select_one('.review-date').text.split(' on ')[1],
                    'profile_name': review.select_one('.a-profile-name').text
                })
            except Exception:
                # Ignore the &&& separator lines and anything else we cannot parse
                pass
        else:
            # The inner loop finished without break, so more pages remain
            print(str(len(reviews)) + ' reviews have been fetched so far on page ' + str(page))
            page += 1
            time.sleep(2)
            continue

        # The inner loop hit break: the "Next Page" button is disabled, so we stop.
        # (A break inside the for loop alone would not leave the while loop.)
        break

Let’s break down the code to see what it does:

    # We set the new page each time
    post_data['pageNumber'] = page
    # ...
    # And at the end of the loop we increase the page counter
    page += 1

Next, we split the response into lines so that we can parse them. Looking at the API’s responses, every line is either a JSON array or a string containing &&&. We took the “ask for forgiveness, not permission” approach by wrapping every json.loads call in a try/except block. This skips the &&& lines and keeps only the ones that are JSON arrays.
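The line-splitting step can be tried in isolation. The body below is made up to mimic the shape just described (JSON arrays separated by &&& lines, with HTML in the third element); it is not a captured response, and parse_json_lines is a hypothetical helper name.

```python
import json
from typing import List


def parse_json_lines(body: str) -> List[list]:
    """Keep only the lines of a response body that decode to JSON arrays."""
    parsed = []
    for line in body.splitlines():
        try:
            value = json.loads(line)
        except json.JSONDecodeError:
            # Separator lines such as &&& (and blank lines) are not JSON: skip them
            continue
        if isinstance(value, list):
            parsed.append(value)
    return parsed


# Made-up body that mimics the described format, not a real API response
sample_body = "\n".join([
    '["append", "#cm_cr-review_list", "<div>first</div>"]',
    "&&&",
    '["append", "#cm_cr-review_list", "<div>second</div>"]',
    "&&&",
])

for payload in parse_json_lines(sample_body):
    print(payload[2])
```

Catching only json.JSONDecodeError here, rather than every exception, keeps genuine bugs in the later parsing steps visible.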

After that, we process each line one by one. A few lines belong to the top and bottom sections of the page and don’t contain actual reviews, so we can safely ignore them. Finally, we extract the relevant data from the actual reviews:

    # We load the line as JSON
    payload = json.loads(line)

    # And we parse the HTML using Beautiful Soup
    html = Soup(payload[2], features="lxml")

    # Stop scraping once we reach the last page
    if html.select_one('.a-disabled.a-last'):
        break

    review = html.select_one('.a-section.review')

    # When the review section is not found we move to the next line
    if not review:
        continue

Now let’s look at how to extract the text from the HTML, which takes some knowledge of CSS selectors and a little basic string processing.

    reviews.append({
        # To get the stars we split the text that says 1.0 out of 5 for example, and just keep the first number
        'stars': float(review.select_one('.review-rating').text.split(' out of ')[0]),
        # For the text we extract it easily but we need to remove the surrounding new lines and whitespace
        'text': review.select_one('.review-text.review-text-content').text.replace("\n\n", "").strip(),
        # The date takes some simple splitting once more
        'date': review.select_one('.review-date').text.split(' on ')[1],
        # And the profile name is just a simple selector
        'profile_name': review.select_one('.a-profile-name').text
    })

We didn’t go deep into the specifics; our objective is to explain the procedure, not Python or Beautiful Soup, which are just the particular tools we used. The same procedure applies in any programming language.

The current version of the code goes through the pages and extracts the profile name, stars, date, and comment text from every review. Each review is stored in a list, which you can then process further or save. Because scraping can fail at any time, you should save the data frequently and make sure you can easily restart the scraper from where it failed.
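A minimal way to make a scraper restartable is to write a checkpoint after every page. The sketch below is one possible layout, not part of the original code: the file name, the JSON structure, and the helper names save_checkpoint and load_checkpoint are all assumptions.

```python
import json
import os
import tempfile
from typing import List, Tuple


def save_checkpoint(path: str, next_page: int, reviews: List[dict]) -> None:
    """Write progress to a temporary file, then swap it into place in one step."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w", encoding="utf-8") as handle:
        json.dump({"next_page": next_page, "reviews": reviews}, handle)
    os.replace(tmp_path, path)


def load_checkpoint(path: str) -> Tuple[int, List[dict]]:
    """Return (next_page, reviews), or a fresh start if no checkpoint exists."""
    if not os.path.exists(path):
        return 1, []
    with open(path, encoding="utf-8") as handle:
        state = json.load(handle)
    return state["next_page"], state["reviews"]


# Demonstration in a temporary directory; the file name is arbitrary
checkpoint_path = os.path.join(tempfile.mkdtemp(), "reviews_checkpoint.json")
page, reviews = load_checkpoint(checkpoint_path)  # fresh start: page 1, no reviews
reviews.append({"stars": 5.0, "profile_name": "example"})
save_checkpoint(checkpoint_path, page + 1, reviews)
print(load_checkpoint(checkpoint_path))
```

In the scraping loop, you would call load_checkpoint once before the loop and save_checkpoint after each page, so that a crash costs at most one page of work.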

In a real-life project, there are a few more things you might need to do:

Use a more robust scraping solution that supports concurrent requests, proxies, pipelines for processing and saving data, and more.

Parse all the dates into a standard format.

Extract every piece of data you can.

Build a system for running updates and refreshing the data.
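As an example of the date item in the list above, the string left after splitting on ' on ' can be converted to ISO 8601 with the standard library. This sketch assumes English dates in “Month day, year” form, such as “October 28, 2020”; other locales or layouts would need a different format string.

```python
from datetime import datetime


def normalize_review_date(raw: str) -> str:
    """Convert a date such as 'October 28, 2020' to ISO 8601 ('2020-10-28')."""
    # Assumption: English month names in "Month day, year" form
    return datetime.strptime(raw.strip(), "%B %d, %Y").date().isoformat()


print(normalize_review_date("October 28, 2020"))
```

Storing dates in this form makes them sortable as plain strings and easy to load into other tools.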

Headless browsers are good at scraping data from websites, but they are heavier. They are more dependable and less likely to break than undocumented APIs; however, there are many cases where calling the public API directly is much quicker and makes more sense. For example:

The API won’t change often

You only need the data once

Speed is important

The API returns more data than the page itself

There are many valid reasons for scraping through a website’s APIs. You could even argue that it is more respectful towards the server, as it takes fewer requests and doesn’t load the static assets.

Scrape respectfully by adding delays between requests and keeping parallel requests to the same domain to a minimum. The objective of web scraping is to use and analyze data and create something valuable out of it, not to cause problems or outages for the servers.
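One simple way to space requests out is to wrap the sequence of pages in a generator that sleeps for a random interval between items. The helper name polite and the default delay values are assumptions chosen for illustration; pick delays that suit the site you are working with.

```python
import random
import time
from typing import Callable, Iterable, Iterator, TypeVar

T = TypeVar("T")


def polite(items: Iterable[T], min_delay: float = 2.0, max_delay: float = 5.0,
           sleep: Callable[[float], None] = time.sleep) -> Iterator[T]:
    """Yield items one at a time, pausing a random interval between them."""
    for index, item in enumerate(items):
        if index > 0:
            # Randomised delay so requests are not fired at a fixed rhythm
            sleep(random.uniform(min_delay, max_delay))
        yield item


# Usage: iterate over page numbers sequentially, one request at a time
# (very short delays here only so the demonstration finishes quickly)
for page in polite(range(1, 4), min_delay=0.01, max_delay=0.02):
    print(f"would request page {page}")
```

Because the loop handles one page at a time, it also keeps parallel requests to the same domain at zero.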

X-Byte Enterprise Crawling offers professional web scraping services that can help you scrape modern websites without headless browsers.