Have you ever planned a dream vacation, only to be put off by housing prices? Or do you simply not have time to keep checking for options? If so, you will enjoy this blog.

We have built a data scraper that extracts Airbnb listing data based on user inputs (location, length of stay, number of guests, and guest type) and puts it into a well-formatted Pandas DataFrame. It then filters the listings by price, keeping only those within the user's budget, and finally emails the filtered listings to the user automatically. You only need to run a couple of Python scripts to get the results.

Let's look at the details. The project can be cloned from:

https://xbyte.io/Airbnb_scrapy

Python Modules and Libraries

For this project we mainly used these libraries:

- Selenium: A widely used framework for testing web applications. It drives a real browser through a WebDriver, so it can click and type on a website just as a person would.

- BeautifulSoup: A Python library for extracting data from HTML and XML files.

- smtplib: Defines an SMTP (Simple Mail Transfer Protocol) client session object that can be used to send emails.

- Pandas: An open-source data analysis library that is useful for working with tabular data. Its key data structures are the DataFrame and the Series.

To follow along, you need to download a WebDriver (a tool that lets Selenium navigate web pages). Since we used Chrome here, we downloaded ChromeDriver. You can download it here:

ChromeDriver: https://chromedriver.chromium.org

Source Code

Let's go through the code. Read the comments to understand more.

import requests
from bs4 import BeautifulSoup
from selenium import webdriver
from selenium.webdriver.common.keys import Keys
from selenium.webdriver.common.action_chains import ActionChains
import time
import pandas as pd

# This is the path where I stored my chromedriver
PATH = "/Users/juanpih19/Desktop/Programs/chromedriver"

# Note: this code uses the Selenium 3 API (webdriver.Chrome(PATH) and
# find_element_by_xpath). Selenium 4 replaces these with a Service object
# and find_element(By.XPATH, ...).


class AirbnbBot:

    # Class constructor that takes location, stay (Month, Week, Weekend),
    # number of guests and type of guests (Adults, Children, Infants)
    def __init__(self, location, stay, number_guests, type_guests):
        self.location = location
        self.stay = stay
        self.number_guests = number_guests
        self.type_guests = type_guests
        self.driver = webdriver.Chrome(PATH)

    # The 'search()' function will do the searching based on user input
    def search(self):
        # The driver will take us to the Airbnb website
        self.driver.get('https://www.airbnb.com')
        time.sleep(1)

        # This will find the location's tab xpath, type the desired location
        # and hit enter so we move the driver to the next tab (check in)
        location = self.driver.find_element_by_xpath('//*[@id="bigsearch-query-detached-query-input"]')
        location.send_keys(Keys.RETURN)
        location.send_keys(self.location)
        location.send_keys(Keys.RETURN)

        # It was difficult to scrape every number on the calendar
        # so both the check in and check out dates are flexible.
        flexible = location.find_element_by_xpath('//*[@id="tab--tabs--1"]')
        flexible.click()

        # Even though we have flexible dates, we can choose if
        # the stay is for the weekend or for a week or month

        # if stay is for a weekend we find the xpath, click it and hit enter
        if self.stay in ['Weekend', 'weekend']:
            weekend = self.driver.find_element_by_xpath('//*[@id="flexible_trip_lengths-weekend_trip"]/button')
            weekend.click()
            weekend.send_keys(Keys.RETURN)

        # if stay is for a week we find the xpath, click it and hit enter
        elif self.stay in ['Week', 'week']:
            week = self.driver.find_element_by_xpath('//*[@id="flexible_trip_lengths-one_week"]/button')
            week.click()
            week.send_keys(Keys.RETURN)

        # if stay is for a month we find the xpath, click it and hit enter
        elif self.stay in ['Month', 'month']:
            month = self.driver.find_element_by_xpath('//*[@id="flexible_trip_lengths-one_month"]/button')
            month.click()
            month.send_keys(Keys.RETURN)

        else:
            pass

        # Finds the guests xpath and clicks it
        guest_button = self.driver.find_element_by_xpath('/html/body/div[5]/div/div/div[1]/div/div/div[1]/div[1]/div/header/div/div[2]/div[2]/div/div/div/form/div[2]/div/div[5]/div[1]')
        guest_button.click()

        # Based on user input self.type_guests and self.number_guests

        # if type_guests are adults
        # it will add as many adults as assigned on self.number_guests
        if self.type_guests in ['Adults', 'adults']:
            adults = self.driver.find_element_by_xpath('//*[@id="stepper-adults"]/button[2]')
            for num in range(int(self.number_guests)):
                adults.click()

        # if type_guests are children
        # it will add as many children as assigned on self.number_guests
        elif self.type_guests in ['Children', 'children']:
            children = self.driver.find_element_by_xpath('//*[@id="stepper-children"]/button[2]')
            for num in range(int(self.number_guests)):
                children.click()

        # if type_guests are infants
        # it will add as many infants as assigned on self.number_guests
        elif self.type_guests in ['Infants', 'infants']:
            infants = self.driver.find_element_by_xpath('//*[@id="stepper-infants"]/button[2]')
            for num in range(int(self.number_guests)):
                infants.click()

        else:
            pass

        # Guests tab is the last tab that we need to fill before searching
        # If I hit enter the driver would not search
        # I decided to click on a random place so I could find the search button's xpath
        x = self.driver.find_element_by_xpath('//*[@id="field-guide-toggle"]')
        x.click()
        x.send_keys(Keys.RETURN)

        # I find the search button and click on it to search for all options
        search = self.driver.find_element_by_css_selector('button._sxfp92z')
        search.click()

    # This function will scrape all the information about every option
    # on the first page
    def scraping_aribnb(self):
        # Maximize the window
        self.driver.maximize_window()

        # Gets the current page source
        src = self.driver.page_source

        # We create a BeautifulSoup object and feed it the current page source
        soup = BeautifulSoup(src, features='lxml')

        # Find the class that contains all the options and store it
        # on list_of_houses variable
        list_of_houses = soup.find('div', class_="_fhph4u")

        # Type of properties list - using find_all function
        # found the class that contains all the types of properties
        # Used a list comp to append them to list_type_property
        type_of_property = list_of_houses.find_all('div', class_="_1tanv1h")
        list_type_property = [i.text for i in type_of_property]

        # Host description list - using find_all function
        # found the class that contains all the host descriptions
        # Used a list comp to append them to list_host_description
        host_description = list_of_houses.find_all('div', class_='_5kaapu')
        list_host_description = [i.text for i in host_description]

        # Number of bedrooms and bathrooms - using find_all function
        # bedrooms_bathrooms and other_amenities used the same class
        # Did some slicing so I could append each item to the right list
        number_of_bedrooms_bathrooms = list_of_houses.find_all('div', class_="_3c0zz1")
        list_bedrooms_bathrooms = [i.text for i in number_of_bedrooms_bathrooms]
        bedrooms_bathrooms = list_bedrooms_bathrooms[::2]
        other_amenities = list_bedrooms_bathrooms[1::2]

        # Date - using find_all function
        # found the class that contains all the dates
        # Used a list comp to append them to list_dates
        dates = list_of_houses.find_all('div', class_="_1v92qf0")
        list_dates = [date.text for date in dates]

        # Stars - using find_all function
        # found the class that contains all the stars
        # Used a list comp to append them to list_stars
        stars = list_of_houses.find_all('div', class_="_1hxyyw3")
        list_stars = [star.text[:3] for star in stars]

        # Price - using find_all function
        # found the class that contains all the prices
        # Used a list comp to append them to list_prices
        prices = list_of_houses.find_all('div', class_="_1gi6jw3f")
        list_prices = [price.text for price in prices]

        # putting the lists with data into a Pandas data frame
        airbnb_data = pd.DataFrame({'Type': list_type_property,
                                    'Host description': list_host_description,
                                    'Bedrooms & bathrooms': bedrooms_bathrooms,
                                    'Other amenities': other_amenities,
                                    'Date': list_dates,
                                    'Price': list_prices})

        # Saving the DataFrame to a csv file
        airbnb_data.to_csv('Airbnb_data.csv', index=False)

if __name__ == '__main__':
    vacation = AirbnbBot('New York', 'week', '2', 'adults')
    vacation.search()
    time.sleep(2)
    vacation.scraping_aribnb()

A few XPaths are not fully displayed in the snippet, but you don't need the complete XPaths to understand the project. If you need the code for personal use, you can get it from the project link given above.

The code above has two methods: search() and scraping_aribnb().

search()

This method uses Selenium to open the Airbnb website and fill in the search tabs with the values passed to the constructor, which here are “New York”, “week”, “2”, and “adults”.

Here is the procedure that the search() method follows, in plain English:

search:
    get website address
    The address will take you to Airbnb's main page
    Location, Check In, Check Out, Guests and Search options will be displayed

    # Location
    Find xpath for location
    Click on it
    Enter desired location
    Hit Enter; by hitting Enter you will move to the next option (Check In)

    # Check In - Check Out
    Check In and Check Out options are flexible
    Find xpath for Flexible
    Click on it
    Once Flexible is clicked, three options will be displayed: Weekend, Week, Month
    In the constructor the user specifies if the stay is for a week, weekend or month
    Click on the right option
    Hit Enter to move to the next option

    # Guests
    The constructor provides us with number of guests and type of guests (Adults, Children, Infants)
    Find type of guests xpath
    Click on it as many times as specified in number_guests
        for num in range(int(self.number_guests)):
            click()

    # Search
    Up to this point we have location, flexible check in and check out dates, guests
    Find xpath for search
    The xpath did not work
    Find the css selector that contains the search button
    Click on it
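The weekend, week and month branches in search() differ only in the element they click, so the choice can also be written as a lookup. This is a minimal sketch, assuming the XPaths from the listing above are still valid; `STAY_XPATHS` and `stay_xpath` are hypothetical names that are not part of the original project.

```python
# XPaths copied from the search() listing; the dictionary and helper
# names are hypothetical and only illustrate the lookup.
STAY_XPATHS = {
    "weekend": '//*[@id="flexible_trip_lengths-weekend_trip"]/button',
    "week": '//*[@id="flexible_trip_lengths-one_week"]/button',
    "month": '//*[@id="flexible_trip_lengths-one_month"]/button',
}


def stay_xpath(stay):
    """Return the XPath for a stay option, or None if it is not recognised."""
    # Stripping and lower-casing accepts 'Week', 'week', ' WEEK ', and so on.
    return STAY_XPATHS.get(stay.strip().lower())


print(stay_xpath("Week"))
print(stay_xpath("fortnight"))
```

With a helper like this, search() would need a single find-and-click step, and an unrecognised stay value becomes an explicit None instead of a silent `pass`.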

scraping_aribnb()

Once search() has filled in the information and taken us to the available listings, scraping_aribnb() extracts the data of every listing on the first page and saves it to a CSV file.
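The core of the scraping step is "collect the text of every div that has a given class". The sketch below shows that idea on a tiny hand-written HTML snippet. It uses the standard library's `html.parser` in place of BeautifulSoup so that it runs without extra packages; the class names `title` and `price`, the sample markup, and the helper names are all made up for illustration.

```python
from html.parser import HTMLParser


class DivTextCollector(HTMLParser):
    """Collect the text of every <div> that carries a given CSS class."""

    def __init__(self, css_class):
        super().__init__()
        self.css_class = css_class
        self.depth = 0       # > 0 while inside a matching <div>
        self.current = []    # text pieces of the div being read
        self.results = []    # one string per matching div

    def handle_starttag(self, tag, attrs):
        if tag != "div":
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.depth > 0:
            self.depth += 1  # nested div inside a match
        elif self.css_class in classes:
            self.depth = 1

    def handle_endtag(self, tag):
        if tag == "div" and self.depth > 0:
            self.depth -= 1
            if self.depth == 0:
                self.results.append("".join(self.current).strip())
                self.current = []

    def handle_data(self, data):
        if self.depth > 0:
            self.current.append(data)


def texts_by_class(html, css_class):
    """Rough stand-in for [i.text for i in soup.find_all('div', class_=...)]."""
    parser = DivTextCollector(css_class)
    parser.feed(html)
    return parser.results


# Made-up markup; the real Airbnb class names are obfuscated and change often.
SAMPLE = """
<div class="listing"><div class="title">Entire loft</div><div class="price">$80 / night</div></div>
<div class="listing"><div class="title">Private room</div><div class="price">$55 / night</div></div>
"""

print(texts_by_class(SAMPLE, "title"))
print(texts_by_class(SAMPLE, "price"))
```

Each call returns one string per listing, which is the shape the scraper needs before the lists are combined into a DataFrame.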

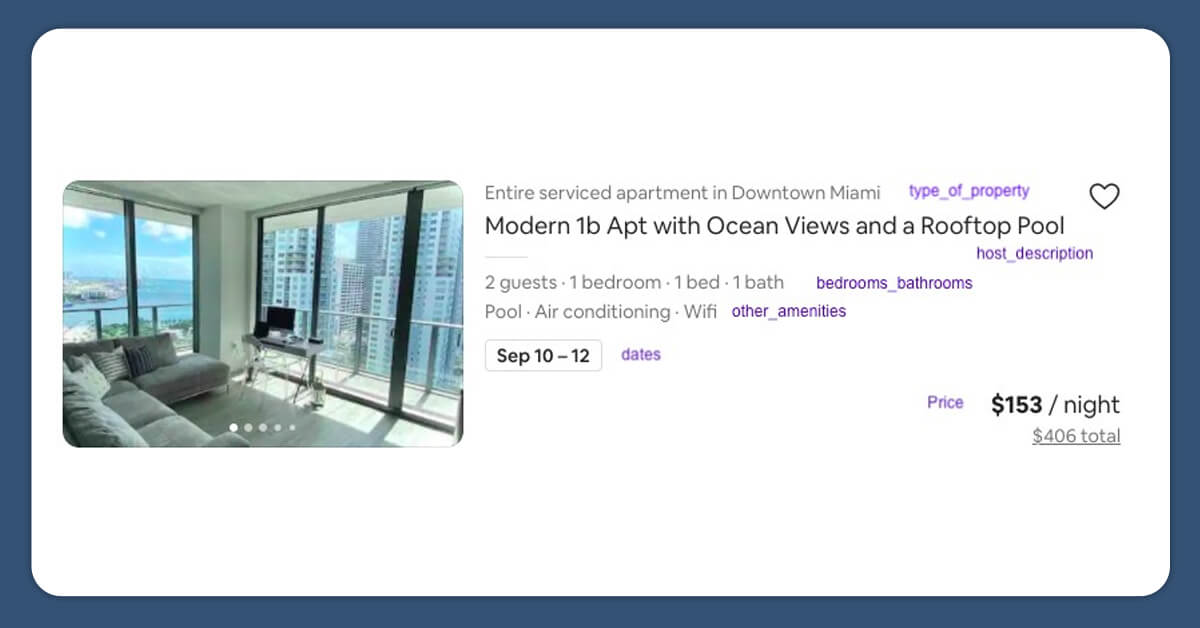

Take a look at all the data that a single post provides.

Six columns are filled with the data from each post. (The post shown above is not in the dataset.)

Let's go through the procedure that the scraping_aribnb() method follows, in plain English:

scraping airbnb posts:
    maximize the window
    src = get current page source (HTML code)
    soup = BeautifulSoup(src, features='lxml')
    list_of_houses = with the BeautifulSoup object, find the class
                     containing all the posts with all their information

    # list_type_property
    type_of_properties = list_of_houses.find (all) the class
                         containing all types of properties
    list_type_property = [i.text for i in type_of_properties]

    # list_host_description
    host_description = list_of_houses.find (all) the class
                       containing all host descriptions
    list_host_description = [i.text for i in host_description]

    # bedrooms_bathrooms, other_amenities
    number_of_bedrooms_bathrooms = list_of_houses.find (all) the class
                                   containing all the amenities
    list_bedrooms_bathrooms = [i.text for i in number_of_bedrooms_bathrooms]
    There are two types of amenities: bedrooms and bathrooms, and others
    bedrooms_bathrooms = list_bedrooms_bathrooms[::2]
    other_amenities = list_bedrooms_bathrooms[1::2]

    # list_prices
    prices = list_of_houses.find (all) the class
             containing all the prices
    list_prices = [i.text for i in prices]

    put each list into a dictionary and then put it into a data frame
    save it
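The slicing step relies on the bedroom/bathroom text and the amenities text alternating in one list, and Pandas raises a ValueError when a DataFrame is built from lists of different lengths. The following sketch shows both points with plain Python lists. The sample strings and the helper names `split_interleaved` and `column_lengths_match` are made up for illustration.

```python
def split_interleaved(items):
    """Split [a0, b0, a1, b1, ...] into ([a0, a1, ...], [b0, b1, ...])."""
    return items[::2], items[1::2]


def column_lengths_match(columns):
    """Return True when every list in the dict has the same length."""
    return len({len(values) for values in columns.values()}) <= 1


# Made-up sample in the order the scraper sees it: rooms, amenities, rooms, ...
scraped = [
    "2 guests, 1 bedroom, 1 bath",
    "Wifi, Kitchen",
    "4 guests, 2 bedrooms, 1 bath",
    "Wifi, Air conditioning",
]

rooms, amenities = split_interleaved(scraped)
columns = {"Bedrooms & bathrooms": rooms, "Other amenities": amenities}

print(rooms)
print(amenities)
print(column_lengths_match(columns))
```

Running a check like `column_lengths_match` before calling `pd.DataFrame` gives a clearer signal when one of the scraped classes is missing from some posts.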

We Have a Good Dataset. What Next?

Now we need to know how much the user is willing to pay for housing. Based on that amount, we filter the dataset and send the user the most affordable options.

We created a traveler.py file that takes the user's inputs and filters the dataset. We decided to do this in a separate file.

Go through the code and read the comments to understand more.

import pandas as pd

import smtplib

from email.mime.multipart import MIMEMultipart

from email.mime.application import MIMEApplication

from email.mime.text import MIMEText

from password import password

class Traveler:

# Email Address so user can received the filtered data

# Stay: checks if it will be a week, month or weekend

def __init__(self, email, stay):

self.email = email

self.stay = stay

# This functtion creates a new csv file based on the options

# that the user can afford

def price_filter(self, amount):

# The user will stay a month

if self.stay in ['Month', 'month']:

data = pd.read_csv('Airbnb_data.csv')

# Monthly prices are usually over a $1,000.

# Airbnb includes a comma in thousands making it hard to transform it

# from string to int.

# This will create a column that takes only the digits

# For example: $1,600 / month, this slicing will only take 1,600

data['cleaned price'] = data['Price'].str[1:6]

# list comp to replace every comma of every row with an empty space

_l = [i.replace(',', '') for i in data['cleaned price']]

data['cleaned price'] = _l

# Once we got rid of commas, we convert every row to an int value

int_ = [int(i) for i in data['cleaned price']]

data['cleaned price'] = int_

# We look for prices that are within the user's range

# and save that to a new csv file

result = data[data['cleaned price'] <= amount]

return result.to_csv('filtered_data.csv', index=False)

# The user will stay a weekend

elif self.stay in ['Weekend', 'weekend', 'week', 'Week']:

data = pd.read_csv('Airbnb_data.csv')

# Prices per night are usually between 2 and 3 digits. Example: $50 or $100

# This will create a column that takes only the digits

# For example: $80 / night, this slicing will only take 80

data['cleaned price'] = data['Price'].str[1:4]

# This time I used the map() instead of list comp but it does the same thing.

data['cleaned price'] = list(map(int, data['cleaned price']))

# We look for prices that are within the user's range

# and save that to a new csv file

filtered_data = data[data['cleaned price'] <= amount]

return filtered_data.to_csv('filtered_data.csv', index=False)

else:

pass

def send_mail(self):

# Create a multipart message

# It takes the message body, subject, sender, receiver

msg = MIMEMultipart()

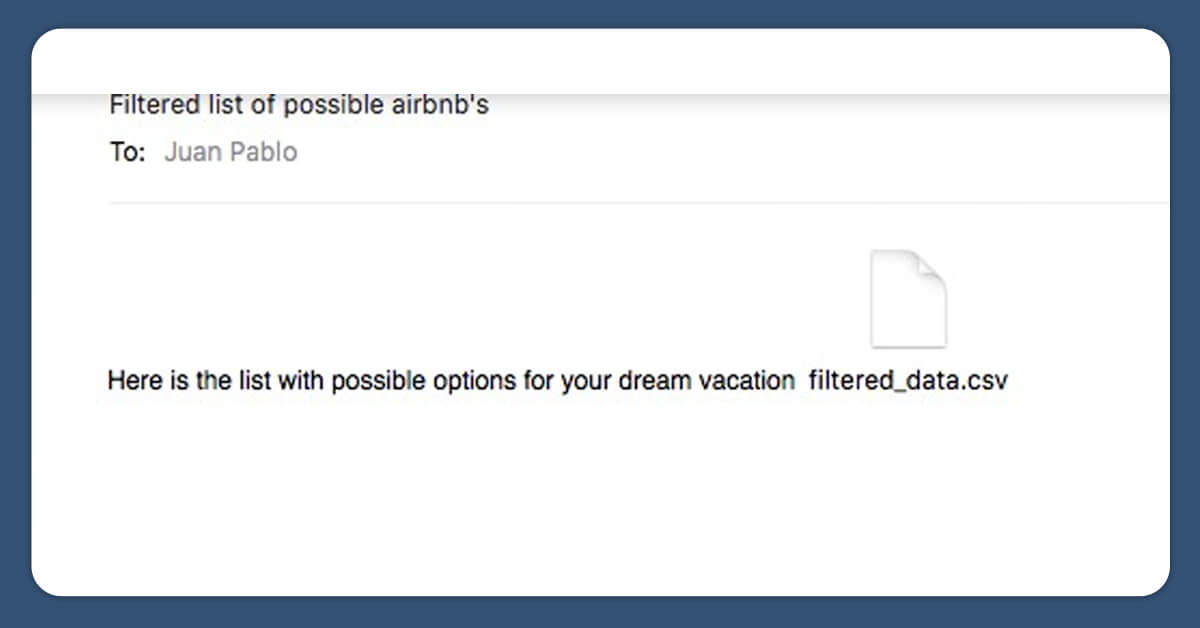

MESSAGE_BODY = 'Here is the list with possible options for your dream vacation'

body_part = MIMEText(MESSAGE_BODY, 'plain')

msg['Subject'] = "Filtered list of possible airbnb's"

msg['From'] = 'projects.creativity.growth@gmail.com'

msg['To'] = self.email

# Attaching the body part to the message

msg.attach(body_part)

# open and read the CSV file in binary

with open('filtered_data.csv','rb') as file:

# Attach the file with filename to the email

msg.attach(MIMEApplication(file.read(), Name='filtered_data.csv'))

# Create SMTP object

smtp_obj = smtplib.SMTP('smtp.gmail.com', 587)

smtp_obj.starttls()

# Login to the server, email and password of the sender

smtp_obj.login('projects.creativity.growth@gmail.com', password)

# Convert the message to a string and send it

smtp_obj.sendmail(msg['From'], msg['To'], msg.as_string())

smtp_obj.quit()

if __name__ == "__main__":

my_traveler = Traveler( 'juanpablacho19@gmail.com', 'week' )

my_traveler.price_filter(80)

my_traveler.send_mail()

The Traveler class has two methods: price_filter(amount) and send_mail().

price_filter(amount)

This method takes the amount of money the user is willing to spend, filters the dataset down to the listings priced at or below that amount, and writes the result to a new CSV file (filtered_data.csv).
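The fixed slices in price_filter (`str[1:6]` and `str[1:4]`) assume a particular number of digits. For example, "$900 / month" sliced with `[1:6]` gives "900 /", which `int()` rejects. A regular expression avoids that assumption. This is a minimal sketch using plain dictionaries instead of a DataFrame so it runs with the standard library only; `parse_price`, `within_budget`, and the sample listings are hypothetical.

```python
import re


def parse_price(text):
    """Return the first dollar amount in text as an int, or None if absent."""
    match = re.search(r"\$\s*([\d,]+)", text)
    if match is None:
        return None
    digits = match.group(1).replace(",", "")
    return int(digits) if digits else None


def within_budget(rows, amount):
    """Keep the rows whose 'Price' parses to a value at or below amount."""
    kept = []
    for row in rows:
        price = parse_price(row["Price"])
        if price is not None and price <= amount:
            kept.append(row)
    return kept


# Made-up listings in the same "Price" format that the scraper stores.
listings = [
    {"Type": "Entire loft", "Price": "$80 / night"},
    {"Type": "Private room", "Price": "$1,600 / month"},
    {"Type": "Shared room", "Price": "$900 / month"},
]

print([row["Type"] for row in within_budget(listings, 1000)])
```

The same `parse_price` function could be applied to the `Price` column with `data['Price'].map(parse_price)`, which would let the month and week branches share one code path.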

send_mail()

This method uses the smtplib library to send the user an email with the filtered CSV file attached. The message body of the email is “Here is the list with possible options for your dream vacation”. The email is sent automatically when traveler.py is run.
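To see what the message looks like before anything is sent, it can be built and inspected offline. The sketch below uses the standard library's `email.message.EmailMessage` class rather than the MIME classes from the listing, and it deliberately stops before the SMTP step. The addresses, the CSV bytes, and the name `build_message` are placeholders for illustration.

```python
from email.message import EmailMessage


def build_message(sender, recipient, csv_bytes, filename="filtered_data.csv"):
    """Build the email with a plain-text body and one CSV attachment."""
    msg = EmailMessage()
    msg["Subject"] = "Filtered list of possible airbnb's"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content("Here is the list with possible options for your dream vacation")
    # application/octet-stream mirrors the default of MIMEApplication.
    msg.add_attachment(
        csv_bytes,
        maintype="application",
        subtype="octet-stream",
        filename=filename,
    )
    return msg


# Placeholder addresses and CSV content, for illustration only.
message = build_message(
    "sender@example.com",
    "traveler@example.com",
    b"Type,Price\nEntire loft,$80 / night\n",
)
attachment = next(message.iter_attachments())

print(message["To"])
print(attachment.get_filename())
print(message.is_multipart())
```

A message built this way can be passed to `smtplib.SMTP.send_message()` after `starttls()` and `login()`, in place of the `sendmail()` call used in the listing.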

How to Run?

Run airbnb_scrapy.py first so that it collects the most recent data, then run traveler.py to filter the data and send the email.

python airbnb_scrapy.py

python traveler.py
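If you prefer a single entry point, the two commands can be wrapped in a small runner. This is only a sketch, assuming both files sit in the current folder; `run_pipeline` and its `runner` parameter are hypothetical and exist mainly so the order can be checked without launching a browser.

```python
import subprocess
import sys

# Order matters: scrape first, then filter and email.
SCRIPTS = ["airbnb_scrapy.py", "traveler.py"]


def run_pipeline(scripts=SCRIPTS, runner=subprocess.run):
    """Run each script with the current interpreter, stopping on the first failure."""
    commands = []
    for script in scripts:
        command = [sys.executable, script]
        # check=True makes subprocess.run raise CalledProcessError on a
        # non-zero exit code, so traveler.py never runs on stale data.
        runner(command, check=True)
        commands.append(command)
    return commands
```

Calling `run_pipeline()` from the project folder runs both files in order with the same Python interpreter.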

Conclusion

This is another example of how powerful Python is. This project gives you a good understanding of web scraping with BeautifulSoup, data cleaning and analysis with Pandas, browser automation with Selenium, and email automation with smtplib.

As a data analyst, you will not always get nicely formatted data, and the company you need data from might not offer an API, so at times you will need web scraping skills to collect data.

If you have ideas about how the code could be improved, or if you want to extend the project, feel free to contact us!