In the era of big data, companies recognize the importance of the e-commerce business. Photos, product IDs, product characteristics, brands, and many other data points are incredibly helpful for a variety of applications, but they are also difficult to obtain. To gain a competitive advantage over other providers, companies leverage product data feeds from e-commerce sites. Despite retailers’ efforts to prevent it, scraping these feeds is one of the most dependable and straightforward ways to keep track of your competitors and the market.

The following are the most popular use cases for e-commerce product feeds:

- Price monitoring (comparing prices across websites and offering the best deals)

- Site affiliation (using product content to drive search-engine traffic to an affiliate site in exchange for commission)

- Policy development (web scraping plays an important role by supplying precise, ready-to-use data)

- Decision making (analyzing the collected information makes informed decisions possible)
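The price-monitoring use case above can be sketched in a few lines. This is a minimal illustration, not a production comparator: the function name `best_deal` and the site names in the usage note are hypothetical, and the prices are assumed to be already scraped and expressed in the same currency.

```python
from typing import Dict, Optional, Tuple


def best_deal(prices: Dict[str, float]) -> Optional[Tuple[str, float]]:
    """Return (site, price) for the cheapest offer, or None if no prices exist.

    `prices` maps a site name to the price scraped for the same product.
    """
    if not prices:
        return None
    # min() over the keys, ordered by their price; ties keep the first site seen.
    site = min(prices, key=prices.get)
    return site, prices[site]
```

For example, `best_deal({"shop-a": 12.0, "shop-b": 9.5})` picks out `shop-b` as the cheapest offer.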

The most common data scraped from e-commerce websites are:

- Price

- Product name

- Product type

- Product details

- Customer reviews

- Customer ratings

- Product ranks

- Manufacturer and brand

- Deals and discounts
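The fields listed above map naturally onto a single record type. The sketch below is one possible layout, assuming Python dataclasses; the class name `ProductRecord`, every field name, and the `discount_percent` helper are illustrative choices rather than a standard schema.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ProductRecord:
    """One scraped product listing; all field names are illustrative."""
    name: str
    price: float                         # current selling price
    currency: str = "USD"
    product_type: Optional[str] = None
    details: Optional[str] = None
    brand: Optional[str] = None
    manufacturer: Optional[str] = None
    rating: Optional[float] = None       # average customer rating
    rank: Optional[int] = None           # e.g. position in a category ranking
    list_price: Optional[float] = None   # price before any deal or discount
    reviews: List[str] = field(default_factory=list)

    def discount_percent(self) -> float:
        """Percentage off the list price, or 0.0 when no deal applies."""
        if not self.list_price or self.list_price <= self.price:
            return 0.0
        return round(100 * (self.list_price - self.price) / self.list_price, 2)
```

Keeping the optional fields as `None` by default lets a scraper store partial records when a page does not expose every attribute.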

Let us discuss three large e-commerce companies that you can scrape and the measures they are taking to limit the number of web harvesters.

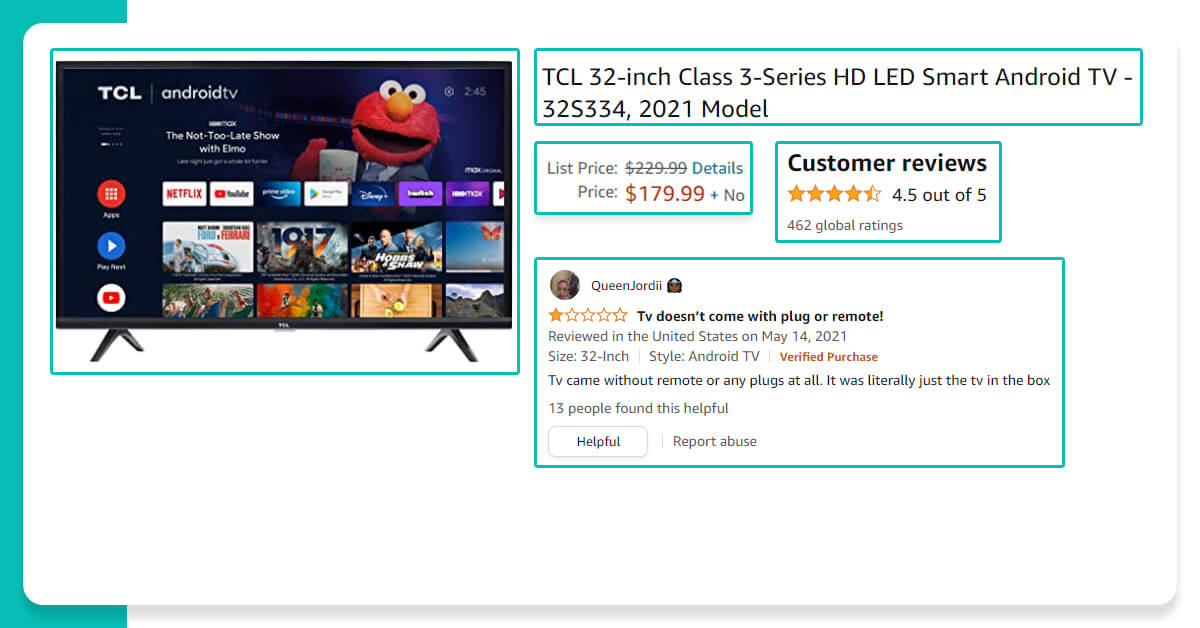

Amazon

Amazon is the world’s largest e-commerce website, offering millions of products for sale. Its information is used for a variety of purposes. When scraping a website as large as Amazon, the actual web scraping (making queries and parsing HTML) becomes a minimal portion of your application. Rather, a significant amount of the work goes into determining how to run the entire crawl smoothly and efficiently. Anybody who has attempted to scrape data from Amazon knows how difficult it is.

The scraper must work efficiently to meet your requirements. The level of difficulty in retrieving data is determined by Amazon’s anti-scraping algorithms.

Although there are several methods to filter bots at the application level, Amazon appears to use an IP-based CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart) the majority of the time. This means that if you download a large number of pages from the same IP address at a high rate, Amazon will prompt you to complete a CAPTCHA.
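Since the trigger described here is request rate per IP address, a crawler usually has to slow down when a challenge page appears. The following is a rough sketch of that idea, assuming a simple exponential backoff; the marker string `"captcha"`, the function names, and the default delays are all assumptions for illustration and do not reflect how Amazon actually labels or times its challenges.

```python
from typing import Sequence


def looks_like_captcha(html: str, markers: Sequence[str] = ("captcha",)) -> bool:
    """Heuristic check: does the page text contain any challenge marker?

    The default marker is an assumption; real challenge pages may differ.
    """
    lowered = html.lower()
    return any(marker in lowered for marker in markers)


def next_delay(current: float, blocked: bool,
               base: float = 1.0, cap: float = 300.0) -> float:
    """Return the delay (seconds) to wait before the next request.

    Doubles the delay after a challenge (up to `cap`) and halves it
    after a normal response (never below `base`).
    """
    if blocked:
        return min(max(current, base) * 2, cap)
    return max(base, current / 2)
```

A crawl loop would call `looks_like_captcha` on each response and feed the result into `next_delay` before sleeping, so the request rate from a single IP address drops as soon as a challenge is seen.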

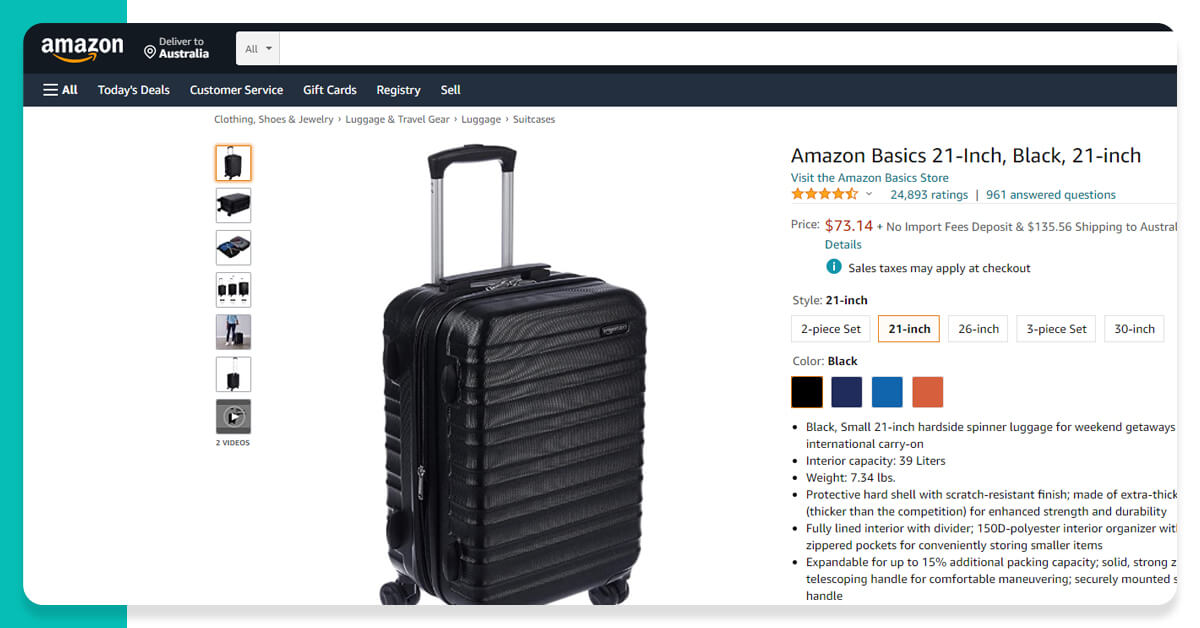

Walmart

Walmart is the world’s largest retailer, with over 10,000 locations worldwide and yearly revenues approaching USD 500 billion. However, its web business is only a minor fraction of the whole picture. Walmart is emphasizing its e-commerce business to maintain stable sales growth in the coming years, as it is approaching saturation in the United States due to its extensive presence.

This, in itself, is a significant draw for web-harvesting companies and individuals. Web harvesters are usually looking for the following information when they scrape Walmart for data:

- Details about a product that aren’t available through an official product API

- Keeping an eye on an item’s price, inventory count/availability, quality, and other factors

- Examining how a specific brand is sold on Walmart

- Monitoring Walmart Marketplace sellers

- Monitoring Walmart product reviews

Like Amazon, Walmart’s website employs bot-detection systems. Traffic analysis, identification of known bots, and machine learning that predicts expected site visitor behavior are all critical components of the methodology Walmart employs to protect its data.

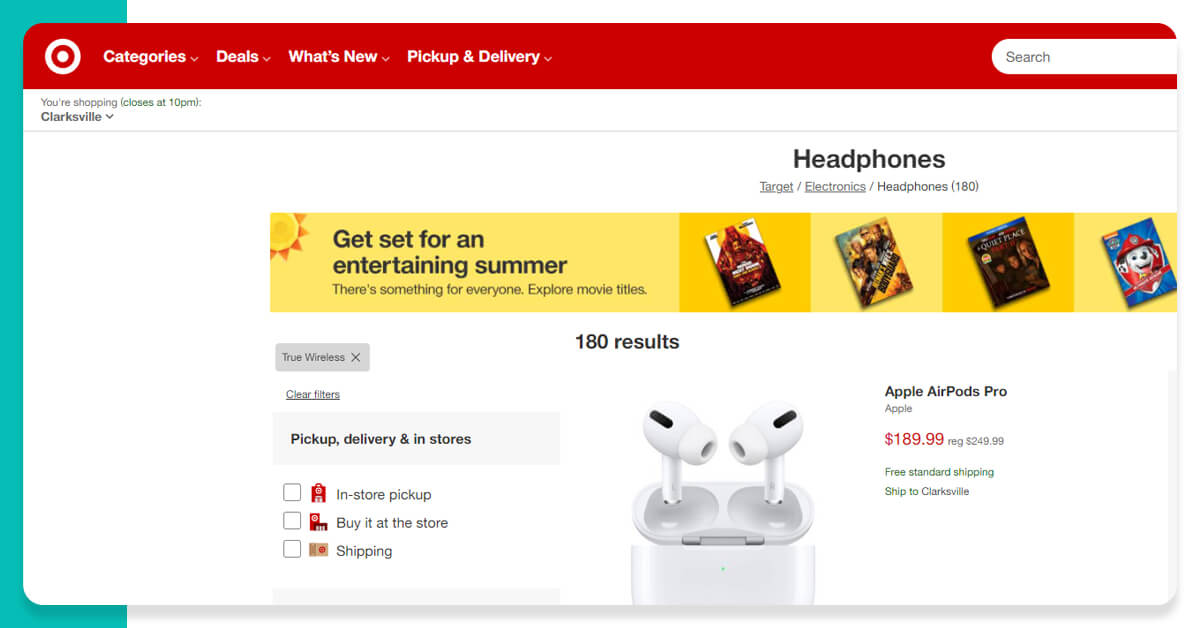

Target

Target.com began collaborating with Amazon.com in 2001. The e-commerce giant’s infrastructure powered the Target.com website, while Amazon.com managed almost all call center and fulfillment functions.

However, much has changed since 2001. E-commerce has advanced substantially, and in 2011 Target rightly recognized the significance of a multi-channel approach and brought its e-commerce operations in-house. From that point, its sale items and limited-time deals, which are like candy to web scrapers, made it another obvious target for web data harvesters.

Target.com also safeguards its information by enforcing rate limits, identifying suspicious activity, employing captcha, and temporarily blocking IP addresses.
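To make the combination of rate limits and temporary IP blocks concrete, here is a minimal sliding-window sketch. It is a generic illustration, not Target’s actual implementation: the class name `IpRateLimiter`, its parameters, and the thresholds in the usage note are all hypothetical.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class IpRateLimiter:
    """Allow at most `max_requests` per `window_seconds` for each IP.

    An IP that exceeds the limit is blocked for `block_seconds`.
    Timestamps are passed in explicitly so the logic is easy to test.
    """

    def __init__(self, max_requests: int, window_seconds: float,
                 block_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.block_seconds = block_seconds
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)
        self._blocked_until: Dict[str, float] = {}

    def allow(self, ip: str, now: float) -> bool:
        # Reject immediately while a temporary block is still active.
        if now < self._blocked_until.get(ip, 0.0):
            return False
        hits = self._hits[ip]
        # Drop timestamps that have fallen out of the sliding window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            # Limit exceeded: start a temporary block for this IP.
            self._blocked_until[ip] = now + self.block_seconds
            hits.clear()
            return False
        hits.append(now)
        return True
```

With `IpRateLimiter(max_requests=2, window_seconds=10.0, block_seconds=60.0)`, a third request from the same address inside ten seconds is refused and the address stays blocked for the next minute, while other addresses are unaffected.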

Web harvesters can readily scrape pricing and product information from target.com using a variety of extraction tools. The aggregated data is then fed into an analytical engine that allows competitors to match prices and products in real time.

Furthermore, competing bots frequently add products to carts, building hundreds of carts only to abandon them. Such fraudulent behavior reduces the available inventory in real time and shows items as out of stock to genuine buyers.

To summarize, in a perfect e-commerce world, every store would have an API with which scrapers could communicate. It would be instantaneous, up to date, and capable of supporting a wide range of levels of complexity.

Because there would be a universal standard supported by all retailers, it would be simple and intuitive to use. However, e-commerce businesses are very protective of their own information for understandable reasons, and web scrapers find new ways of extracting the desired data every day, causing websites to create new ways to protect it.

Are you looking for a solution for continuous monitoring of e-commerce websites? Contact X-Byte Enterprise Crawling today.