Because of the enormous amount of data available on websites, they are a valuable source of information in areas where organized, easy-to-use datasets are lacking. Many businesses use web scraping to improve their services and operations, including price comparison websites like Trivago and job boards like Indeed.

The data on most websites is often difficult to collect in bulk. Although websites aren't designed to be scraped, several tools make it possible to gather their data into datasets for analysis and visualization.

What is Web Scraping?

Web scraping is a method for extracting information from websites in a targeted, automated manner. Although it can be done manually, automating the process reduces the risk of human error and lets you gather large volumes of data in a fraction of the time. It allows you to collect non-tabular or poorly structured information from websites and convert it into a usable, structured format, such as a .csv file or spreadsheet, for archiving or analysis.
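
As a minimal sketch of that conversion, the snippet below uses only Python's standard library (`html.parser` and `csv`) to turn a few hypothetical property listings into CSV text. The markup and the class names `listing`, `title`, and `price` are invented for illustration, and the parser assumes flat markup with no tags nested inside the fields.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical page fragment: the class names "listing", "title" and "price" are invented
SAMPLE = """
<div class="listing"><h2 class="title">Flat A</h2><span class="price">250000</span></div>
<div class="listing"><h2 class="title">House B</h2><span class="price">410000</span></div>
"""


class ListingParser(HTMLParser):
    """Collect one dict per "listing" element, reading the text of its title and price.

    Assumes flat markup: any closing tag ends the field currently being read.
    """

    FIELDS = ("title", "price")

    def __init__(self):
        super().__init__()
        self.field = None  # field whose text is currently being read, if any
        self.rows = []     # one dict per listing found so far

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "listing" in classes:
            self.rows.append({})
        for name in self.FIELDS:
            if name in classes:
                self.field = name

    def handle_endtag(self, tag):
        self.field = None

    def handle_data(self, data):
        if self.field and self.rows:
            row = self.rows[-1]
            row[self.field] = row.get(self.field, "") + data.strip()


def rows_to_csv(rows, fields):
    """Serialise a list of dicts as CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fields)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


parser = ListingParser()
parser.feed(SAMPLE)
parser.close()
csv_text = rows_to_csv(parser.rows, ListingParser.FIELDS)
print(csv_text)
```

To write a file instead of a string, open it with `newline=""` and hand the file object to `csv.DictWriter`.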

Web scraping can be used for a variety of purposes in research and journalism, including:

- Collecting data from real estate websites to detect changes in the real estate market

- Gathering online article comments and other discussions for research (e.g. using text mining)

- Collecting information on the membership and activities of online organizations

- Collecting reports from a variety of web pages

Project Goals

The goal of the project was to build a dashboard from the reviews of a company (here, aardy.com) on the consumer review website Trustpilot. This required a number of steps:

- Examine the webpage to determine its HTML structure.

- Use BeautifulSoup to loop through the elements of the webpage and save them in a DataFrame.

- Upload the data into Elasticsearch using Elasticsearch's Python client.

- Create the dashboard in Kibana, Elastic's dashboarding software.

Inspecting the Webpage

To inspect the webpage in Google Chrome, right-click anywhere on the page and select "View page source", which opens the HTML code for the page in a new tab.

To narrow things down to the significant sections, namely the review blocks, you can copy a username from the rendered page and search for it in the HTML source, which leads straight to the HTML code for the reviews.
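
The same search can also be done in code. The sketch below, which uses only the standard library, finds a username in the raw HTML and returns the nearest enclosing `<article>` block. The helper name `enclosing_block`, the sample markup, and the usernames are hypothetical, and the approach assumes `<article>` elements are not nested inside each other.

```python
def enclosing_block(html, needle, tag="article"):
    """Return the text from the nearest <tag ...> before `needle` to the nearest </tag> after it.

    Returns None if `needle` or either tag boundary is missing.
    Assumes `tag` elements are not nested inside each other.
    """
    pos = html.find(needle)
    if pos == -1:
        return None
    start = html.rfind("<" + tag, 0, pos)
    close = "</" + tag + ">"
    end = html.find(close, pos)
    if start == -1 or end == -1:
        return None
    return html[start:end + len(close)]


# Hypothetical page source with two review blocks
page = (
    '<html><article class="review"><div>Jane D</div></article>'
    '<article class="review"><div>Sam K</div></article></html>'
)
print(enclosing_block(page, "Sam K"))
```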

Figure 2 shows how this helped me focus on the code for the reviews: each review block is enclosed in an `<article class="review">` element, and the review's content is stored as JSON in a `<script type="application/json" data-initial-state="review-info">` tag at the top of the block. With this information, I could begin extracting the data in Python.
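
Based on that structure, a minimal sketch of pulling the JSON out of each review block with BeautifulSoup might look like the following. The sample markup is a simplified, hypothetical stand-in for the real page (the real markup may have changed since); only the field names `stars` and `reviewHeader` are taken from the scraping code later in this article. The helper name `review_infos` is illustrative.

```python
import json

from bs4 import BeautifulSoup

# Simplified, hypothetical markup modelled on the structure described above
SAMPLE_HTML = """
<article class="review">
  <script type="application/json" data-initial-state="review-info">
    {"stars": 4, "reviewHeader": "Quick claim payout"}
  </script>
  <p>Review body</p>
</article>
<article class="review"><p>A block without embedded JSON</p></article>
"""


def review_infos(html):
    """Return the parsed review-info JSON of every <article class="review"> that has one."""
    soup = BeautifulSoup(html, "html.parser")
    infos = []
    for article in soup.find_all("article", class_="review"):
        script = article.find("script", attrs={"data-initial-state": "review-info"})
        if script is not None and script.string:
            infos.append(json.loads(script.string))
    return infos


print(review_infos(SAMPLE_HTML))
```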

Parsing the Web Page using BeautifulSoup

Beautiful Soup is a Python package that makes scraping data from web pages straightforward. It sits on top of an HTML or XML parser and offers Pythonic idioms for iterating over, searching, and modifying the parse tree. It can extract all of the text from a page's HTML tags as well as pull out its title and links.
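
A small illustration of those capabilities, run against a hypothetical HTML string rather than a live page (the title and link are invented):

```python
from bs4 import BeautifulSoup

# Hypothetical page
html = """<html><head><title>Aardy reviews</title></head>
<body><a href="/review/aardy.com?page=2">Next page</a><a>no link</a>
<p>Great <b>service</b>!</p></body></html>"""

soup = BeautifulSoup(html, "html.parser")

title = soup.title.string                                 # text of the <title> tag
links = [a["href"] for a in soup.find_all("a", href=True)]  # only <a> tags that have an href
text = soup.get_text(" ", strip=True)                     # all text, whitespace-trimmed, space-joined

print(title)
print(links)
print(text)
```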

```python
import json

import requests
from bs4 import BeautifulSoup

# Empty lists that will hold one value per review
allUsers, allRatings, allLocations, allDates, allReviewContent = [], [], [], [], []

# Loop through each of the 276 pages of reviews (range() excludes its end value)
for i in range(1, 277):
    url = "https://www.trustpilot.com/review/aardy.com?page={}".format(i)
    result = requests.get(url, timeout=30)
    soup = BeautifulSoup(result.content, "html.parser")

    reviews = soup.find_all('article', class_='review')
    for review in reviews:
        details = review('aside')[0]('a')[0]('div', class_='consumer-information__details')[0]

        # Customer name
        allUsers.append(details('div', class_='consumer-information__name')[0].string)

        # Convert the dictionary outputted as a string to a Python dictionary using the json module
        reviewDic = json.loads(review('script')[0].string)
        allRatings.append(reviewDic['stars'])

        # Not every customer gives a location, so check the element exists before indexing into it
        location = details('div', class_='consumer-information__data')[0]('div', class_='consumer-information__location')
        if location:
            allLocations.append(location[0]('span')[0].string)
        else:
            allLocations.append("")

        # The date sits in a <script> inside the header block; no need to spell out the full hierarchy
        datesDic = json.loads(review('div', class_='review-content-header__dates')[0]('script')[0].string)
        allDates.append(datesDic['publishedDate'])

        allReviewContent.append(reviewDic['reviewHeader'])
```

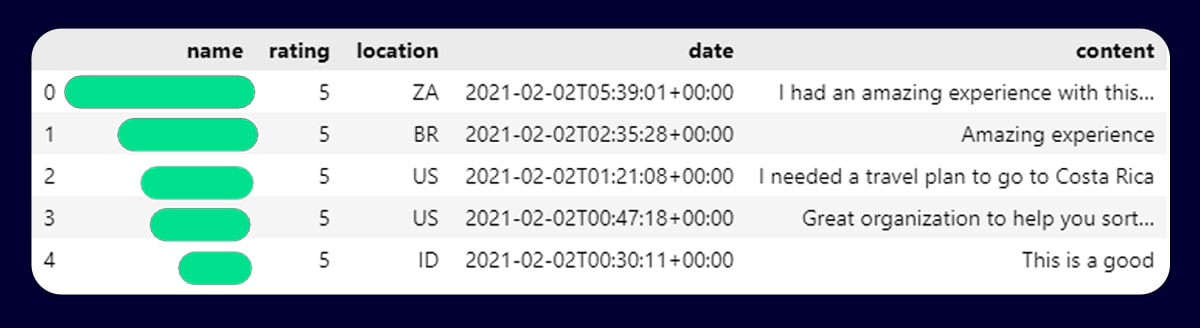

After initializing several empty lists to store data such as locations, ratings, and content, you can construct a for loop to scrape all 276 pages of reviews for the company. The content of each page is stored in a BeautifulSoup object, and the find_all method then returns a list of Tag objects that match the given criteria, in this case all `article` elements with the class `review`.
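
One detail worth checking in such a loop is the page range: Python's `range()` excludes its end value, so covering pages 1 to 276 needs `range(1, 277)`. The sketch below builds the page URLs and fetches them one at a time. The helper names, the one-second pause between requests, and the use of `urllib` instead of `requests` are illustrative assumptions; the `fetch` function can be swapped out, for example in tests.

```python
import time
from urllib.request import urlopen

BASE_URL = "https://www.trustpilot.com/review/aardy.com?page={}"


def page_urls(n_pages, base=BASE_URL):
    """Return the URLs of pages 1..n_pages inclusive."""
    return [base.format(i) for i in range(1, n_pages + 1)]


def default_fetch(url):
    """Download a page with the standard library (30-second timeout)."""
    with urlopen(url, timeout=30) as response:
        return response.read().decode("utf-8")


def fetch_pages(n_pages, fetch=default_fetch, delay=1.0):
    """Yield (url, html) for each page, pausing `delay` seconds between requests."""
    for url in page_urls(n_pages):
        yield url, fetch(url)
        time.sleep(delay)
```

Each yielded `html` string can then be passed to `BeautifulSoup(html, "html.parser")` exactly as in the loop above.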

After obtaining the customer's name (which was unnecessary in hindsight, due to the anonymization of the data), you can use the json module to parse the rating, which runs from 1 to 5 stars, and append it to the allRatings list. You might get an IndexError while running the code: some investigation showed that not all customers give their location, so an if statement is needed to check that the "consumer-information__location" element exists before reading it. Once the customer's name, rating, and location are collected, there is no need to specify every tag level by level. As the datesDic line, which stores the date the review was written, shows, you can simply name the class you are interested in without including the hierarchy above it. The final step is to extract the review itself, here the reviewHeader field of the same JSON.
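
BeautifulSoup's CSS selector support offers a compact alternative to both the level-by-level indexing and the emptiness check. In the sketch below (the helper name `location_of` and the sample markup are hypothetical), `select_one()` returns `None` when nothing matches, so a missing location becomes an empty string instead of an IndexError, and the descendant selector skips the intermediate levels of the hierarchy.

```python
from bs4 import BeautifulSoup


def location_of(review):
    """Return the reviewer's location text, or "" when the review has none."""
    span = review.select_one(".consumer-information__location span")
    return span.get_text(strip=True) if span is not None else ""


# Hypothetical markup: the second review has no location element
html = """
<article class="review"><div class="consumer-information__location"><span> GB </span></div></article>
<article class="review"><div class="consumer-information__name">No location</div></article>
"""
soup = BeautifulSoup(html, "html.parser")
locations = [location_of(r) for r in soup.find_all("article", class_="review")]
print(locations)
```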

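```python
import pandas as pd

# One row per review
review_data = pd.DataFrame(
    {
        'name': allUsers,
        'rating': allRatings,
        'location': allLocations,
        'date': allDates,
        'content': allReviewContent,
    })

# Remove line breaks from the text fields
review_data = review_data.replace('\n', '', regex=True)

# Turn the timestamp string into a date: swap the "T" separator for a space, drop the last
# 14 characters (for an assumed timestamp like "2020-05-04T14:43:37.000Z" this keeps
# "2020-05-04"), then parse the result into a datetime
review_data['date'] = review_data['date'].str.replace('T', ' ')
review_data['date'] = review_data['date'].map(lambda x: str(x)[:-14])
review_data['date'] = pd.to_datetime(review_data['date'])
```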

After saving the data in a pandas DataFrame, removing line breaks, and converting the date from a string to a datetime object, the data was ready to be imported into Elasticsearch.
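
As an alternative to trimming the string by position, pandas can parse ISO 8601 timestamps directly. The sketch below assumes the dates look like the hypothetical values shown (with a trailing `Z` for UTC). It also replaces line breaks with a space rather than deleting them, so that words on either side of a break are not glued together.

```python
import pandas as pd

# Hypothetical raw values in an ISO 8601 shape
raw = pd.DataFrame({
    "content": ["Great\nservice", "Slow claim"],
    "date": ["2020-03-15T10:23:45.000Z", "2020-03-16T08:00:00.000Z"],
})

clean = raw.replace("\n", " ", regex=True)                 # line break -> space
clean["date"] = pd.to_datetime(clean["date"], utc=True)    # timezone-aware UTC timestamps
clean["day"] = clean["date"].dt.normalize()                # midnight of the same day, if only dates matter
print(clean)
```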

Importing the Information into Elasticsearch

Because Elasticsearch stores documents as JSON, each row of the data needs to be turned into a dictionary.
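
For illustration, `to_dict("records")` produces one dictionary per row, the shape the generator function expects. The hypothetical example also shows that the standard `json` module cannot serialize pandas Timestamps on its own. The Elasticsearch Python client's serializer converts datetime values to ISO strings itself, so this only matters if you dump the records with `json` yourself.

```python
import json

import pandas as pd

# Hypothetical cleaned data with some of the same column names as review_data
df = pd.DataFrame({
    "rating": [5, 2],
    "location": ["GB", ""],
    "date": pd.to_datetime(["2020-03-15", "2020-03-16"]),
})

records = df.to_dict("records")  # one dict per row

# json.dumps(records[0]) alone would raise TypeError (e.g. on the Timestamp);
# default=str falls back to str() for values json cannot handle
payload = json.dumps(records[0], default=str)
print(payload)
```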

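```python
def generator(data):
    # Turn each review record into a bulk "index" action for Elasticsearch
    for line in data:
        yield {
            '_index': 'trustpilot',
            # No '_type' (mapping types are gone in Elasticsearch 8) and no '_id'
            # (the records have no id field, so Elasticsearch generates one)
            '_source': {
                'name': line.get('name', ''),
                'rating': line.get('rating', ''),
                'location': line.get('location', ''),
                'date': line.get('date', ''),
                'content': line.get('content', None),
            },
        }
    # The loop ending is enough to stop the generator. An explicit "raise StopIteration"
    # here is turned into a RuntimeError on Python 3.7+ (PEP 479).
```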

Python's generator functions help here. A generator produces its items lazily, one at a time, instead of building the whole result in memory at once, which is useful when dealing with tens of thousands of reviews. Generator functions use the yield expression instead of the return statement used in conventional functions; when the function yields, it is paused rather than terminated, and control passes back to the caller, which can resume it later. In this scenario, each yielded item is a dictionary containing the review data we obtained, along with some basic Elasticsearch information (the target index).
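
The laziness is easy to observe. In the sketch below, a hypothetical source of a million records feeds a generator shaped like the one above (trimmed to two fields and renamed `actions`), yet taking the first three actions pulls only three records from the source. The second function shows why ending a generator with `raise StopIteration` is a problem: since Python 3.7 (PEP 479), that exception is converted into a RuntimeError inside a generator, so simply letting the loop finish is the correct way to end.

```python
from itertools import islice

consumed = []  # indices actually pulled from the source


def source(n=1_000_000):
    """Hypothetical record source that notes every record it hands out."""
    for i in range(n):
        consumed.append(i)
        yield {"rating": i % 5 + 1, "content": f"review {i}"}


def actions(data):
    """Yield one bulk action per record; the generator ends when the loop does."""
    for line in data:
        yield {
            "_index": "trustpilot",
            "_source": {"rating": line.get("rating", ""), "content": line.get("content")},
        }


first_three = list(islice(actions(source()), 3))
print(consumed)  # [0, 1, 2]


def old_style(data):
    for line in data:
        yield line
    raise StopIteration  # Python 3.7+ turns this into RuntimeError


try:
    list(old_style([1, 2]))
except RuntimeError as exc:
    print("RuntimeError:", exc)
```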

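```python
from elasticsearch import Elasticsearch, helpers

# Creating an Elasticsearch client (elasticsearch-py 8.x; 7.x clients take timeout= instead)
ENDPOINT = 'http://localhost:9200/'
es = Elasticsearch(ENDPOINT, request_timeout=600)
if not es.ping():
    raise ConnectionError(f"Could not reach Elasticsearch at {ENDPOINT}")

# Create the index once; skip it if it already exists
if not es.indices.exists(index='trustpilot'):
    es.indices.create(index='trustpilot')

# One dictionary per DataFrame row
review_dict = review_data.to_dict('records')

# Index everything in bulk and report failures instead of silently ignoring them
success, errors = helpers.bulk(es, generator(review_dict), raise_on_error=False)
print(f"Indexed {success} documents, {len(errors)} failed")
```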

Next, connect to the Elasticsearch instance running on the local machine. Elasticsearch's Python client makes this simple, and es.ping() just verifies that the connection has been established correctly. To hold all of the review data, you also need to create an index. Indices are Elasticsearch's way of organizing data, and they let the user segment data in a specific way. An index is roughly comparable to a table in a relational database (older explanations compared it to a whole database, with mapping types playing the role of tables, but mapping types have since been removed). Remember that the "_index" key in the generator function is set to "trustpilot", so this must be the name of the index created here.
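
Without explicit mappings, Elasticsearch guesses field types from the first documents it sees (strings become `text` with a `keyword` sub-field, for example). A minimal sketch of creating the index with explicit mappings instead is shown below. The field types are assumptions chosen to suit the dashboard (numeric `rating`, `keyword` location for per-country charts), the helper name `ensure_index` is hypothetical, and the `mappings=` argument follows the 8.x Python client (older clients pass `body={"mappings": ...}`). The function only needs an Elasticsearch client object, so it is not tied to a running cluster until it is called.

```python
# Assumed field types for the review documents
MAPPINGS = {
    "properties": {
        "name": {"type": "keyword"},
        "rating": {"type": "integer"},
        "location": {"type": "keyword"},
        "date": {"type": "date"},
        "content": {"type": "text"},
    }
}


def ensure_index(es, name="trustpilot", mappings=MAPPINGS):
    """Create the index with explicit mappings unless it already exists.

    `es` is an elasticsearch.Elasticsearch (8.x) client. Returns True if the index was created.
    """
    if es.indices.exists(index=name):
        return False
    es.indices.create(index=name, mappings=mappings)
    return True
```

With a real client this would be called as `ensure_index(es)` in place of the plain `es.indices.create(index='trustpilot')` call.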

The next step is to upload the data with the bulk() helper from the Elasticsearch Python client (helpers.bulk). It is one of the most efficient ways to index many documents: rather than sending one request per document, it groups the actions into chunks (500 by default) and sends each chunk to Elasticsearch's _bulk API in a single request. Combined with the generator, the actions are created only as they are needed, so the full set of requests never has to be held in memory.
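
To make the batching concrete, the sketch below builds `_bulk` request bodies by hand: newline-delimited JSON with one action line followed by one source line per document, and a trailing newline. It is a simplified illustration of the request format, not the client's own implementation; the function name `bulk_bodies` is hypothetical and only the `index` operation is covered.

```python
import json
from itertools import islice


def bulk_bodies(actions, chunk_size=500):
    """Group actions into _bulk request bodies: an action line plus a source line per document."""
    it = iter(actions)
    while True:
        chunk = list(islice(it, chunk_size))
        if not chunk:
            return
        lines = []
        for action in chunk:
            lines.append(json.dumps({"index": {"_index": action["_index"]}}))
            lines.append(json.dumps(action["_source"]))
        yield "\n".join(lines) + "\n"  # the _bulk API requires a final newline


docs = [{"_index": "trustpilot", "_source": {"rating": r}} for r in (5, 4, 3)]
for body in bulk_bodies(docs, chunk_size=2):
    print(body)
```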

Creating the Dashboard

Now that the data was in Elasticsearch, you can start visualizing it. Looking at the data in Figure 3, it was evident that the dashboard wouldn't be very comprehensive, as we were working with only four columns of information.

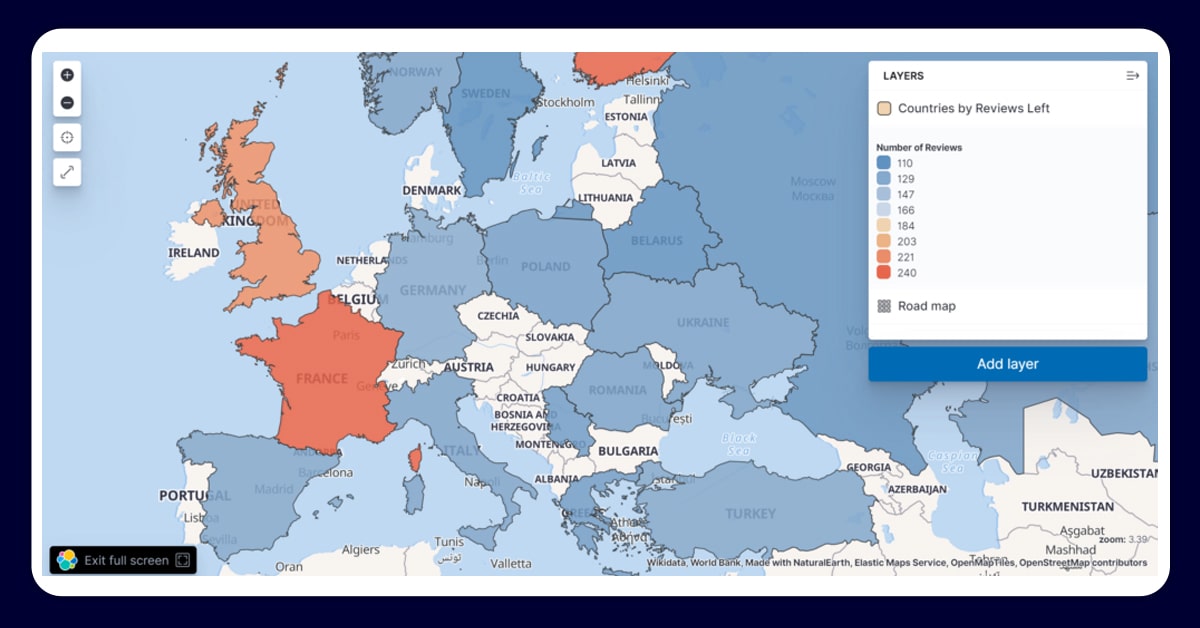

The first graph shows how the ratings in the reviews have varied over time; there is a modest decline overall, as well as a seasonal pattern with highs in the autumn and lows in early summer. Below it, a heatmap shows how the number of reviews varies by country. Because the countries extracted from the webpage were in ISO 3166-1 alpha-2 format, which Elasticsearch recognizes, it was easy to treat the location as a geographic field. Figure 5 shows how the map looks when zoomed in.

On the right-hand side of the dashboard, a donut chart shows the terms customers used most often; the results paint a largely positive picture of aardy.com. However, the bar chart below it, which shows the average rating for each country, reveals that the best average is just over 3.5, in Poland, which isn't especially impressive.
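
Kibana builds charts like these from Elasticsearch aggregations. As a sketch for cross-checking the numbers, the request body below asks for the average rating per country (a `terms` aggregation with an `avg` sub-aggregation), and the pandas functions compute the per-country averages and the monthly average rating locally from a DataFrame shaped like `review_data`. The `terms` aggregation assumes `location` is mapped as `keyword` (with default dynamic mapping, use `location.keyword`), the bucket size of 50 is an arbitrary choice, and the sample data is hypothetical.

```python
import pandas as pd

# Body for a search on the trustpilot index: no hits, one bucket per country with its average rating
AVG_RATING_BY_COUNTRY = {
    "size": 0,
    "aggs": {
        "by_country": {
            "terms": {"field": "location", "size": 50},
            "aggs": {"avg_rating": {"avg": {"field": "rating"}}},
        }
    },
}


def avg_rating_by_country(df):
    """Average rating per country, highest first, ignoring reviews without a location."""
    known = df[df["location"] != ""]
    return known.groupby("location")["rating"].mean().sort_values(ascending=False)


def monthly_rating(df):
    """Mean rating and number of reviews per calendar month."""
    return df.groupby(df["date"].dt.to_period("M"))["rating"].agg(["mean", "count"])


# Hypothetical sample in the same shape as review_data
sample = pd.DataFrame({
    "location": ["PL", "PL", "GB", "GB", ""],
    "rating": [4, 4, 5, 1, 2],
    "date": pd.to_datetime(["2019-10-03", "2019-10-20", "2020-06-01", "2020-06-15", "2020-06-20"]),
})
print(avg_rating_by_country(sample))
print(monthly_rating(sample))
```

With the 8.x client, the aggregation can be sent as `es.search(index="trustpilot", **AVG_RATING_BY_COUNTRY)`.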

The process of developing these graphics and putting the dashboard together was not unduly difficult, thanks to Kibana’s user-friendly interface. I was particularly impressed with the “drag-and-drop” feature, which automatically finds the optimum visualization for the data selected, allowing you to spend more time focused on the information you want to see rather than deciding on the chart style, which axes to place a field on, and so on.

Final Words

I was quite satisfied with the outcome of the web scraping portion of the assignment. Building my own dataset from an ordinary webpage full of "untapped" data was rewarding, and the same approach lets you pull data from any webpage you are interested in into tabular form so that it can be visualized and examined further. The BeautifulSoup package, which was designed for tasks like this, performed admirably, and the generator function made uploading the collected data a snap.

Looking back at the visualization side, there would have been more scope for an insightful dashboard had I picked a topic other than reviews. The review data limited both how it could be presented and how much analysis could be done. Working with data for a sports team, looking into the performance of individual players or the team as a whole, could be an idea for a future project (although, as an Arsenal fan, I might just choose to stick with review data).

Contact X-Byte Enterprise Crawling for any further details.
