For businesses, web scraping has opened up dozens of new possibilities, letting them base strategic decisions on publicly available information. Of course, before incorporating web scraping into your regular company operations, you must first determine which information is useful.

According to a 2019 survey, search accounted for 29% of all website traffic worldwide. These figures show that search engines are packed with useful public data. In this blog, we’ll cover search engine scraping, useful data sources, common difficulties, and their solutions.

What is Search Engine Scraping?

Search engine scraping is the automated process of gathering public information, such as URLs and descriptions, from search engines. It’s a type of web scraping that targets search engines exclusively. Understanding which sources of information are useful for your company, or for a research project, greatly increases the overall effectiveness of web scraping and analysis.

To extract publicly available data from search engines, you will need specialized automated programs called search engine scrapers. They let you gather search results for any query and deliver the information in a structured format.

Useful Data Sources from Search Engines

Typically, businesses collect public information from SERPs (Search Engine Results Pages) to improve their rankings and increase organic website traffic. Some companies also scrape search engines on behalf of other businesses and share their findings to help those businesses become more visible.

Scraping Search Engine Results

The most basic data companies obtain from search engines is the set of keywords related to their sector, along with their SERP rankings for those keywords. Knowing what it takes to rank well on SERPs can help a business decide whether it’s worth trying something its competitors are doing. Knowing what’s going on in the sector can also help you develop your SEO and digital marketing efforts.
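Once the SERPs for your keywords have been scraped, checking where you rank comes down to finding your domain’s position in the ordered list of results. Here is a minimal Python sketch. It assumes you already have the organic result URLs for one query in rank order. The helper name `find_rank` and the sample URLs are hypothetical:

```python
from typing import List, Optional
from urllib.parse import urlparse


def find_rank(result_urls: List[str], domain: str) -> Optional[int]:
    """Return the 1-based SERP position of the first result on `domain`, or None."""
    domain = domain.lower()
    for position, url in enumerate(result_urls, start=1):
        host = (urlparse(url).hostname or "").lower()
        # Count the bare domain and any of its subdomains (e.g. blog.example.com)
        if host == domain or host.endswith("." + domain):
            return position
    return None


# Hypothetical scraped results for one keyword, in rank order
results = [
    "https://www.competitor.com/running-shoes",
    "https://blog.example.com/best-running-shoes",
    "https://example.com/shop",
]
print(find_rank(results, "example.com"))  # → 2
```

Matching on the parsed hostname rather than a plain substring avoids false hits such as `notexample.com`. Running this for the same keywords over time gives you a simple rank history.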

Scraping SERPs can also show whether a search engine returns relevant results for the queries entered. Companies extract SERP data to check whether the search phrases they target are the right ones. This information may reshape a company’s overall content and SEO strategy, since understanding which search phrases lead to material relevant to its sector helps it focus on the content it actually needs.

Companies can even examine how time and location affect individual search results by using a sophisticated, proxy-powered search engine results scraper. This is especially useful for businesses that market goods or deliver services all over the world.

SEO Monitoring

Of course, the main use of a search scraper is SEO monitoring. SERPs are full of meta titles, descriptions, rich content, knowledge graphs, and other public information. Studying this data can be very valuable. For example, it can tell the content team what actually works to achieve the highest possible SERP ranking.
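To compare the meta titles and descriptions of the pages that rank for a keyword, a scraper has to pull those fields out of raw HTML. The following is a minimal sketch that uses only Python’s standard-library `html.parser`. It assumes you have already downloaded a page’s HTML. The class and function names (`MetaExtractor`, `extract_meta`) are hypothetical:

```python
from html.parser import HTMLParser
from typing import Dict, Optional


class MetaExtractor(HTMLParser):
    """Collects the <title> text and the meta description from one HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.description: Optional[str] = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.description = attributes.get("content")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Text inside <title> may arrive in several chunks, so accumulate it
        if self._in_title:
            self.title += data


def extract_meta(html: str) -> Dict[str, Optional[str]]:
    parser = MetaExtractor()
    parser.feed(html)
    parser.close()
    return {"title": parser.title.strip(), "description": parser.description}


page = (
    "<html><head><title> Best Running Shoes </title>"
    '<meta name="description" content="Compare top running shoes."></head></html>'
)
print(extract_meta(page))
# → {'title': 'Best Running Shoes', 'description': 'Compare top running shoes.'}
```

A standard-library parser keeps the sketch dependency-free. A dedicated HTML library is usually more forgiving with messy real-world markup.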

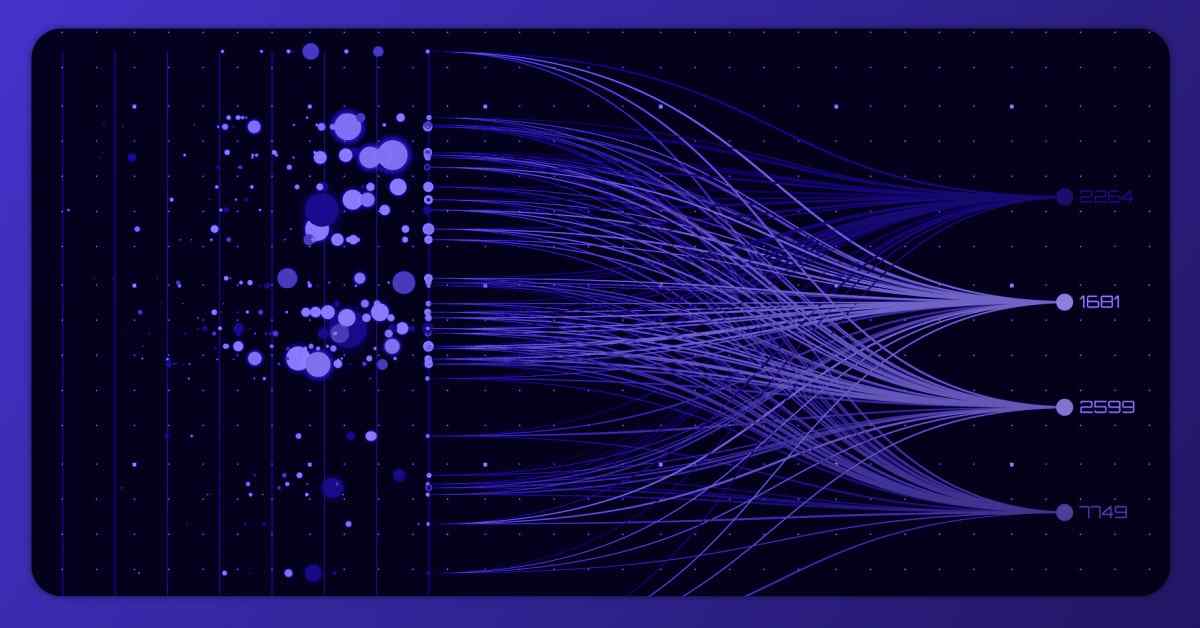

Digital Marketing

Scraping search results can also give digital advertisers an edge by letting them monitor how and when their competitors’ ads appear. Having this information does not mean advertisers can copy other companies’ ads. It does, however, give them the chance to study the industry and its trends when developing their own plans. Ad placement is critical to achieving good results.

Scraping Images

Scraping publicly accessible photos from search engines can be useful for a variety of reasons, including trademark protection and SEO optimization.

Brand protection companies search the internet for counterfeit items and pursue those who infringe on their clients’ trademarks and copyrights. Collecting photographs of publicly listed items can help determine whether or not a product is genuine.

Analyzing public photos and their metadata for SEO purposes helps optimize images for search engines. ALT text, for example, is critical: the more descriptive data surrounds an image, the more useful it is to search engines.
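One concrete check that follows from this is finding images whose ALT text is missing or blank. Below is a minimal standard-library sketch. It assumes you have the page HTML as a string. The names `ImageCollector` and `images_missing_alt` are hypothetical:

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class ImageCollector(HTMLParser):
    """Records (src, alt) for every <img> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.images: List[Tuple[Optional[str], Optional[str]]] = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            self.images.append((attributes.get("src"), attributes.get("alt")))


def images_missing_alt(html: str) -> List[Optional[str]]:
    collector = ImageCollector()
    collector.feed(html)
    collector.close()
    # A missing alt attribute and a blank one are both reported for review
    return [src for src, alt in collector.images if not (alt or "").strip()]


page = (
    '<img src="shoe.jpg" alt="Red trail running shoe">'
    '<img src="logo.png">'
    '<img src="banner.jpg" alt="  ">'
)
print(images_missing_alt(page))  # → ['logo.png', 'banner.jpg']
```

An empty `alt=""` is legitimate for purely decorative images. Treat the output as a review list, not a list of errors.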

Extracting Shopping Results

Many firms market their products on the shopping platforms of the major search engines. Gathering publicly available data, such as prices, reviews, and product titles and descriptions, can help you track and learn about competitors’ brand positioning, pricing, and marketing methods.

Keywords are an important component of online shopping platforms. Trying multiple keywords and extracting the products shown for each will help you understand the ranking system as a whole and give you insight into how to keep your business competitive and profitable.

News Results Scraping

News platforms have become a valuable resource for media scholars and companies, and they are now integrated into most major search engines. The latest stories from the most prominent news sites are compiled in one place, creating a massive public database that can be used for a variety of applications.

Analyzing this data can help people stay aware of current trends and of what’s happening across various industries. It can also show how news is presented differently depending on location, how dynamic sites deliver information, and so on. The number of news portals is practically limitless, so web scraping has made projects that analyze large volumes of news stories far more manageable.

Other Data Sources

Researchers can also obtain public data for specific scientific purposes from other search engine data sources. Academic search engines, which index scientific articles from all over the web, are one of the best examples. Researchers can gather a great deal of information by searching for specific terms and examining which papers are returned. The public data available for research includes titles, links, citations, related links, authors, publishers, and snippets.

Is Web Scraping Legal?

The legality of web scraping is a hotly debated question in the data collection industry. It’s worth noting that web scraping can be lawful if it’s done without breaking any rules governing the target sources or the data itself. With that in mind, we recommend that you get legal advice before engaging in any scraping activity.

How to Extract Search Results?

As we noted earlier, scraping search engines is valuable for a variety of commercial objectives, but gathering the necessary data presents a number of obstacles. As search engines adopt more advanced methods of detecting and blocking web scraping crawlers, you must take more steps to avoid being blocked:

1. Use of Proxies for Scraping Search Engines

Proxies are intermediaries that route your requests through different IP addresses, making your activity harder to track. They provide access to geo-restricted information and reduce the probability of being blocked. Importantly, you must select the appropriate proxy type for your target.
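In Python’s standard library, routing requests through a proxy means attaching a `urllib.request.ProxyHandler` to an opener. A minimal sketch, assuming a hypothetical proxy endpoint with credentials embedded in the URL (the host, port, and `build_proxied_opener` name are placeholders, not a real provider’s API):

```python
import urllib.request


def build_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that routes both HTTP and HTTPS traffic through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


# Hypothetical endpoint; substitute the host, port, and credentials from your provider
opener = build_proxied_opener("http://user:password@proxy.example.com:8080")
# response = opener.open("https://www.example.com/search?q=running+shoes", timeout=10)
```

Every request made through `opener` then leaves from the proxy’s IP address rather than yours. Which proxy type works best depends on the target and is not something this sketch decides.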

2. Rotate IP Address

You should not scrape search engines from the same IP address for an extended period of time. Instead, use an IP rotation strategy in your web scraping applications to avoid being blacklisted.
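The simplest rotation strategy is round-robin: each request takes the next proxy from a fixed pool. The following minimal sketch assumes a hypothetical list of proxy URLs. The `ProxyRotator` class name is illustrative:

```python
import itertools
from typing import List


class ProxyRotator:
    """Hands out proxies from a fixed pool in round-robin order."""

    def __init__(self, proxies: List[str]) -> None:
        if not proxies:
            raise ValueError("the proxy pool must not be empty")
        self._pool = itertools.cycle(proxies)

    def next_proxy(self) -> str:
        return next(self._pool)


# Hypothetical proxy endpoints
rotator = ProxyRotator([
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
])
for _ in range(4):
    print(rotator.next_proxy())  # proxy1, proxy2, proxy3, then proxy1 again
```

Round-robin spreads requests evenly across IPs. A production rotator would typically also retire proxies that start getting blocked, or pick them randomly.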

3. Optimize the Scraping Process

You will almost certainly be blocked if you try to collect large volumes of data all at once. Avoid sending large bursts of requests to the servers, and spread them out over time instead.
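One way to spread requests out is to pause for a random interval between them, so traffic does not arrive in a fixed, machine-like rhythm. In this minimal sketch, the 2 to 5 second range is an arbitrary assumption, not a known safe limit for any search engine. `polite_map` and `fetch_serp` are hypothetical names:

```python
import random
import time
from typing import Callable, Iterable, List, Optional, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def polite_map(
    fetch: Callable[[T], R],
    items: Iterable[T],
    min_delay: float = 2.0,
    max_delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> List[R]:
    """Call fetch(item) for each item in turn, pausing a random interval between calls."""
    rng = rng or random.Random()
    results: List[R] = []
    for index, item in enumerate(items):
        if index > 0:
            # Randomized gap so requests don't arrive in a fixed, bot-like rhythm
            sleep(rng.uniform(min_delay, max_delay))
        results.append(fetch(item))
    return results


# fetch_serp is a hypothetical function that downloads one results page:
# pages = polite_map(fetch_serp, ["running shoes", "trail running shoes"])
```

The `sleep` and `rng` parameters are injectable, so the pacing logic can be tested without actually waiting.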

4. Adjust the Most Common HTTP Headers and Fingerprints

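Adjusting the most common HTTP headers, such as User-Agent and Accept-Language, along with browser fingerprints, is an important but often overlooked way to reduce the likelihood of a web scraper being blacklisted.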
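For the header part of this advice, a scraper can send browser-like headers and vary the User-Agent between requests. The minimal sketch below uses a few sample User-Agent strings as assumptions that need to be kept current. `build_headers` is a hypothetical helper. Fingerprinting also relies on signals beyond headers, such as TLS and JavaScript behavior, which this sketch does not address:

```python
import random
import urllib.request
from typing import Dict, Optional

# Example desktop User-Agent strings; real deployments should keep these current
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.1 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def build_headers(language: str = "en-US", rng: Optional[random.Random] = None) -> Dict[str, str]:
    """Return browser-like request headers with a randomly chosen User-Agent."""
    rng = rng or random.Random()
    primary = language.split("-")[0]
    return {
        "User-Agent": rng.choice(USER_AGENTS),
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
        # Keep the language consistent with the location the proxy exits from
        "Accept-Language": f"{language},{primary};q=0.9",
    }


request = urllib.request.Request(
    "https://www.example.com/search?q=running+shoes", headers=build_headers("de-DE")
)
```

Headers that contradict each other, such as a German Accept-Language sent from a US proxy, can look more suspicious than default headers. For that reason the language is passed in explicitly.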

5. HTTP Cookies Management

After each IP change, you should disable or clear HTTP cookies. Always test what actually works for your search engine scraping operation.
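With Python’s standard library, you can tie cookies to a single IP by giving each proxy its own `http.cookiejar.CookieJar`. Starting a new jar on rotation has the same effect as clearing the old one. This is a minimal sketch, assuming hypothetical proxy URLs. The `RotatingSession` class is illustrative, not part of any library:

```python
import http.cookiejar
import itertools
import urllib.request
from typing import List


class RotatingSession:
    """Pairs each proxy with a fresh cookie jar so cookies never carry over between IPs."""

    def __init__(self, proxies: List[str]) -> None:
        if not proxies:
            raise ValueError("the proxy pool must not be empty")
        self._proxies = itertools.cycle(proxies)
        self.rotate()

    def rotate(self) -> None:
        """Switch to the next proxy and discard every cookie collected so far."""
        self.proxy = next(self._proxies)
        self.cookies = http.cookiejar.CookieJar()
        self.opener = urllib.request.build_opener(
            urllib.request.ProxyHandler({"http": self.proxy, "https": self.proxy}),
            urllib.request.HTTPCookieProcessor(self.cookies),
        )


# Hypothetical proxy endpoints
session = RotatingSession(["http://proxy1.example.com:8080", "http://proxy2.example.com:8080"])
# html = session.opener.open("https://www.example.com/search?q=running+shoes", timeout=10).read()
session.rotate()  # new IP, empty cookie jar
```

Within one IP, cookies still persist across requests, which is how a normal browser session behaves. They are dropped only when the IP changes.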

Solution for Collecting Data: SERP Scraper API

X-Byte Enterprise Crawling has created the SERP Scraper API, a simpler and more effective alternative for collecting SERP data.

With this powerful tool, you can extract massive amounts of public information in real time from the main search engines. The SERP Scraper API is a helpful tool for keyword research, ad tracking, and brand protection.

Here are a few examples of what you can do with the SERP Scraper API:

1. Gather the Key Data Points from the Leading SERPs

You can collect a variety of relevant data points, including advertisements, images, news, keyword data, featured snippets, and more. You’ll need this information to spot fraud and improve your SEO rankings.

2. Target Any Country at the Coordinate Level

The SERP Scraper API is powered by a robust proxy network that spans the world. Select any of the 195 nations and extract SERP data down to the city level.

3. Get Clean and Easy-to-Analyze Data

You don’t have to deal with jumbled datasheets. Instead, you can start analyzing data as soon as you’ve extracted it. Delivery in JSON and CSV formats makes the data much easier to handle.

4. Get Past IP Blocks and CAPTCHAs

Thanks to the proprietary Proxy Rotator, you can stay resilient to SERP layout changes and get around the anti-scraping measures search engines use.

5. Save the Scraped Data

After the scrape is completed, the results will be transferred to your cloud storage account.

SERP Scraper API is a great scraping partner for overcoming the most frequent search engine scraping issues and extracting the data you need quickly and accurately.

What Are the Challenges of Scraping Search Engines?

Scraping SERP data has a great deal of value for organizations of all sizes, but it also has several drawbacks that can make web scraping more difficult. The issue is that it’s hard for search engines to tell good bots from bad ones. As a result, they frequently mistake legitimate scraping bots for malicious ones, resulting in bans. Before you start scraping SERPs, you should be aware of the security precautions search engines use.

IP Blocks

IP blocks can cause plenty of problems if you don’t plan for them. To begin with, search engines can see the IP address each request comes from. While it runs, a web scraper sends a large number of requests to the servers to gather the information it needs. If the requests come from the same IP address every time, they will be denied, because that pattern doesn’t look like traffic from regular users.
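When requests start being refused, retrying immediately from the same IP usually makes things worse. A common technique is exponential backoff with random "full jitter": after each refusal, you wait a random time up to a doubling cap, and rotate to another proxy before retrying. In this minimal sketch, the assumption is that refusals arrive as HTTP 429 or 503. In practice, a search engine may serve a CAPTCHA page instead. `fetch` and `switch_proxy` are hypothetical helpers:

```python
import random
from typing import Optional

# Status codes commonly returned when a server is rate-limiting or temporarily refusing you
RETRYABLE_STATUSES = {429, 503}


def backoff_delay(attempt: int, base: float = 1.0, cap: float = 60.0,
                  rng: Optional[random.Random] = None) -> float:
    """'Full jitter' exponential backoff: a random wait in [0, min(cap, base * 2**attempt)]."""
    rng = rng or random.Random()
    return rng.uniform(0.0, min(cap, base * 2 ** attempt))


def should_retry(status: int, attempt: int, max_attempts: int = 5) -> bool:
    """Retry only rate-limit style responses, and only while attempts remain."""
    return status in RETRYABLE_STATUSES and attempt + 1 < max_attempts


# Sketch of the retry loop (fetch and switch_proxy are hypothetical helpers):
# for attempt in range(5):
#     status, body = fetch(url)
#     if not should_retry(status, attempt):
#         break
#     switch_proxy()
#     time.sleep(backoff_delay(attempt))
```

If a 429 response carries a `Retry-After` header, honoring it is generally preferable to a computed delay.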

CAPTCHAs

CAPTCHA is another common security technique. When a system suspects a user is a bot, it displays a CAPTCHA test that asks the user to type in a code correctly or recognize objects in images. Only the most advanced scraping programs can handle CAPTCHAs, so for most scrapers, a CAPTCHA almost always ends in an IP block.

Unstructured Data

Successful data extraction is only half of the equation. If the data you’ve retrieved is unstructured and difficult to interpret, your efforts may be for naught. With this in mind, before selecting a web scraping tool, consider what format you want the data delivered in.
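Whichever tool you use, it helps to settle on one schema for every result and export it in standard formats. The minimal sketch below assumes a hypothetical four-field schema for organic results (`keyword`, `position`, `title`, `url`). It writes the same records as JSON and CSV using only the standard library:

```python
import csv
import io
import json
from dataclasses import asdict, dataclass, fields
from typing import List


@dataclass
class SerpResult:
    """One organic result; these field names are an illustrative schema."""
    keyword: str
    position: int
    title: str
    url: str


def to_json(results: List[SerpResult]) -> str:
    return json.dumps([asdict(r) for r in results], indent=2)


def to_csv(results: List[SerpResult]) -> str:
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(SerpResult)])
    writer.writeheader()
    for result in results:
        writer.writerow(asdict(result))
    return buffer.getvalue()


rows = [
    SerpResult("running shoes", 1, "Best Running Shoes", "https://example.com/shoes"),
    SerpResult("running shoes", 2, "Running Shoe Reviews", "https://example.org/reviews"),
]
print(to_csv(rows))
```

Defining the schema once as a dataclass keeps the JSON keys and CSV columns identical. This makes the two exports interchangeable for analysis.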

Final Words

Search engines are chock-full of useful public information. This data can help businesses stay competitive and increase revenue, since strategies built on reliable data tend to be more effective.

Acquiring this data, however, is difficult. Reliable proxies or high-quality data extraction tools can make the process easier.

If you’d like more information on scraping search engine results, contact X-Byte Enterprise Crawling today or request a free quote!