Humans have a strong desire to travel, and the tourism industry was worth billions of dollars before the coronavirus outbreak last year. Now that things are starting to calm down, the industry is regaining traction.

Finding a destination, checking the availability of hotels and accommodations, and calculating the total cost of the trip all take time and effort.

This is one of the reasons why travel fare aggregators are becoming increasingly popular. The information needed to plan and execute a good trip can be found on travel fare aggregator websites. As a result, scraping data from these websites becomes crucial.

However, these websites make extraction difficult because they do not want their content scraped in the first place. As a result, collecting their prices is difficult and time-consuming.

How Does Web Scraping Assist the Travel Industry?

In today’s world, data is important in every specialty and business. Data is even more important in travel and tourism, since information can determine whether a trip is a success or a failure. In this business, data can be used in a variety of ways, including the following:

1. Construction of Search Engines

Travel and tourism data may be used to create search engines that make information more accessible to individuals all over the world.

Typical examples include Kayak and Trivago, which are meta-search engines that make it easier to discover information about places and vacations.

2. Delivering Better Consumer Services

Data is also necessary for providing excellent customer service. Brands in this area can collect data on travel locations, accommodations, and transit rates, as well as customer preferences and comments.

3. Price Monitoring

Another way enterprises in this area can use information to benefit themselves and their customers is to adjust their rates.

Customers may seek out other brands if fares are set too high, while cutting rates below the market average can result in a significant revenue loss.

The brand must use appropriate data to find a price that works for both the company and the client.
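The balance between the two risks can be pictured with a small sketch. The function name `suggest_fare`, the 5% undercut, the 10% minimum margin, and the sample fares are all illustrative assumptions, not a pricing method used by any particular aggregator or brand.

```python
from statistics import median


def suggest_fare(competitor_fares, unit_cost, min_margin=0.10, undercut=0.05):
    """Suggest a fare slightly below the competitor median, never below cost plus margin.

    Every parameter here is an assumption made for this sketch.
    """
    if not competitor_fares:
        raise ValueError("need at least one competitor fare")
    floor = unit_cost * (1 + min_margin)                 # lowest fare that still covers cost
    target = median(competitor_fares) * (1 - undercut)   # slightly under the market middle
    return round(max(floor, target), 2)


# Hypothetical scraped fares for one route, in dollars.
fares = [220.0, 240.0, 260.0, 300.0]
print(suggest_fare(fares, unit_cost=180.0))
```

The cost floor guards against the revenue loss from underpricing, while the median-based target keeps the fare from drifting far above what competitors charge.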

Who Will Need Data from Travel Aggregators?

Customers and brands may both benefit from the data acquired from travel fare aggregators; as a result, passengers and travel managers request this data on a daily basis.

The following are some of the most commonly scraped data in this industry:

- Travel destinations, rates, rental availability in the area, and neighboring attractions

- Current and continuing discounts, as well as hotel listings, room rates, and availability

- Information on aviation, including airline routes and ticket prices, as well as timestamps

- Customer comments and reviews on vacation destinations, hotels, and items

Challenges of Scraping from Travel Aggregator Websites

We now understand why data is scraped in this sector and what types are collected. However, companies face a number of obstacles when attempting to scrape this data. The following are the most typical issues:

1. Outdated Data

Running into obsolete information is the first challenge that many brands face while scraping. A piece of information from last week or the week before may no longer apply today, depending on how quickly fares and availability change.

Finding websites that are constantly updated can be a difficult task, but it is one that must be overcome.
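One simple guard against stale data is to store a timestamp with every scraped record and discard anything older than a chosen limit. The sketch below assumes each record is a dictionary with a `scraped_at` field and uses a 24-hour limit; both the record layout and the limit are illustrative assumptions.

```python
from datetime import datetime, timedelta, timezone


def fresh_records(records, now, max_age=timedelta(hours=24)):
    """Keep only records whose 'scraped_at' timestamp is within max_age of now."""
    return [r for r in records if now - r["scraped_at"] <= max_age]


# Hypothetical records; a fixed "now" keeps the example repeatable.
now = datetime(2021, 6, 15, 12, 0, tzinfo=timezone.utc)
records = [
    {"hotel": "Hotel A", "rate": 120.0, "scraped_at": now - timedelta(hours=2)},
    {"hotel": "Hotel B", "rate": 95.0, "scraped_at": now - timedelta(days=8)},
]
print([r["hotel"] for r in fresh_records(records, now)])
```

Here only the record scraped two hours ago survives the filter; the week-old rate is dropped rather than shown as current.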

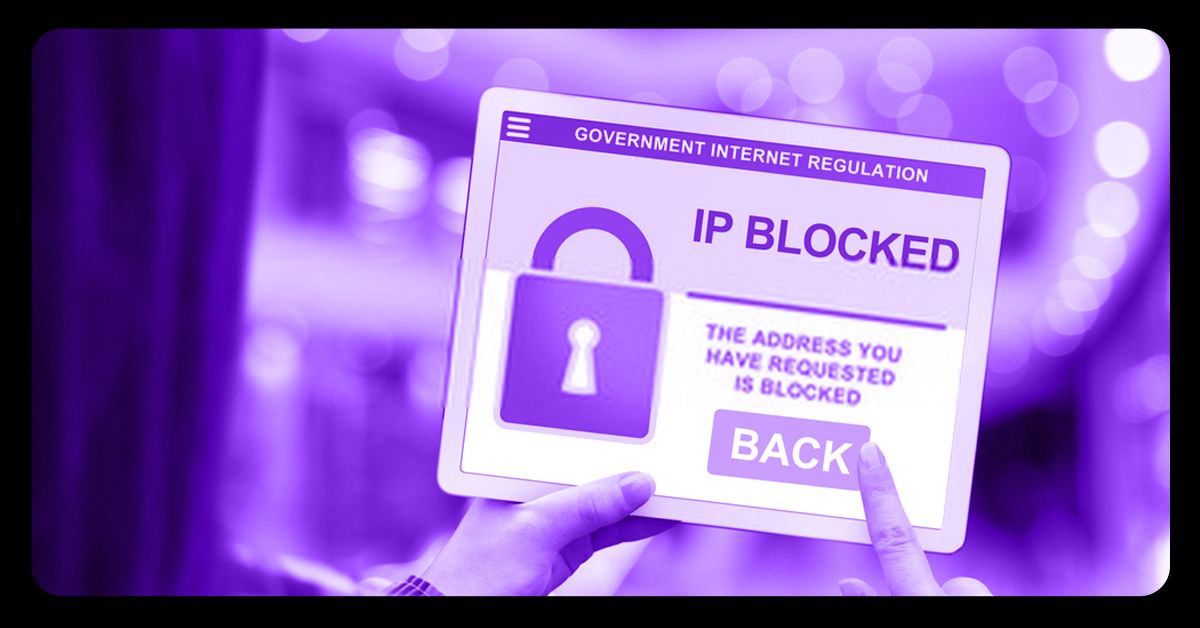

2. IP Bans and CAPTCHAs

When scraping information, it’s easy to become stuck because accurate and meaningful data extraction requires repetition. It entails returning to data sources and obtaining their material on a regular basis. Most websites dislike this, so they have measures in place to restrict an IP address that keeps returning to take data.

Second, several websites employ CAPTCHAs to distinguish genuine users from bots. Human users are then permitted, but scraping bots are prohibited. This discourages web scraping because bots are the preferred data collection mechanism.
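A scraper at least needs to recognize when it has been blocked and slow down instead of hammering the site. The sketch below is a rough heuristic under stated assumptions: it treats HTTP 403 and 429 responses, or a page that mentions a CAPTCHA, as a block, and it computes exponentially growing wait times. The function names and the specific delays are hypothetical, and real sites signal blocks in many other ways.

```python
def looks_blocked(status_code, body):
    """Heuristic: treat HTTP 403/429 or a page mentioning a CAPTCHA as a block."""
    return status_code in (403, 429) or "captcha" in body.lower()


def backoff_delays(retries, base=2.0, cap=60.0):
    """Exponentially growing wait times in seconds, capped at `cap`."""
    return [min(cap, base * (2 ** attempt)) for attempt in range(retries)]


print(looks_blocked(429, ""))
print(looks_blocked(200, "<h1>Please solve the CAPTCHA</h1>"))
print(looks_blocked(200, "<h1>Flights to Lisbon</h1>"))
print(backoff_delays(6))
```

A caller would sleep for each delay in turn before retrying, and give up once the list is exhausted.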

3. Scraping Cost

Scraping a website can be expensive. Data extraction, processing, storage, and analysis all require skilled people. When a company can’t afford to hire more people, it has to assign existing employees to this critical task. However, these employees will require training, which is costly.

This, combined with the costs of keeping information and maintaining equipment, poses significant hurdles, particularly for smaller businesses.

4. Website Complications

The majority of travel sector websites are complicated and have layouts that change daily. This can be a problem for scrapers, whether the data is collected by a basic bot or by a human.

Most basic bots become overwhelmed and crash when a layout or website structure changes, whereas human fare scrapers must relearn how to navigate each new layout until it changes again.
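A bot can be made less brittle by trying several known page layouts in turn and raising a clear, named error when none of them match, so the change is noticed instead of causing an unexplained crash. The sketch below uses regular expressions for brevity; the two patterns, the `LayoutChanged` exception, and the sample HTML are all hypothetical, and a real HTML parser would generally be sturdier than regular expressions.

```python
import re

# Hypothetical patterns for two layouts of the same page; real sites will differ.
PRICE_PATTERNS = [
    re.compile(r'<span class="price">\$([\d,]+\.\d{2})</span>'),
    re.compile(r'data-price="([\d,]+\.\d{2})"'),
]


class LayoutChanged(Exception):
    """Raised when no known pattern matches, so the change is noticed early."""


def extract_price(html):
    """Try each known layout in turn and return the first price found."""
    for pattern in PRICE_PATTERNS:
        match = pattern.search(html)
        if match:
            return float(match.group(1).replace(",", ""))
    raise LayoutChanged("no known price pattern matched")


old_layout = '<span class="price">$1,249.00</span>'
new_layout = '<div class="fare" data-price="1249.00"></div>'
print(extract_price(old_layout), extract_price(new_layout))
```

When a site ships a third layout, `extract_price` raises `LayoutChanged`, which can trigger an alert to add a new pattern.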

5. Geo-Restrictions

This is the final and most difficult hurdle that brands must overcome when scraping prices from aggregators’ webpages.

Some websites use geo-restrictions to ensure that individuals from specific regions are not able to access them.

This method detects where users are surfing from and prevents them from continuing if they are coming from a prohibited location.

Fare Web Scraper API as a Solution

Web scraping is the technique of gathering a large volume of data from many sources, using sophisticated tools to do so as efficiently as possible.

A fare scraper API by X-Byte Enterprise Crawling is one such tool: high-level software designed to interface with aggregators’ websites and collect their content with ease. It detects and adapts to changes on the webpage.

To get around IP bans and geo-restrictions, web scraping is combined with proxies, which hide the original IP address and location and rotate through alternative ones.
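Proxy rotation can be pictured as a round-robin pool that skips addresses a site has already banned. The sketch below is a generic illustration, not the implementation of any particular scraper API; the `ProxyPool` class and the proxy URLs are hypothetical.

```python
from itertools import cycle


class ProxyPool:
    """Round-robin over proxy URLs, skipping any that have been marked as banned."""

    def __init__(self, proxy_urls):
        self._urls = list(proxy_urls)
        self._banned = set()
        self._cycle = cycle(self._urls)

    def mark_banned(self, url):
        self._banned.add(url)

    def next_proxy(self):
        # Look at each proxy at most once per call so we never loop forever.
        for _ in range(len(self._urls)):
            url = next(self._cycle)
            if url not in self._banned:
                return url
        raise RuntimeError("all proxies are banned")


# Hypothetical proxy endpoints in different regions.
pool = ProxyPool([
    "http://us.proxy.example:8080",
    "http://de.proxy.example:8080",
    "http://sg.proxy.example:8080",
])
first = pool.next_proxy()
pool.mark_banned("http://de.proxy.example:8080")
second = pool.next_proxy()
print(first, second)
```

The URL returned by `next_proxy` would then be handed to the HTTP client's proxy setting, for example `urllib.request.ProxyHandler` in the Python standard library.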

To ensure that information is recorded on a regular basis, the procedure is frequently automated, which leaves little room for obsolete information.

Finally, these tools take little work, no training, and only minor maintenance, which removes much of the cost of collecting data.

Final Words

Because of technological advancements, big data is becoming a crucial component of the travel and tourism sector.

As a result, data scraping is a must. Although there are certain problems, using a fare scraper API and other tools will make the process smoother, faster, and more efficient.

For more information, contact X-Byte Enterprise Crawling or request a free quote!