Today, we will see how to scrape MercadoLibre product data with Python and BeautifulSoup in a simple way.

First, make sure you have Python 3 installed. If you don’t, install it before proceeding. Then install BeautifulSoup:

    pip3 install beautifulsoup4

We also need the requests, soupsieve, and lxml libraries to fetch the pages, parse the HTML, and use CSS selectors. Install them with:

    pip3 install requests soupsieve lxml

Once they are installed, open your editor and type:

    # -*- coding: utf-8 -*-
    from bs4 import BeautifulSoup
    import requests

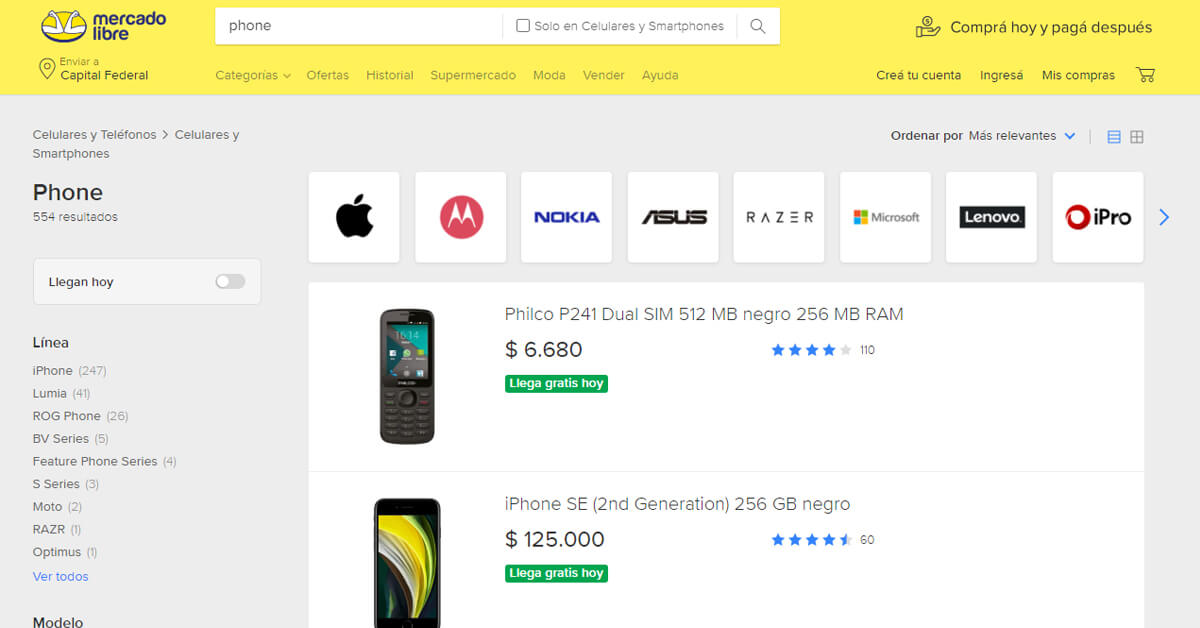

Now, go to MercadoLibre’s search page as well as examine the data that we can have.

That is how it will look.

Back in our code, let’s try to fetch the page while pretending to be a browser, by sending a browser User-Agent header:

    # -*- coding: utf-8 -*-
    from bs4 import BeautifulSoup
    import requests

    # Pretend to be a desktop Safari browser
    headers = {'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'}

    url = 'https://listado.mercadolibre.com.mx/phone#D[A:phone]'

    response = requests.get(url, headers=headers)

    # Print the raw HTML of the page (print(response) alone only shows the status)
    print(response.text)

Save this as scrapeMercado.py and run it:

    python3 scrapeMercado.py

You should see the entire HTML of the page.

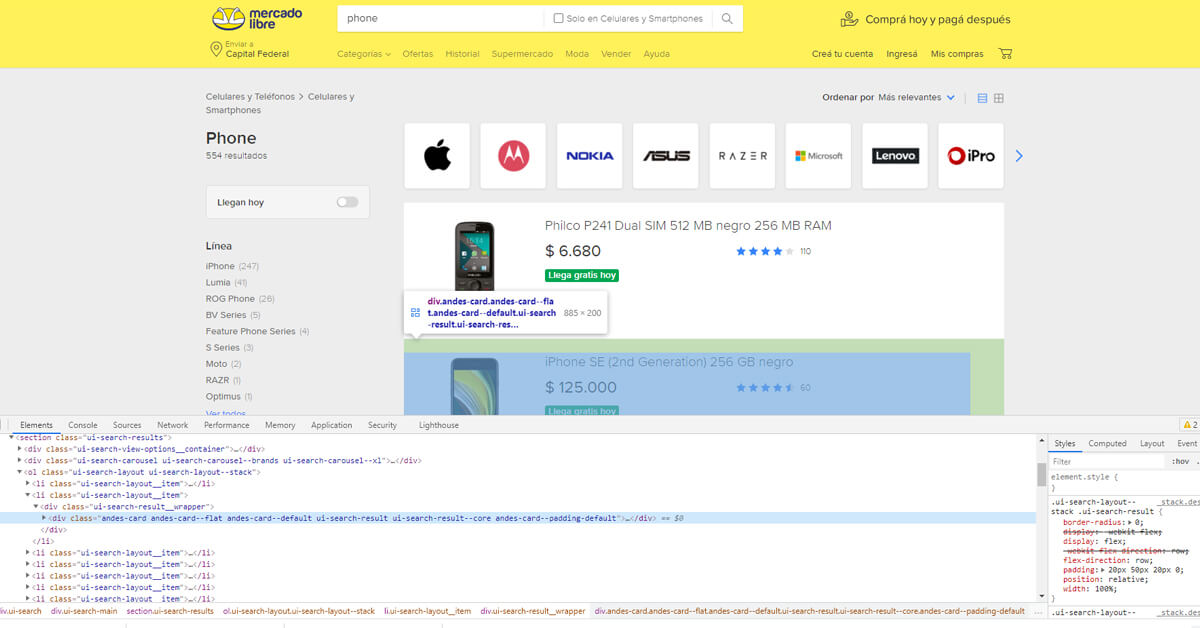
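If you plan to request more than one page, a `requests.Session` lets you set the browser headers once and reuses the underlying connection. The sketch below shows one way to do that; `make_session` and `fetch_html` are hypothetical helper names, and the 10-second timeout is an arbitrary choice, not something MercadoLibre requires.

```python
import requests

# Same browser User-Agent as in the script above
HEADERS = {
    'User-Agent': ('Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) '
                   'AppleWebKit/601.3.9 (KHTML, like Gecko) '
                   'Version/9.0.2 Safari/601.3.9'),
}


def make_session():
    """Hypothetical helper: a Session that sends HEADERS with every request."""
    session = requests.Session()
    session.headers.update(HEADERS)  # overrides the default python-requests User-Agent
    return session


def fetch_html(session, url, timeout=10):
    """Hypothetical helper: GET a page and return its HTML as text.

    raise_for_status() turns 4xx/5xx responses into an exception instead of
    silently handing an error page to the parser.
    """
    response = session.get(url, timeout=timeout)
    response.raise_for_status()
    return response.text


# Usage (makes a network call):
# html = fetch_html(make_session(), 'https://listado.mercadolibre.com.mx/phone#D[A:phone]')
```

Creating the session once and passing it around keeps the headers in one place, which matters once the script fetches many result pages.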

Now, let’s use CSS selectors to get the data we need. To do that, open the page in Chrome and use the inspect tool. We notice that each element with the class ‘.results-item’ holds all the information for an individual product.

If you look closely, the product title is contained in an h2 element inside each results-item, so we can get it like this:

    # -*- coding: utf-8 -*-
    from bs4 import BeautifulSoup
    import requests

    headers = {
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.11 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9',
        'Accept-Encoding': 'identity',  # ask for an uncompressed response
    }
    url = 'https://listado.mercadolibre.com.mx/phone#D[A:phone]'

    response = requests.get(url, headers=headers)
    soup = BeautifulSoup(response.content, 'lxml')

    # Each .results-item block holds one product
    for item in soup.select('.results-item'):
        try:
            print('---------------------------')
            # The product title is the text of the block's <h2>
            print(item.select('h2')[0].get_text())
        except IndexError:
            # This block has no <h2>, so skip it
            print('')

It selects every results-item block, loops through them, finds the h2 title element in each, and prints its text.

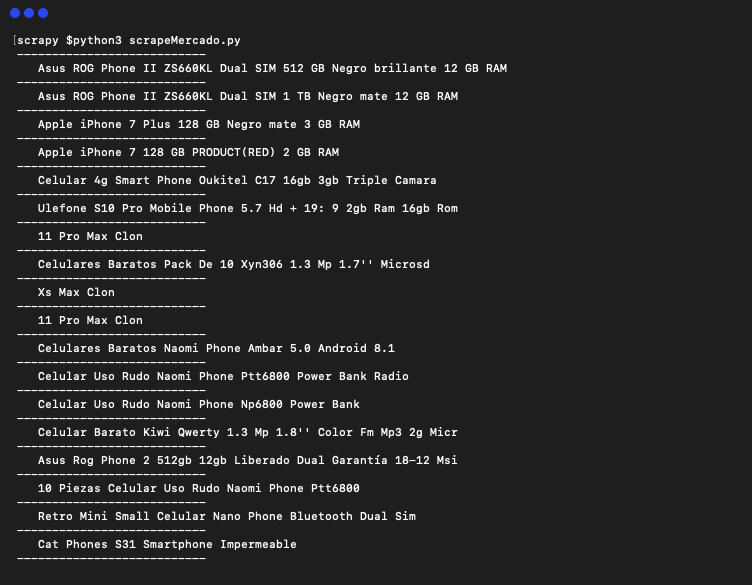

Therefore, whenever you run that, you have

Great News!! We have got product titles.
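The loop above prints titles as it goes and relies on `[0]` plus a `try/except`. A slightly more reusable variant collects the titles into a list and uses `select_one`, which returns `None` when nothing matches. The HTML fragment here is hypothetical, modeled only on the `.results-item` / `h2` structure described above, so the snippet runs without touching the site; it also uses Python's built-in `html.parser` instead of `lxml` to avoid the extra dependency (swap in `'lxml'` as in the article if you prefer).

```python
from bs4 import BeautifulSoup

# Hypothetical markup mimicking the structure described above:
# one .results-item block per product, with the title inside an <h2>.
SAMPLE_HTML = """
<ol>
  <li class="results-item"><h2> Phone A 64 GB </h2></li>
  <li class="results-item"><h2>Phone B 128 GB</h2></li>
  <li class="results-item"><div>block without a title</div></li>
</ol>
"""


def extract_titles(html):
    """Return the stripped <h2> text of every .results-item that has one."""
    soup = BeautifulSoup(html, 'html.parser')
    titles = []
    for item in soup.select('.results-item'):
        h2 = item.select_one('h2')  # None if this block has no <h2>
        if h2 is not None:
            titles.append(h2.get_text(strip=True))
    return titles


print(extract_titles(SAMPLE_HTML))  # ['Phone A 64 GB', 'Phone B 128 GB']
```

Because `item.select_one('h2')` is called on each block rather than on the whole page, the search is scoped to that product, which is what keeps each title paired with the right listing.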

Using the same procedure, we can find the class names for the rest of the data, including the product image, link, and price.

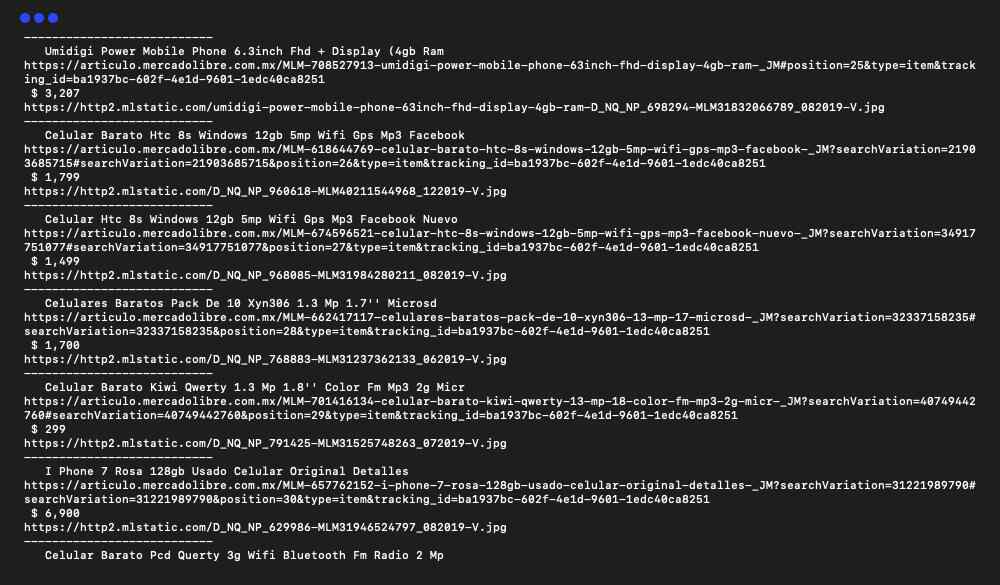

    # -*- coding: utf-8 -*-
    from bs4 import BeautifulSoup
    import requests

    headers = {
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.11 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9',
        'Accept-Encoding': 'identity',  # ask for an uncompressed response
    }
    url = 'https://listado.mercadolibre.com.mx/phone#D[A:phone]'

    response = requests.get(url, headers=headers)
    soup = BeautifulSoup(response.content, 'lxml')

    for item in soup.select('.results-item'):
        try:
            print('---------------------------')
            print(item.select('h2')[0].get_text())                              # title
            print(item.select('h2 a')[0]['href'])                               # product link
            print(item.select('.price__container .item__price')[0].get_text())  # price
            print(item.select('.image-content a img')[0]['data-src'])           # image URL
        except (IndexError, KeyError):
            # Skip blocks missing one of these elements or attributes
            print('')

When you run it, it should print everything we need for each product.
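For anything beyond a quick look, it is usually handier to collect each product into a dictionary than to print fields one by one. The sketch below reuses the selectors from the script above; `parse_products` and the sample markup are hypothetical (the markup only imitates those class names and is not copied from MercadoLibre). It uses the built-in `html.parser` so it runs without `lxml`, and it sets a field to `None` when its element or attribute is missing instead of skipping the rest of the product.

```python
from bs4 import BeautifulSoup

# Hypothetical markup using the class names from the script above
SAMPLE_HTML = """
<li class="results-item">
  <div class="image-content"><a href="#"><img data-src="https://example.com/a.jpg"></a></div>
  <h2><a href="https://example.com/phone-a">Phone A</a></h2>
  <div class="price__container"><span class="item__price">$ 3,999</span></div>
</li>
<li class="results-item">
  <h2><a href="https://example.com/phone-b">Phone B</a></h2>
</li>
"""


def text_or_none(tag):
    """Stripped text of a tag, or None if the tag was not found."""
    return tag.get_text(strip=True) if tag is not None else None


def attr_or_none(tag, name):
    """Attribute value of a tag, or None if the tag or attribute is missing."""
    return tag.get(name) if tag is not None else None


def parse_products(html):
    """Return one dict per .results-item block."""
    soup = BeautifulSoup(html, 'html.parser')
    products = []
    for item in soup.select('.results-item'):
        products.append({
            'title': text_or_none(item.select_one('h2')),
            'link': attr_or_none(item.select_one('h2 a'), 'href'),
            'price': text_or_none(item.select_one('.price__container .item__price')),
            'image': attr_or_none(item.select_one('.image-content a img'), 'data-src'),
        })
    return products


for product in parse_products(SAMPLE_HTML):
    print(product)
```

With real pages you would pass `response.content` instead of `SAMPLE_HTML`; the resulting list of dicts can then be written out with the standard `csv` or `json` modules.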

If you want to use this in production and scale to thousands of links, you will find that MercadoLibre quickly blocks your IP. In that scenario, using a rotating proxy service to rotate IPs is a must. You can use a service like Proxies API to route your calls through a pool of millions of residential proxies.
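As a rough illustration of what rotating IPs means on the client side, the sketch below cycles through a list of proxy URLs in round-robin order and builds the `proxies` mapping that `requests.get` accepts. The proxy addresses and the `next_proxies` helper are hypothetical placeholders, and a hosted service such as Proxies API may expose a different interface, so check its own documentation rather than treating this as its API.

```python
import itertools

# Placeholder proxy endpoints (hypothetical); replace with real ones
PROXY_URLS = [
    'http://user:pass@proxy1.example.com:8000',
    'http://user:pass@proxy2.example.com:8000',
    'http://user:pass@proxy3.example.com:8000',
]

# Endless round-robin iterator over the proxy list
_proxy_pool = itertools.cycle(PROXY_URLS)


def next_proxies():
    """Hypothetical helper: requests-style proxies dict for the next proxy in the cycle."""
    proxy = next(_proxy_pool)
    return {'http': proxy, 'https': proxy}


# Usage (makes a network call):
# response = requests.get(url, headers=headers, proxies=next_proxies(), timeout=10)
```

Each call to `next_proxies()` moves to the next address, so consecutive requests leave from different IPs; after the last proxy the cycle starts over.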

If you want to increase your crawling speed and don’t want to set up your own infrastructure, you can use a cloud-based crawler to scrape thousands of URLs at high speed from a network of crawlers.